Domains of AI-Awareness for Education Copyright © 2025 by Dani Dilkes is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

Centre for Teaching and Learning, Western University

London, Ontario


I

This resource provides an overview of key considerations when exploring the impact of generative AI on Teaching and Learning. It is built around 7 domains of AI-Awareness: Knowledge, Skill, Ethics, Values, Affect, Pedagogy and Interconnectedness. You can dive into the sections that align with your specific questions and interests in generative AI.

Throughout, there will be opportunities to reflect and to engage in different activities designed to allow you to explore the domains of AI-Awareness. At the end of each section, you can reflect on your own level of awareness within each domain.

These will be indicated as follows:

This resource is intended for anyone working in a higher education space, but was developed at Western University. Throughout, there will be some specific references to Western resources and policies. You are encouraged to seek out similar information at your own institutions.

The generative AI landscape, including the capabilities of generative AI, information about its social impact, and other details, is constantly and rapidly evolving. This resource should be considered a living resource and will be updated regularly to reflect changes.

If you have feedback or would like to report inaccuracies, typos, or other issues, please contact me at ddilkes2@uwo.ca or submit your feedback anonymously here.

This resource was last updated on 22-August-2025. You can find the revision history here.

The introduction of generative AI has had an unsettling effect on the education landscape. It has surfaced questions about the nature of knowledge production, the design of assessment, the roles of both learners and instructors within learning environments, and the purpose of education broadly.

There are also increasing demands for educators and students to develop AI Literacy, which can be understood broadly as the ability to understand and use generative AI technologies. Many AI literacy frameworks are built on the assumption that generative AI use is desirable, inevitable, or both; as a result, they focus on building capacity to use these technologies effectively through the development of knowledge and skill, often with an ethical or human-centred lens (Hibbert et al., 2024; UNESCO, 2024; Stanford Teaching Commons, n.d.).

Common elements across many definitions of AI Literacy include the ability to:

(Ng et al., 2021; Miao & Cukurova, 2024; Becker et al., 2024)

However, many AI Literacy frameworks fall short of critical engagement with AI technologies and practices, including allowing for the possibility of choosing not to use generative AI technologies. Furthermore, they often fail to recognize the socioemotional aspects of AI discourse.

As an alternative, the Domains of AI-Awareness Framework shifts the focus from use to critical awareness, arguing that this awareness is essential for making informed decisions about generative AI adoption and the development of new pedagogical practices.

This framework expands on the typical focus on knowledge and skills and includes:

Knowledge: What do educators need to know about how generative AI works, how it’s trained, and how it’s developed?

Ethics: What ethical considerations do educators need to be aware of when choosing to use/not use generative AI?

Values: How do an educator’s fundamental values impact their pedagogical practices and their approach to generative AI? How can an educator’s values conflict with the values of peers or organizations?

Affect: How can educators navigate their emotional response to generative AI technologies and to others with different values or practices?

Skill: What skills are required by educators and learners to use generative AI technologies effectively?

Pedagogy: What impact can generative AI technologies have on teaching and learning? How can we reimagine education to minimize the negative impact and maximize the positive potential?

Interconnectedness: How are generative AI technologies and practices impacted by larger institutional, social, and political factors?

To get started, click on any of the sections below:

Domains of AI-Awareness for Education

Part 1: Foundations of Generative AI (Knowledge)

Part 2: Ethical Considerations of Generative AI (Ethics)


Part 3: A Values-Based Approach to Generative AI (Values)


Part 4: Emotional Considerations of Generative AI (Affect)

Part 5: How to use Generative AI (Skill)

Part 6: Teaching & Learning with Generative AI (Pedagogy)

I

1

Artificial Intelligence (AI) is a quickly evolving field with increasing impact on our daily lives. Generative AI is only one subfield of AI, but it has had (and will continue to have) a profound impact on how we produce knowledge and information media.

By the end of this section, you will be able to:

Generative AI is a type of Artificial Intelligence that creates new content, including text, images, videos, audio, and computer code. It is trained to identify patterns, relationships, and characteristics of existing data, and then to mimic those patterns and relationships when creating new content. It is called “generative” because it generates new content based on these patterns. For example, the image on the right shows a new piece of artwork in the style of Gustav Klimt. Below is an AI-generated poem in the style of Shel Silverstein, generated in March 2025 using Google Gemini. As discussed in the Ethics section, generative AI technologies are quite good at mimicking style, and this ability to mimic the writing and art styles of specific authors and artists has led to numerous controversies around intellectual property and copyright.

Artificial Intelligence (AI) refers to technologies that can simulate human intelligence by performing tasks that require the ability to reason, learn, and act independently. In popular media, AI is often represented as nearly indistinguishable from humans (for example, the Cylons in Battlestar Galactica or the Replicants in Blade Runner). In the present, AI systems are not quite this advanced, but they are becoming more capable and able to tackle increasingly complex tasks.

Generative AI models are a specialized type of Artificial Intelligence built using Deep Learning techniques to create new content. Deep Learning systems use artificial neural networks, which are loosely modelled on the networks of neurons in the human brain; this allows them to perform very complex tasks like image and speech recognition and generation.

Some generative AI models are Large Language Models (LLMs). LLMs are specifically trained for natural language processing and production tasks. They are pre-trained on large amounts of text, and from this text they learn patterns of syntax and semantics in human language. Large Language Models are used in both discriminative and generative AI systems, meaning that they can be used to both classify new input (for example, to decide if a new email message is spam or not) and to generate new content (for example, to write a new email message asking for an extension on a piece of work).

LLMs are typically general-purpose, meaning that they are trained to solve common language problems. They can be used for:

Examples of Large Language Models include GPT-4, Claude, Gemini, and Llama.

Pre-trained LLMs can be further trained on smaller, task- or domain-specific datasets to allow them to achieve better results or perform specialized tasks. This process is called fine-tuning. For some LLMs, fine-tuning can be done by the end user.

For example, imagine that we wanted to create a TA ChatBot for a course on Information Ethics. An existing LLM would have a foundational understanding of language and perhaps some knowledge of the topic, but may not have specialized knowledge of all of the content covered in the course. This model could be fine-tuned using all of the course readings, lectures, and other course content. This would increase its ability to respond accurately to specific course questions.

Diffusion Models are another type of deep learning model that can be used to learn and replicate patterns in visual data. Many image- and video-generating tools use Diffusion Models. Examples of image-generating tools built on diffusion models include Adobe Firefly, Midjourney, DALL-E, and Stable Diffusion.

Most of the examples and activities in this resource will focus on LLMs.

ChatBots are one example of a modern user interface that has made access to generative AI models much easier for the general public. ChatBots are designed to simulate human conversation by accepting natural language prompts or inputs and producing responses in natural language. They can be built on LLMs, allowing them to provide sophisticated responses to prompts. Note that not all chatbots are generative; many are rule-based, meaning they have a set of pre-defined responses to prompts and do not generate unique or original text.

Popular generative AI ChatBots include:

The functionality of these tools is constantly changing; however, currently many of these tools are able to accept multiple types of input (e.g. text, images, files) and produce multiple types of output.

AI technologies are embedded in many other technological tools and processes. Examples of other places you may encounter AI on a daily basis include:

Stop and Reflect: Where is AI?

As you use different technologies over the next 24 hours, make note of where you are noticing AI capabilities appearing. What do these tools do? How does AI add to the functionality of the technology?

2

The AI model is pre-trained on a large dataset, typically of general texts or images. Specialized AI models may instead be trained on a dataset of subject- or domain-specific data. The AI analyses the data, looking for patterns, themes, relationships, and other characteristics that can be used to generate new content.

For example, early models of GPT (the LLM used by both ChatGPT and Copilot) were trained on hundreds of gigabytes of text data, including books, articles, websites, publicly available texts, licensed data, and human-generated data.

Human intervention can occur at all stages of the training.

Humans may:

The model is then released for use and can be accessed by users.

LLMs generate text in response to a user-provided prompt.

A user provides a prompt, asking the AI model to perform a specific task, generate text, produce an image, or create other types of content.

Prompt: Tell me a joke about higher education.

The AI breaks the prompt into tokens (words, parts of words, or other meaningful chunks) and analyses these tokens in order to understand the meaning and context of what is being asked.

Token Breakdown: ["Tell", "me", "a", "joke", "about", "higher", "education", "."]

NOTE: words might also be broken into subword tokens, such as ["high", "er", "edu", "cation"].

These tokens are then converted into vectors (lists of numbers) that represent the position of each token in relation to other tokens, reflecting how likely they are to occur together in sequence.

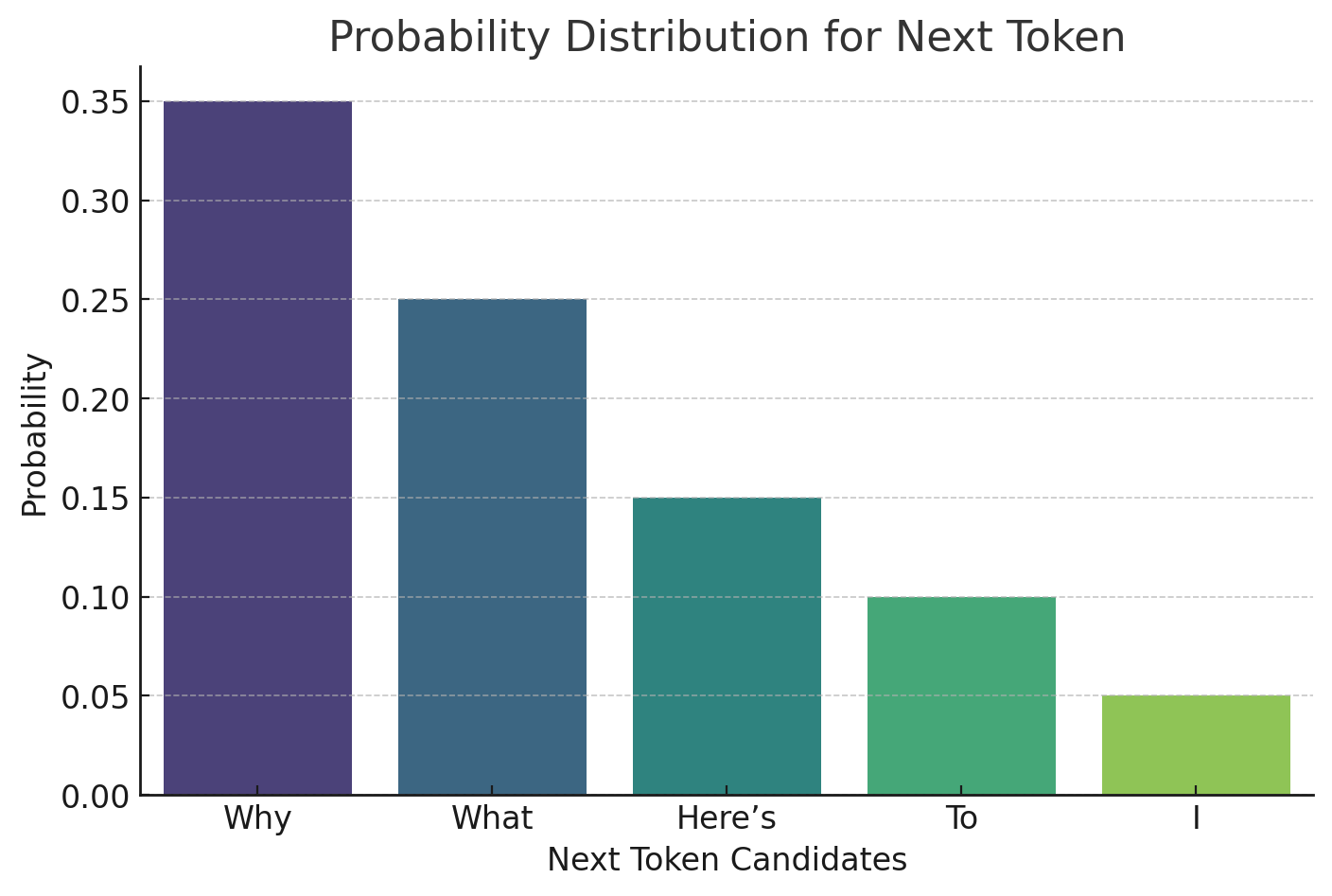
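The sketch below illustrates, under heavily simplified assumptions, how tokens might be mapped to numeric IDs and then to vectors. The vocabulary, the 3-dimensional vectors, and their values are all hypothetical and hand-picked; real models learn vectors with hundreds or thousands of dimensions during training. Cosine similarity is used here as one common way to measure how closely two vectors point in the same direction.

```python
import math

# Hypothetical vocabulary: each token is assigned an integer ID
VOCAB = {"Tell": 0, "me": 1, "a": 2, "joke": 3, "about": 4,
         "higher": 5, "education": 6, ".": 7}

# Hypothetical, hand-picked 3-dimensional vectors for three of the tokens
EMBEDDINGS = {
    "joke": [0.9, 0.1, 0.0],
    "higher": [0.1, 0.8, 0.3],
    "education": [0.2, 0.9, 0.4],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


tokens = ["Tell", "me", "a", "joke", "about", "higher", "education", "."]
token_ids = [VOCAB[t] for t in tokens]
print(token_ids)  # [0, 1, 2, 3, 4, 5, 6, 7]

sim_related = cosine_similarity(EMBEDDINGS["higher"], EMBEDDINGS["education"])
sim_unrelated = cosine_similarity(EMBEDDINGS["joke"], EMBEDDINGS["education"])
print(f"higher/education: {sim_related:.2f}, joke/education: {sim_unrelated:.2f}")
```

With these made-up values, "higher" and "education" score as more similar than "joke" and "education", which is the kind of relationship a trained model encodes at a much larger scale.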

The LLM analyses the vectors and, based on the patterns and other information learned in training, the AI model will start to predict a response to the prompt based on the probability of response tokens appearing in sequence.

For example, in responding to our request to generate a joke, the most likely starting token may be “Why”, “What”, “Here’s” etc.
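As a rough illustration of choosing the next token by probability, this Python sketch converts hypothetical raw scores (logits) for a few candidate first tokens into probabilities using the softmax function, then picks a token either greedily (the most likely one) or by weighted random sampling. The scores, including the deliberately implausible candidate "Banana", are invented for illustration; a real model scores every token in a vocabulary of many thousands.

```python
import math
import random

# Hypothetical raw scores (logits) for a few candidate first tokens of the reply
logits = {"Why": 3.0, "What": 2.0, "Here's": 1.5, "Banana": -2.0}


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtracting the max avoids overflow in exp()
    exps = {tok: math.exp(s - highest) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


probs = softmax(logits)
for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
    print(f"{tok!r}: {p:.2f}")

# Greedy decoding: always take the single most likely token
greedy_choice = max(probs, key=probs.get)

# Sampling: pick a token at random, weighted by its probability
rng = random.Random(42)  # fixed seed so the example is repeatable
sampled_choice = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
print("greedy:", greedy_choice, "| sampled:", sampled_choice)
```

Sampling, rather than always taking the most likely token, is one reason the same prompt can produce different responses on different attempts.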

Although Large Language Models do generate output based on probability, they do this using millions or billions of parameters, so the generation process is often so complex that even the creators and users of these systems don’t fully understand how a given output was produced. This opaqueness is why AI systems are often referred to as black boxes, and it makes generative AI systems susceptible to unseen biases, vulnerabilities, and other problems.

After predicting a sequence of tokens, the LLM decodes the tokens back into natural language (words and sentences readable by a human). The complete response is shared with the user.

✅ “Why did the student bring a ladder to class? To reach higher education!”
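The decoding step can be sketched in a few lines of Python. This assumes a hypothetical ID-to-token table in which each token carries its own leading space, a convention loosely similar to several real subword tokenizers, so decoding is simple concatenation. The IDs and table are invented for illustration; note how the subword pieces " edu" and "cation" rejoin into one word.

```python
# Hypothetical ID-to-token table; real vocabularies contain tens of thousands of entries
ID_TO_TOKEN = {
    10: "Why", 11: " did", 12: " the", 13: " student", 14: " bring", 15: " a",
    16: " ladder", 17: " to", 18: " class", 19: "?", 20: " To", 21: " reach",
    22: " higher", 23: " edu", 24: "cation", 25: "!",
}

# Hypothetical sequence of token IDs predicted by the model
output_ids = [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25]


def decode(ids: list[int]) -> str:
    """Turn token IDs back into readable text by looking up and joining each token."""
    return "".join(ID_TO_TOKEN[i] for i in ids)


print(decode(output_ids))
# Why did the student bring a ladder to class? To reach higher education!
```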

The user can then submit a follow-up request referencing the original request or output. This is called iteration.

For a more detailed introduction to generative AI, see this video from Google:

For a more in-depth look at how LLMs function, see this article from the Financial Times:

Generative AI exists because of the transformer

Generative AI is evolving quickly but still has certain limitations. Large Language Models (LLMs) are constrained by the data upon which they are trained and the methods through which they are trained. It’s important to be aware of the limitations of the tools that you’re using, especially if currency or accuracy is important for the tasks that you’re using generative AI to complete.

3

Generative AI is a specific type of advanced Artificial Intelligence that is able to generate new content (text, images, audio, video, etc.) based on prompts.

Large Language Models are a prevalent type of generative AI model. These are AI models trained on massive datasets of textual data, allowing them to process natural language and perform general language tasks. LLMs typically generate responses based on the probability of certain words or tokens appearing in a sequence.

Generative AI has many limitations but is constantly evolving and improving.

Limitations include:

Making Connections

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=308#h5p-4

Awareness Reflection: Knowledge

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=308#h5p-3

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=308#h5p-51

4

Financial Times Visual, Data & Podcasts. (2023, September 11). Generative AI exists because of the transformer [Interactive graphic]. Financial Times. https://ig.ft.com/generative-ai/

Schmid, P. (2025, June 30). The new skill in AI is not prompting, it’s context engineering. Retrieved August 21, 2025, from https://www.philschmid.de/context-engineering

Stryker, C. (n.d.). What is agentic AI? IBM. Retrieved August 21, 2025, from https://www.ibm.com/think/topics/agentic-ai

University of Waterloo Library. (2025, May 22). ChatGPT and generative artificial intelligence (AI): Incorrect bibliographic references [Web page]. University of Waterloo. https://subjectguides.uwaterloo.ca/chatgpt_generative_ai/incorrectbibreferences

Wikipedia contributors. (n.d.). Cylons. In Wikipedia, the free encyclopedia. Retrieved August 17, 2025, from https://en.wikipedia.org/wiki/Cylons

Wikipedia contributors. (n.d.). Replicant. In Wikipedia, the free encyclopedia. Retrieved August 17, 2025, from https://en.wikipedia.org/wiki/Replicant

YouTube. (2023, May 8). Introduction to generative AI [Video]. YouTube. https://www.youtube.com/watch?v=G2fqAlgmoPo

II

5

There are many ethical issues around the creation and use of generative AI tools that need to be taken into consideration as we decide whether and how to adopt generative AI tools in our teaching and learning practices. The goal of this chapter isn’t to impose a certain ethical position on you, but to raise awareness of different ethical concerns and enable you to make decisions that align with your own ethical position. It’s also important to be aware that learners and colleagues may have ethical views different from your own. These differences will impact individual use of generative AI and how you talk with others about AI. We will talk about this more in the sections on Affect and Values as well.

By the end of this section, you will be able to:

Some key ethical considerations are:

In this section, we’ll introduce case studies to help you think about the various ethical implications.

6

A key ethical issue around the training and use of generative AI tools is understanding how data is being used. Privacy, intellectual property and copyright all need to be considered at all stages of generative AI development and use, including text and data mining (TDM), prompting, and the output of generative AI tools.

As we learned in the first section, generative AI is trained on massive datasets of texts and images. These datasets may include copyrighted or otherwise protected data or works.

In fact, multiple lawsuits have been filed against generative AI companies over the alleged unauthorized use of copyrighted content for training.

Ongoing discussions in Canada and abroad have focused on whether AI training and TDM require permission from copyright holders or should be an exception to copyright law (Government of Canada, 2025; WIPO, 2024). As of early 2025, these questions remain unresolved.

Another question of Intellectual Property concerns the information and data that users enter into generative AI tools. Different tools have different policies around the collection and use of personal data, including the data required to set up an account and any information included in prompts. Users may inadvertently provide personal or sensitive data in their prompts or queries to AI systems, and these prompts are often saved, stored, and used by companies for training or other purposes.

Data is typically grouped into three risk levels:

The Freedom of Information and Protection of Privacy Act (FIPPA) states that you can only use personal information for the purpose for which it was collected and prohibits the use of an individual’s personal information for other purposes without their consent. The answers in completed assignments, exercises, exams, etc., are considered to be the personal information of the student. (See Western’s statement on FIPPA.)

Before adopting new technologies, it’s important for instructors to review and fully understand the privacy, security, and data management policies and practices of the tool and to encourage students to do the same. It’s also important to understand the settings and features of each tool. For example, some tools may have settings that allow you to exclude your prompts from training data or create a temporary chat.

Activity: Privacy Policies

Look at the privacy policy for a generative AI tool that you’re familiar with or interested in exploring.

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=416#h5p-14

See Western’s Cyber Security Awareness Training for more information on safe digital practices regarding privacy.

Intellectual Property is also an important consideration when using generative AI. Any information included in your prompts is subject to existing copyright laws. It’s important to ensure that you have permission to use any content (text, images, data, etc.) that you are including in your prompts.

When considering using generative AI tools to support teaching and learning, it’s especially important to keep in mind that any work created by a student as part of their academic studies is their Intellectual Property and subject to the same copyright laws (see Policy 7.16 – Intellectual Property).

Consider the cases below to examine how privacy, copyright and intellectual property might impact the use of generative AI tools.

Ethical Case Study: Privacy and Consent

A popular video conferencing platform has introduced a new AI-based tool which can be used to summarize meetings, generate action items, summarize the chat, organize ideas, and more. This tool can be turned on by the meeting host. Users may opt out only by leaving the Zoom session.

What ethical considerations are there around using this tool?

This introduces issues of privacy and consent, as well as of respecting individuals’ choice to opt out of AI use. If an instructor is using Zoom for virtual classes, they may turn on this tool in the Zoom session. If students would prefer to not have their data and contributions to the meeting used to train the AI, their only option is to leave the Zoom session. This presents inequitable access to course activities conducted through this platform. Instructors should consider obtaining full informed consent from all students in the class and refraining from using this tool if this consent is not granted by every student.

Ethical Case Study: Intellectual Property & Copyright

You are fine-tuning a Large Language Model to help your TAs with grading papers. You plan to train the LLM on all of the student submissions you’ve collected for an assignment from the past 3 years and work with it to accurately assess new papers.

What ethical considerations need to be made before moving ahead with this?

Intellectual Property – if students didn’t grant you permission to use their work in this way, it is unethical and against UWO policy to do so.

Privacy – Some student submissions may also include personal data that should not be used for training or course exemplars, including their name, student number, or personal reflections or information in the submission itself. Even if consent is received, it’s important to remove any possibly identifying data and ensure that you’re only sharing low-risk data.
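As one illustration of removing obvious identifiers before any reuse, the Python sketch below replaces email addresses, student numbers, and known names with placeholders. The patterns are assumptions: it treats any standalone 9-digit number as a student number and assumes you have a list of student names (for example, from a class list), so adjust both to your own context. Pattern matching cannot detect personal reflections or other sensitive details within the body of a submission, so a human review is still needed.

```python
import re

# Assumed patterns; adjust to your institution's formats
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
STUDENT_NUMBER_RE = re.compile(r"\b\d{9}\b")  # assumption: student numbers are 9 digits


def redact(text: str, known_names: list[str]) -> str:
    """Replace emails, 9-digit numbers, and listed names with placeholder labels."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so names inside them are covered
    text = STUDENT_NUMBER_RE.sub("[STUDENT_NUMBER]", text)
    for name in known_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


# Hypothetical example submission header
sample = "Submitted by Jordan Lee (250123456, jlee@uwo.ca). In my reflection..."
print(redact(sample, ["Jordan Lee"]))
# Submitted by [NAME] ([STUDENT_NUMBER], [EMAIL]). In my reflection...
```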

Another question of Intellectual Property & Copyright arises with the output generated by generative AI models. The question that has been raised is whether or not works produced by non-human tools can be copyrighted. Currently, the discussions in Canada suggest that in order to be copyrighted, a work must have sufficient human input (Government of Canada, 2025). Works produced with generative AI may also raise concerns about copyright infringement if they are too similar to existing works.

Ethical Case Study: Intellectual Property & Copyright

You are an instructor for a first-year business course. You have elected to use generative AI to create a series of business cases to teach key concepts rather than paying for proprietary cases. You generate the cases and make revisions as necessary. The cases work very well for your class, so you decide to share them under an open license for other instructors to use. A major publisher contacts the open library where you have shared the cases and requests that the content be removed, claiming it is a copyright infringement because the cases very closely resemble materials in their collection.

What are the ethical considerations in this scenario?

Because generative AI is not transparent, it is impossible to identify the sources that it was trained on and whether or not the generated output includes ideas or elements of copyrighted content.

Copyright laws and standards for providing credit to training data are still evolving, so there is not a clear process for checking for copyright infringement or acknowledging the works used to train AI.

At a minimum, it should be clearly acknowledged if/when content is generated with the assistance of AI. More information on how to cite AI-use is shared in the section on Pedagogy.

7

Any technology presents issues of equitable access related to cost. Even tools that are free often offer a more feature-rich or advanced version at a cost. When allowing or encouraging students to use AI tools, it’s important to be aware that many students may not be able to afford access to certain tools or to premium licenses. When designing assignments or activities, ensure that this won’t unfairly advantage students who are able to access the paid versions of these tools.

Another important consideration when adopting any new tool for teaching is the accessibility of the tool. This means ensuring that every learner is able to access and use the tool.

Basic AODA standards require web-based tools to allow for keyboard navigation and for assistive technology compatibility. A review of the accessibility of AI interfaces conducted by Langara College suggests that many AI tools are not in compliance with AODA requirements or present other accessibility barriers. When evaluating a new technology, like generative AI, review its documentation for information about built-in accessibility features to ensure that it complies with these basic requirements.

It is important to recognize that even technologies compliant with AODA standards may still present barriers to access for some learners. You may need to provide an alternative tool or an alternate way of completing an activity to ensure all students are able to participate.

For more information, see Western’s Policy on Accessibility and the Accessibility Western site.

8

The rapid growth of AI technologies (and cloud-based technologies in general) has sparked a lot of concern around the environmental impact of technological advancement.

There are environmental considerations at each stage of the AI development process:

Hardware: obtaining the physical resources required for generative AI hardware and infrastructure involves extensive mining and extraction of minerals, which can lead to deforestation and increased soil and water pollution. The production of hardware, like Graphics Processing Units (GPUs), can also consume large amounts of energy and water (Hosseini et al., 2025). The rapid growth of generative AI technologies will also contribute to the global increase in e-waste, which, when not properly disposed of, can also contribute to air, water, and soil pollution. A report from 2022 indicates that only 22% of e-waste is properly recycled (Crownhart, 2024).

Training: Training generative AI models requires significant amounts of energy. For example, it has been estimated that creating GPT-3 resulted in carbon dioxide emissions equivalent to the amount produced by 123 gasoline-powered vehicles driven for a year (Saenko, 2023).

Usage: using generative AI also has a substantial water footprint and significant carbon emissions. It is estimated that a ChatGPT dialogue of 20-50 prompts uses approximately 500 mL of water (McLean, 2023). Estimates suggest that by 2027, global use of AI technologies will account for water withdrawal equivalent to 4-6 times that of Denmark, or half that of the United Kingdom (Li, Islam & Ren, 2023). In contrast, however, an analysis conducted in 2024 suggests that the carbon emissions of content creation (text and images) may actually be lower for AI-generated content than for human-produced content (Tomlinson, Black, Patterson, & Torrance, 2024).
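To put the dialogue-level water estimate in per-prompt terms, the short Python calculation below divides the roughly 500 mL figure attributed to McLean (2023) across 20 and 50 prompts. The classroom scenario at the end (30 students sending 10 prompts each) is hypothetical and only shows how the estimate scales; it inherits all the uncertainty of the original figure.

```python
# Figure from the text: roughly 500 mL of water per ChatGPT dialogue of 20-50 prompts
DIALOGUE_WATER_ML = 500
MIN_PROMPTS, MAX_PROMPTS = 20, 50


def water_per_prompt_ml(prompts_per_dialogue: int) -> float:
    """Approximate water use per prompt, in millilitres."""
    return DIALOGUE_WATER_ML / prompts_per_dialogue


low = water_per_prompt_ml(MAX_PROMPTS)   # more prompts share the same 500 mL
high = water_per_prompt_ml(MIN_PROMPTS)  # fewer prompts share the same 500 mL
print(f"Roughly {low:.0f}-{high:.0f} mL of water per prompt")

# Hypothetical classroom scenario (not from the source): 30 students, 10 prompts each
students, prompts_each = 30, 10
total_prompts = students * prompts_each
print(f"{total_prompts} prompts: about {total_prompts * low / 1000:.1f}-"
      f"{total_prompts * high / 1000:.1f} L of water")
```

This works out to roughly 10-25 mL per prompt, or about 3-7.5 L for the hypothetical class activity.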

Data centres, though not limited to generative AI technologies, are becoming one of the largest consumers of energy, currently accounting for 3% of global energy consumption (Cohen, 2024). They also require large amounts of water for cooling. There are more environmentally sustainable approaches to cooling data centres, but these are substantially more expensive, which could be seen as another example of values-friction (sustainability vs. profitability), as discussed in section 3.2 (Ammachchi, 2025).

Often, the largest environmental impact of technological development occurs in already disadvantaged communities, perpetuating existing inequities. For example, a study in the US shows that high-pollutant data centres were more likely to be built in racialized communities (Booker, 2025).

Many of these environmental concerns are not new, but generative AI has brought a renewed focus on the environmental impact of digital technologies and rapid technological advancement. The environmental impact is a prime example of the complexity of the generative AI conversation as it highlights tensions in values and priorities, social inequities, and the affective nature of these conversations.

See AI’s impact on energy and water usage for a review of recent research on the environmental impact of generative AI technologies.

9

Many AI models are trained on data where social biases are present. These biases are then encoded into the patterns, relationships, rules, and decision-making processes of the AI and have a direct impact on the output.

Bias can be easy to spot, as in this AI-generated image, which shows a predominantly white class of 2023 at Western, but it can also be less visible. AI-generated text will reflect the dominant ideologies, discourses, language, values, and knowledge structures of the datasets the underlying models were trained on. For example, Large Language Models may be more likely to reproduce certain dominant forms of English, underrepresenting regional, cultural, racial, or class differences (D’Agostino, 2023).

The ethical issue is twofold: first, the information generated by generative AI is more likely to reflect dominant social identities, meaning that students who use AI will not be exposed to certain worldviews or perspectives, and some students may not feel that their experiences and identities are reflected in the output. Second, the use of generative AI to produce knowledge will continue to reinforce the dominance of these ideologies, values, and knowledge structures, contributing to further inequities in representation.

As an instructor, it’s important to be aware of this limitation of AI tools. If you ask your students to use these tools, it’s also important to teach them critical AI literacies so that they, too, can identify and reflect on these issues of representation, bias, and equity.

Some generative AI companies have taken steps to correct for biases in the training data by establishing content policies or other guardrails to prevent biased or discriminatory output. However, these guardrails are inconsistent and depend on the ethical standards of each generative AI company.

Ethical Case Study: Bias

You are testing a generative AI feedback tool in the hope that it will provide accurate, personalised feedback on student submissions. You decide to review all of the feedback the tool provides in detail and compare it to your own evaluations. You notice that many of the international students in the class received negative feedback on their writing style and coherence.

Large Language Models are trained on datasets biased towards dominant forms of English. The probability models that they use are designed to replicate these dominant patterns of language, which may result in any deviation from these “norms” being treated as errors or problems.

10

Because generative AI can produce plausible information at scale, it has exacerbated the potential harms of misinformation, disinformation, and mal-information in a time of information abundance. The generation or dissemination of fake, inaccurate, or misleading information through generative AI can be either unintentional or deliberate.

Misinformation refers to inaccurate or false information that is shared without intending to create harm. This could occur as a result of generative AI users not verifying generative AI outputs before sharing them.

Mal-information refers to information that may be rooted in truth or fact, but removed from context or distorted in ways that can mislead. When using generative AI, this might be the result of inaccurate outputs or hallucinations. Generative AI could also be used by malicious individuals to distort information in a way that is plausible.

Disinformation refers to inaccurate or false information that is shared with malicious intent, to mislead recipients or to manipulate decision-making or perspectives. Generative AI could be used to generate fake news stories or fake datasets, or otherwise be employed in attempts to deceive at large scale.

(Canadian Centre for Cyber Security, 2024; Jaidka et al., 2025)

One example of this is the use of text-to-image and text-to-video generative AI tools to produce visual media for the purpose of deception, whether malicious or not. A deepfake is a believable but fake video, audio recording, or image created with generative AI. Deepfakes often feature real people saying or doing something that they didn’t really say or do. Deepfakes do have potential benefits for the arts, social advocacy, education, and other purposes, but they raise ethical issues because permission to use the person’s likeness often has not been obtained and because they can spread misinformation or mislead people.

Ethical Case Study: Misinformation & Deception

One of your course assignments asks students to produce a piece of speculative fiction reflecting on the future if immediate action isn’t taken in response to climate change. One student creates a video of a news report showing the world in crisis. The video includes deepfakes of several world leaders justifying their lack of action over the past 10 years.

What ethical considerations are there around this use of AI?

Deepfakes present a few important ethical issues, particularly with regard to misrepresentation, intention to deceive, and politics and political agendas. In this case, the student wasn’t necessarily attempting to deceive viewers, but if you allow or encourage AI use in your courses, it’s important to help students understand the ethics of generative AI and its potential harms.

11

The field of AI is complex, and using AI raises many ethical considerations.

These ethical considerations include privacy, intellectual property and copyright, the environmental impact, the tendency of AI models to replicate existing social inequities and perpetuate bias, and the ability for AI to be used to spread misinformation.

Instructors should be aware of these ethical considerations and make sure that students are also aware of them if they choose to adopt AI tools in their courses.

The most important thing to remember is that generative AI tools, and the ways they are used, are emergent and evolving constantly and quickly. This means that we will need to stay informed about the changing landscape as our own practices and approaches to teaching evolve alongside it.

Making Connections

Based on your understanding of generative AI technologies now:

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=418#h5p-12

Awareness Reflection: Ethics

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=418#h5p-10

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=418#h5p-52

12

Ammachchi, N. (2025, August 19). Water-guzzling data centers spark outrage across Latin America. Nearshore Americas. https://nearshoreamericas.com/water-guzzling-data-centers-spark-outrage-across-latin-america/

Booker, M. D. (2025). Digital redlining: AI infrastructure and environmental racism in contemporary America. World Journal of Advanced Research and Reviews. https://doi.org/10.30574/wjarr.2025.27.1.2602

Canadian Centre for Cyber Security. (2024, May). How to identify misinformation, disinformation and malinformation (ITSAP.00.300). Communications Security Establishment. Retrieved from https://www.cyber.gc.ca/en/guidance/how-identify-misinformation-disinformation-and-malinformation-itsap00300

Chen, M. (2023, January 24). Artists and illustrators are suing three A.I. art generators for scraping and ‘collaging’ their work without consent. Artnet News. Retrieved August 17, 2025, from https://news.artnet.com/art-world/class-action-lawsuit-ai-generators-deviantart-midjourney-stable-diffusion-2246770

Cohen, A. (2024, May 23). AI is pushing the world toward an energy crisis. Forbes. https://www.forbes.com/sites/arielcohen/2024/05/23/ai-is-pushing-the-world-towards-an-energy-crisis/

Crownhart, C. (2024, October 28). AI will add to the e-waste problem. MIT Technology Review. https://www.technologyreview.com/2024/10/28/1106316/ai-e-waste/

D’Agostino, S. (2023, June 5). How AI tools both help and hinder equity. Inside Higher Ed. https://www.insidehighered.com/news/tech-innovation/artificial-intelligence/2023/06/05/how-ai-tools-both-help-and-hinder-equity

Earth.Org. (2023, April 28). The environmental impact of ChatGPT [Web article]. Earth.Org. https://earth.org/environmental-impact-chatgpt/

Hosseini, M., Gao, P., & Vivas-Valencia, C. (2024, December 15). A social-environmental impact perspective of generative artificial intelligence. Environmental Science and Ecotechnology, 23, Article 100520. https://doi.org/10.1016/j.ese.2024.100520

Ippolito, J. (n.d.). 9 takeaways about AI energy and water usage [Web page]. Learning With AI. Version 1.9. Retrieved August 17, 2025, from https://ai-impact-risk.com/ai_energy_water_impact.html

Jaidka, K., Chen, T., Chesterman, S., Hsu, W., Kan, M.-Y., Kankanhalli, M., Lee, M. L., Seres, G., Sim, T., Taeihagh, A., Tung, A., Xiao, X., & Yue, A. (2025). Misinformation, disinformation, and generative AI: Implications for perception and policy. Digital Government: Research and Practice, 6(1), Article 11. https://doi.org/10.1145/3689372

Joseph Saveri Law Firm, LLP. (n.d.). GitHub Copilot intellectual property litigation [Web page]. Joseph Saveri Law Firm, LLP. Retrieved August 17, 2025, from https://www.saverilawfirm.com/our-cases/github-copilot-intellectual-property-litigation

Langara College Educational Technology. (n.d.). Accessibility of AI interfaces [Web page]. Langara College. Retrieved August 17, 2025, from https://students.langara.ca/about-langara/academics/edtech/AI-Accessibility.html

Li, P., Yang, J., Islam, M. A., & Ren, S. (2023). Making AI Less “Thirsty”: Uncovering and Addressing the Secret Water Footprint of AI Models. arXiv. https://doi.org/10.48550/arXiv.2304.03271

Ontario. (n.d.). Freedom of Information and Protection of Privacy Act (R.S.O. 1990, c. F.31) [Statute]. e-Laws, Government of Ontario. Retrieved August 17, 2025, from https://www.ontario.ca/laws/statute/90f31

Pope, A. (2024, April 10). NYT v. OpenAI: The Times’s about-face [Blog post]. Harvard Law Review. https://harvardlawreview.org/blog/2024/04/nyt-v-openai-the-timess-about-face/

Saenko, K. (2023, May 25). A computer scientist breaks down generative AI’s hefty carbon footprint. Scientific American. Reprinted from The Conversation US. https://www.scientificamerican.com/article/a-computer-scientist-breaks-down-generative-ais-hefty-carbon-footprint/

Tomlinson, B., Black, R. W., Patterson, D. J., et al. (2024). The carbon emissions of writing and illustrating are lower for AI than for humans. Scientific Reports, 14, Article 3732. https://doi.org/10.1038/s41598-024-54271-x

Western Technology Services. (n.d.). Learn It. [Web page]. Western University. Retrieved August 17, 2025, from https://cybersmart.uwo.ca/for_western_community/learn_it/index.html

Western University. (2009, December 1). Policy 1.47 – Accessibility at Western [PDF]. Manual of Administrative Policies and Procedures. University Secretariat. Retrieved August 17, 2025, from https://www.uwo.ca/univsec/pdf/policies_procedures/section1/mapp147.pdf

Western University. (2018, April 26). Policy 7.16 – Intellectual Property [PDF]. Manual of Administrative Policies and Procedures. University Secretariat. Retrieved August 17, 2025, from https://www.uwo.ca/univsec/pdf/policies_procedures/section7/mapp716.pdf

Western University, Office of the Vice-President (Operations & Finance), Legal Counsel. (n.d.). FIPPA – Some basics for faculty and staff [Web page]. Western University. Retrieved August 17, 2025, from https://www.uwo.ca/vpfinance/legalcounsel/privacy/fippa.html#protection

World Intellectual Property Organization. (2024). Generative AI: Navigating intellectual property [Factsheet]. World Intellectual Property Organization. Retrieved August 17, 2025, from https://www.wipo.int/documents/d/frontier-technologies/docs-en-pdf-generative-ai-factsheet.pdf

III

13

Artificial intelligence is quickly changing how we engage in knowledge production and will have a significant impact on teaching and learning.

By the end of this section, you will be able to:

14

Our values are our fundamental beliefs about what is important. Our values motivate action and impact who we are and how we exist in the world. All individuals hold multiple values simultaneously, but attribute different levels of importance to each value (Schwartz, 2012). The values that an educator holds will impact their pedagogical practices and curricular decisions (e.g. what they teach, how they teach, how they interact with learners, etc.). In particular, your values will impact how you choose to use/not use generative AI tools in your teaching and course design and how you talk about generative AI with your learners. Similarly, the values that a learner holds will impact their decisions around if/how they use generative AI as part of their learning. Recognizing and naming your values may help you better navigate your beliefs and emotional response to generative AI.

Activity: Defining your values

1.) Write down all of the values that are important to you. Don’t try to rank them; just write down ideas until you can’t think of any more. You may also find it helpful to refer to the values list below.

Abundance, Acceptance, Accountability, Achievement, Adventure, Advocacy, Ambition, Appreciation, Attractiveness, Autonomy, Balance, Benevolence, Boldness, Brilliance, Calmness, Caring, Challenge, Charity, Cleverness, Community, Communication, Commitment, Compassion, Conformity, Connection, Cooperation, Collaboration, Consistency, Contribution, Creativity, Credibility, Curiosity, Decisiveness, Dedication, Dependability, Diversity, Empathy, Encouragement, Engagement, Enthusiasm, Ethics, Excellence, Expressiveness, Fairness, Family, Friendships, Flexibility, Freedom, Fun, Generosity, Grace, Gratitude, Growth, Happiness, Health, Honesty, Humility, Humour, Inclusiveness, Independence, Individuality, Innovation, Inspiration, Intelligence, Intuition, Joy, Kindness, Knowledge, Leadership, Learning, Life-long learning, Love, Loyalty, Mindfulness, Motivation, Optimism, Open-mindedness, Originality, Passion, Peace, Perfection, Playfulness, Performance, Personal development, Popularity, Power, Preparedness, Privacy, Proactive, Professionalism, Punctuality, Quality, Recognition, Relationships, Reliability, Resilience, Resourcefulness, Responsibility, Responsiveness, Risk taking, Safety, Security, Self-control, Selflessness, Service, Simplicity, Spirituality, Stability, Success, Teamwork, Thoughtfulness, Traditionalism, Trustworthiness, Understanding, Uniqueness, Usefulness, Versatility, Vision, Warmth, Wealth, Wellbeing, Wisdom, Zeal

2.) From your list, circle your top 10 values.

3.) Rank your top 10 values in order of importance.

(Adapted from University of Edinburgh’s Values Toolkit)

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=496#h5p-58

Although values do affect decisions and actions, the link between a value and an action or decision is not always direct or obvious. Many factors may influence decision-making, including different understandings of the value proposition of a decision. For example, one instructor who values inclusivity may encourage the use of generative AI technologies to help support student access needs, reduce language barriers, or provide additional scaffolds; another instructor who values inclusivity may discourage or ban the use of generative AI because not all students may have the resources to pay for a subscription, which can result in unequal access.

Values friction (conflicting values) may also affect decision-making. Values friction can occur within an individual, between an individual and others, or between an individual and an organization. When one individual holds multiple values, those values can come into conflict with each other (e.g. honesty and compassion; individualism and belonging). Typically, an individual places different levels of importance on different values, which affects which values have the greatest influence on decision-making or action. However, individuals may also be constrained by the values friction between their own values and their peers’ values or institutional values and norms (Jensen, Schott, & Steen, 2021).

Consider the following scenarios and how values may impact decision-making.

Values Case Study: Assignment Feedback

You are teaching a second-year global health course that uses case-based assessment. Each week, individual learners need to analyse a different global health scenario and submit a case analysis. In past years, you have always provided detailed feedback to each learner a few days before their next submission is due, to allow them to consider the feedback and make improvements. This year, your class size has increased significantly. Your colleague has shared how they are using generative AI to help with feedback generation and suggested you do the same to expedite your evaluation.

What values might impact your decision in this scenario?

If your top values include punctuality or reliability, you might decide to use generative AI to provide feedback to ensure that you are able to provide feedback in the expected timeline and honour the commitment that you’ve made to students.

If your top values include authenticity or connection, you might decide to continue to generate feedback manually without the use of generative AI, even if that means a delay in returning the assignments to learners.

If your top values include both reliability and authenticity, you may experience values friction and struggle to make a decision without feeling discomfort.

Values Case Study: Detecting Generative AI

You are a TA in an upper-year history course. Students need to complete a series of document analyses of primary sources. You have received a number of submissions lately that seem very structurally and grammatically strong, but that lack good analysis. You have a feeling that the students may be using generative AI to complete the assignments. Other TAs in the course have echoed these concerns and shared that they have all adopted an AI-detection tool to help flag AI-generated submissions. They’ve encouraged you to do the same.

What values might impact your decision in this scenario?

If your top values include trust, you may feel uncomfortable using an AI-detection tool to identify academic misconduct since it impacts the educator-student relationship and assumes dishonesty.

If your top values include conformity or fairness, you might decide to use the AI-detection tool to align with the group consensus and to ensure that all students in the course receive a similar experience.

Values Case Study: Zoom AI Companion

You teach an Introduction to Environmental Science course online over Zoom. Recently, one of your students asked to use Zoom’s embedded AI tool, which records the meeting, generates a transcript, and produces a summary with key concepts. The student says that this will help them take better notes and support their learning.

What values might impact your decision in this scenario?

If your top values include accessibility or inclusiveness, you might decide to allow the use of the AI companion tool, or even use it yourself and share the summaries with the whole class.

If your top values include privacy, you might be reluctant to use the AI companion because it records student interactions. You may also have concerns around how the data is stored or if it might be used for purposes beyond the course, such as training.

Values Case Study: Image Generation

You are teaching an introduction to anatomy class. Your course materials include a lot of visuals, which you have typically taken from standard textbooks and online repositories. However, most of the images available to you portray light-skinned, able-bodied males. You are considering using generative AI tools to generate more diverse images for your course materials.

What values might impact your decision in this scenario?

If your top values include inclusiveness, you might move ahead with using generative AI tools to generate more diverse images. However, you may also have concerns about the potential for biased or discriminatory representation in AI-generated images.

If your top values include sustainability, you might also have concerns about the potential energy costs of using generative AI to generate images compared to the costs of finding existing images.

Values are intrinsically linked to affect or emotion (Schwartz, 2012). When our values are threatened or questioned, it can activate a negative emotional response. Similarly, when our values are realized, it can activate a positive response. For more information on how to navigate the affective nature of generative AI, see the section on Emotional Intelligence.

Awareness Reflection: Your Pedagogical Values

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=496#h5p-29

15

One of the most pressing issues generative AI raises for education is how it will reshape assessment and potentially redefine the meaning of original work and plagiarism.

The International Center for Academic Integrity defines Academic Integrity as a mutual commitment to 6 fundamental values: honesty, trust, fairness, respect, responsibility, and courage (ICAI, 2021).

If you would like to explore further, the full description of each of the fundamental values can be found here.

The student, the instructor, and the institution all play an important role in creating a culture of Academic Integrity.

The institution is responsible for

The instructor is responsible for

The student is responsible for decisions and actions they take regarding academic integrity.

Awareness Reflection: An Instructor’s Role

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=812#h5p-45

Instructors have several choices when approaching generative AI through the lens of academic integrity. Should they:

Ignoring generative AI and continuing on as usual may seem like the easiest option for instructors. However, doing so fails to address several fundamental values of Academic Integrity: trust, fairness, responsibility, and courage.

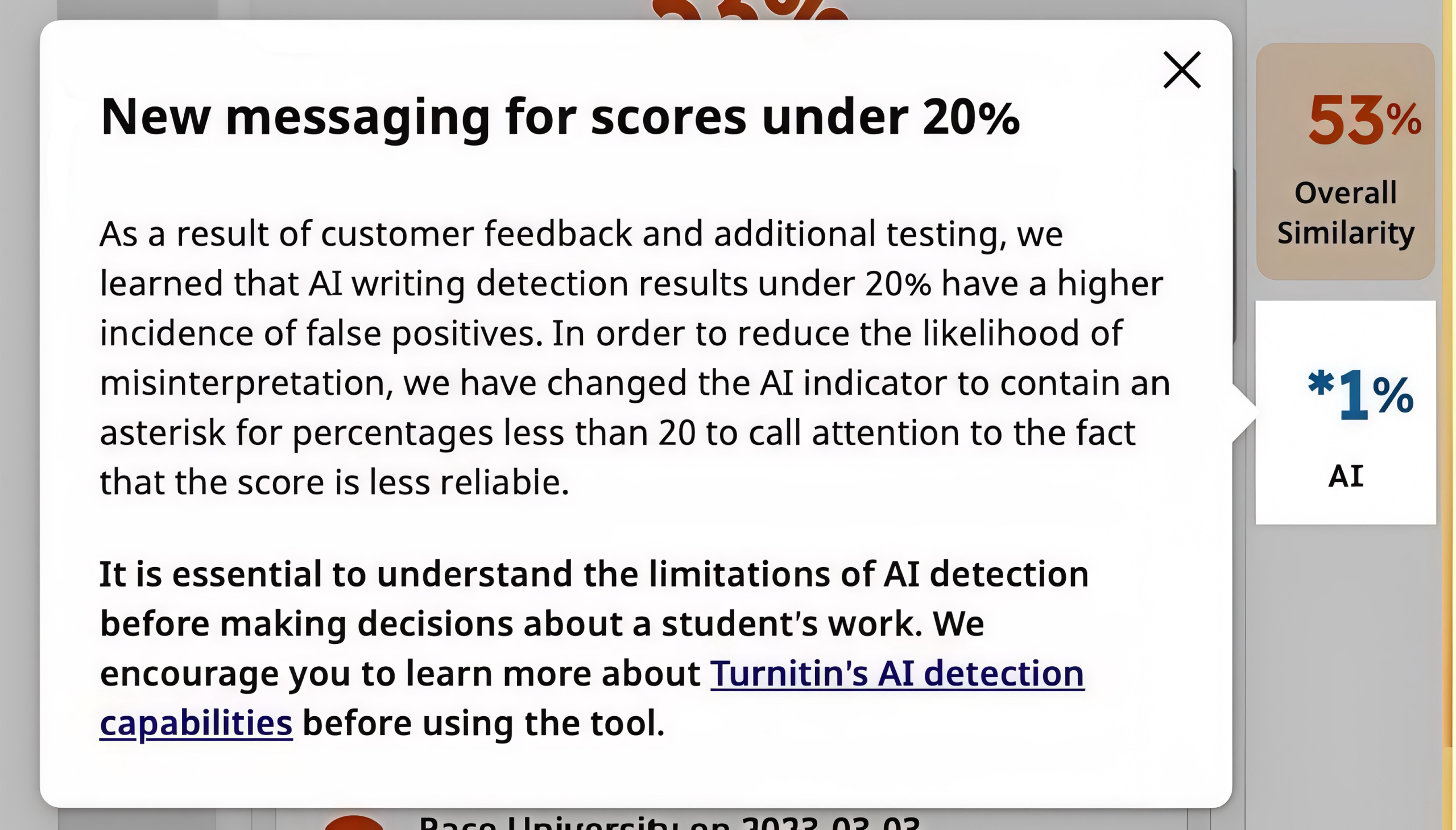

The absence of an AI policy or practices will not prevent AI use. You will still encounter student submissions that you suspect (or know) were generated with the assistance of AI, as the image illustrates. When this happens, you will need to decide how to address it. Without clear expectations around AI use, this process lacks transparency (a core characteristic of trust) and makes it harder to apply policies equitably (fairness).

Responsible faculty acknowledge the possibility of academic misconduct and create and enforce clear policies around it. Academic Integrity also requires a willingness to take risks and deal with discomfort (courage).

It’s clear that institutions and instructors need to take some action in response to generative AI. The immediate reaction of many institutions and instructors may be to implement a blanket ban on AI use.

However, completely prohibiting the use of AI could also be seen as failing to address multiple fundamental values of Academic Integrity. It is also unrealistic, as generative AI tools are embedded in many other applications (such as Microsoft Word and web browsers).

This approach lacks respect, responsibility, trust, courage, honesty, and fairness.

A blanket ban will contribute to a culture of mistrust, because it can appear to assume that students are most likely to use generative AI tools to commit scholastic offences. It also ignores the opportunities generative AI offers to support and deepen learning.

Some students will absolutely use generative AI in inappropriate ways. However, many other students may benefit from it as a tool to enhance and improve learning and provide supports for diverse learners.

Students will also encounter AI tools in their future careers and studies, so by choosing not to consider AI in our teaching, fields, and course designs, we may be failing to prepare students for the future.

Our goal as instructors is to demonstrate respect for the motivations and goals of all learners while still being able to hold individuals responsible for their actions.

A blanket ban of generative AI lacks trust in students’ ability to use generative AI responsibly and denies them the opportunity to develop important AI literacies. It also lacks the courage to explore if and how AI can enhance our disciplines and our teaching and learning practices. Of course, there may be times when it is not appropriate for students to use generative AI tools, particularly if it interferes with students demonstrating the learning outcomes of the course. The key thing to consider is whether it is necessary to prohibit tools and, if it is, whether you can clearly explain why to students.

Detecting generative AI

Another limitation of blanket bans is that they require more time to be spent detecting student use of generative AI. Yet there is currently no reliable way to identify AI-generated content: current detection tools are unreliable and biased.

For example, one study found that AI detection tools flagged more than half of the submitted essays from non-native English speakers as AI-generated (Liang et al., 2023).

Because of these limitations, the output of an AI detection tool does not in itself provide true evidence of academic misconduct (thus lacking honesty), and these tools will not treat all students’ work equitably (thus lacking fairness).

Instructors should explicitly acknowledge the existence of generative AI and the potential impact on teaching and learning activities in their course policies, in conversation with students, and in the design of learning activities and assessment.

By establishing clear guidelines, you establish a relationship of trust with your students, you have a clear policy that can be applied equitably to all students (fairness), you create clear boundaries and expectations (responsibility), and you provide students with the skills to use AI honestly.

See the following section for instructions on how to write an AI Policy.

By incorporating AI into your curriculum, you provide students with an opportunity to develop important skills and knowledge related to the ethical use of AI. This takes courage, as it may create discomfort, but it also fosters responsibility and respect.

By designing assignments deliberately to resist the use of AI or to embrace the use of AI, you are fostering mutual trust and respect. You are also exhibiting and encouraging courage, as AI-enhanced assessments may require risk-taking and discomfort. This also gives learners a chance to act responsibly with regard to AI use.

See the section on pedagogy for more information on assessment design.

Explore More

Below are some optional activities that will enable you to develop some tools and strategies related to generative AI and academic integrity in your courses.

Review this compilation of Classroom Policies for AI Generative Tools.

Choose 1 or 2 policies that stand out to you. Reflect on whether they adhere to the 6 values of academic integrity.

Search for ways that AI is being used in your field or discipline. How will your students encounter AI in their future professions? What skills might they require to successfully engage with these tools and practices?

These may be good starting points for introducing students more broadly to the applications of AI.

16

A clear and detailed generative AI course syllabus statement is essential for setting explicit boundaries and establishing expectations of AI-use in your courses. Your course syllabus statement is a key tool for establishing the 6 core values of academic integrity in your courses.

By acknowledging generative AI and considering its possible integration into your teaching practices, you demonstrate courage. By clearly stating the scope of acceptable use, you create mutual responsibility and trust. By providing detailed rationale for the decisions you’ve made about generative AI, and by acknowledging privacy, security, and ethical considerations, you establish honesty and respect. By applying policies equitably, you create fairness.

Your syllabus statement should be tailored to your course’s specific needs, and you will likely find that you will set different boundaries for different courses as you identify the educational value of generative AI in each context.

To create a comprehensive syllabus statement, consider including this information:

Read the following example syllabus statement. Does this clearly fulfil all the suggested parts of a complete statement?

In this course, we recognize the potential benefits of generative AI to support your learning; however, there are some instances where the use of generative AI will detract from learning of key knowledge and skills, particularly skills where it’s important for you to accomplish a task unassisted. Each assignment will clearly outline the expectations and restrictions around generative AI use for that assignment. There are some learning activities and assignments where you are encouraged to use generative AI and there are others where AI use is not allowed. However, there is no requirement in this course to use generative AI for the completion of any task. Any time you do use generative AI, you will be expected to properly cite its use, similar to the use of any other resources. You will also be responsible for addressing any inherent biases, inaccuracies, or other issues in the output. Violating the acceptable use of generative AI stated in your assignment requirements may result in academic penalties as laid out in Western University’s academic integrity and scholastic offence policies.

| Component | Example text |
| --- | --- |
| Introduction | In this course, we recognize the potential benefits of generative AI to support your learning. |
| Rationale | However, there are some instances where the use of generative AI will detract from learning of key knowledge and skills, particularly skills where it’s important for you to accomplish a task unassisted. |
| Scope of Use | Each assignment will clearly outline the expectations and restrictions around generative AI use for that assignment. There are some learning activities and assignments where you are encouraged to use generative AI and there are others where AI use is not allowed. |
| Provide Alternatives | However, there is no requirement in this course to use generative AI for the completion of any task. |
| Student Responsibilities | Any time you do use generative AI, you will be expected to properly cite its use, similar to the use of any other resources. You will also be responsible for addressing any inherent biases, inaccuracies, or other issues in the output. |
| Repercussions | Violating the acceptable use of generative AI stated in your assignment requirements may result in academic penalties as laid out in Western University’s academic integrity and scholastic offence policies. |

Read the following example syllabus statement. Does this clearly fulfil all the suggested parts of a complete statement?

Generative AI is a useful tool for accomplishing many tasks; however, this course requires students to be able to understand and apply key mathematical concepts unassisted. You may use generative AI as a learning and study tool, but the use of generative AI for any course assessments, including homework, quizzes, and exams (or any other tasks that contribute to your course grade), is strictly prohibited. These assessments are designed to evaluate your individual understanding of the course material and your ability to engage in mathematical reasoning independently. If the use of generative AI is detected, you will fail the assignment and potentially face greater academic penalties.

Introduction | Generative AI is a useful tool for accomplishing many tasks;

Rationale | These assessments are designed to evaluate your individual understanding of the course material and your ability to engage in mathematical reasoning independently.

Scope of Use | however, this course requires students to be able to understand and apply key mathematical concepts unassisted. You may use generative AI as a learning and study tool, but the use of generative AI for any course assessments, including homework, quizzes, and exams (or any other tasks that contribute to your course grade), is strictly prohibited.

Provide Alternatives | N/A

Student Responsibilities | N/A

Repercussions | If the use of generative AI is detected, you will fail the assignment and potentially face greater academic penalties.

Generative AI will impact learning and assessment, and it is vital that instructors acknowledge and address this emerging technology in their teaching practices and course policies.

In this section, we have outlined an approach to drafting generative AI course syllabus statements that reflect the key values of Academic Integrity: Honesty, Trust, Respect, Responsibility, Fairness, and Courage.

Other institutions and instructors have taken different approaches to drafting generative AI policies, which may also be helpful as you craft your own.


Regardless of what structure you follow, it is important that your policy clearly states the boundaries of acceptable use, provides a rationale for this decision, and articulates any other expectations around whether and how students can engage with generative AI in your courses.


Awareness Reflection: Values

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=610#h5p-27

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=610#h5p-54


IV


Generative AI has the potential to significantly transform the way we produce knowledge, teach, learn and collaborate. The disruption that generative AI presents to existing learning environments and pedagogical practices can evoke strong emotional reactions. Navigating the impact of generative AI on teaching and learning requires Emotional Intelligence (EI). As instructors, we need to be able to recognize, understand, and manage our own emotional reactions to generative AI, and to recognize, understand, and potentially influence the emotional reactions of others, including both students and peers.

By the end of this section, you will be able to:


In this section, we will consider 4 key components of emotional intelligence (adapted from Goleman, 2020):

The following section provides multiple case studies exploring different ways in which discussion and use of generative AI may evoke emotional responses and require Emotional Intelligence. For each case study, you will be asked to reflect on the 4 key components of EI.

Emotional Case Study: Academic Misconduct

You are teaching a first-year writing course and are marking the first assignment. You notice striking similarities in the structure and phrasing of the submissions, an unusual lack of grammatical and semantic errors, and a few tell-tale words and terms (e.g. “It’s important to note that…”, “Both sides have their merits and challenges.”) that make you suspect AI was used to generate the submissions. After hours of marking, you come across an essay containing the following text: “I don’t have personal experiences since I am an AI. However, I can tailor content to align with specific experiences or perspectives if you provide more details or context to guide the narrative.”

What are the affective considerations in this scenario?

Your initial reaction may be frustration or anger, which may be compounded by the work you’ve already put into providing feedback on assignments. Before acting or responding, it might be a good idea to step away and consider what factors may have led to the misuse of generative AI in this way. Are students confused about the assignment requirements? Do you have a clear policy around acceptable use of AI in your class?

Consider how you will respond to this individual student and the class as a whole around your concerns about generative AI use.

Emotional Case Study: Using AI for Marking

You are teaching a third-year psychology course. Students are required to submit weekly reflections. You have developed a generative AI tool to assess the reflections based on a rubric with detailed criteria. You are transparent about the use of this tool and students are aware that you are using generative AI for marking. In your midterm evaluations, many students have provided negative feedback about the use of generative AI for this purpose, with some comments suggesting that you aren’t doing your job as an instructor.

What are the affective considerations in this scenario?

You may feel upset or unfairly judged by the student feedback in your midterm evaluations. First, consider your motivations for using generative AI in this way. Does this use align with your values (see the section on values)? Next, consider what emotions students might feel about this use of AI in your courses. How have they been messaged about your reasons for using AI in this way? Have you provided a way for them to otherwise voice their concerns?

Emotional Case Study: AI Tutors

Your department has adopted a new generative AI tool called TutorAI to help support students who are struggling academically. The tool is designed to provide personalised support to learners by providing knowledge checking questions, assessing responses, and providing resources to help learners address knowledge gaps. All students are able to access the tool, but students who are identified as needing remedial support are required to use this tool.

What are the affective considerations in this scenario?

Use of generative AI tools in this way may introduce uncertainty or discomfort, particularly if you feel as though part of your job is being replaced by technology. Consider your professional identity as an educator: is providing personalised support an important part of your practice? If so, how can you integrate this tool into your practices in a way that aligns with your values (see the section on values)? Also consider how students may feel about the use of this tool. Are there particular student populations who may experience unique challenges in using an AI support tool? How do you identify and respond to these needs?

Emotional Case Study: AI Refusal

You are teaching a graduate research skills seminar, supporting students through the research process. You have asked students to use generative AI to support their literature review for their research proposal. You have provided clear guidelines on how to use it and how to document its use. One of the learning outcomes that you’re hoping to achieve is for students to be able to critically evaluate generative AI tools and to learn how to use them to support knowledge production. One of your students tells you that they believe that the use of generative AI is completely unethical and refuses to use the tool for this assignment.

What are the affective considerations in this scenario?

Choices around whether and how to use generative AI technologies are very personal and tied into our individual ethics and values. You may feel conflicted or judged when another person’s use or views don’t align with your own. Part of emotional intelligence is considering different perspectives: what personal beliefs or values might lead to a student’s decision not to use generative AI? How can you communicate your rationale for the use of this tool? What alternatives can you provide the learner to allow them to achieve or demonstrate the same learning?

If you do integrate generative AI technologies in your teaching, learning activities, or assessments, you will also be introducing a need for learners to increase their emotional intelligence with regard to how these tools are being used. The following case provides an example of EI considerations from a student perspective.

Emotional Case Study: An AI Teammate

You are teaching a fourth-year business course where students work on weekly business case studies in groups. The groups are established at the beginning of the semester and remain the same throughout the semester. This year, you’ve implemented a new requirement that all groups must create an AI team member. They will assign the AI team member a persona and a role on the team that complements the strengths of the human group members. Groups are required to engage with their AI team member on all case studies and document how and when the generative AI tool is used. In the middle of the term, a student comes to you with significant concerns about the use of generative AI in this way. They believe that their team is assigning too much authority to the contributions of the AI team member, constantly deferring to its recommendations and ignoring the ideas of the human members of the team. Because of this, it seems that most of the group members have disengaged from the group work.

What are the affective considerations in this scenario?

Students may feel unvalued or less confident in their contributions to a group where the input from a generative AI tool is being privileged. Consider how you could support the development of group dynamics, including with the AI team member, and how you can foster critical reflection in your students so that they evaluate generative AI outputs more critically.


It’s important to be aware of our own emotional responses to generative AI and understand how our emotions may impact our decisions around generative AI use. It’s also important to recognize that others (peers and learners) may have beliefs about generative AI that conflict with our own and lead them to different practices or uses.

Awareness Reflection: Affect

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=440#h5p-15

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=440#h5p-53


V


Using generative AI technologies requires new skills and the application of old skills to new contexts. In order to effectively engage with generative AI tools, it’s necessary to understand how to craft effective instructions and how to critically evaluate the generated output.

By the end of this section, you will be able to:


Many AI interfaces allow users to communicate using natural language, rather than programming or machine language. This makes it easier for general audiences to use generative AI technologies; however, as with human-to-human communication, it’s important to use clear and specific language to get the desired results. The process of designing effective prompts has been referred to as prompt engineering.

Effective prompt writing can help you more quickly achieve the results that you’re seeking, minimizing the number of queries that you need to make.

The following tips can help you write effective prompts to get the results you’re looking for:

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/aihighereducation/?p=463#h5p-39

For more tips, check out the Prompt Engineering Guide or the Prompt Library from Ethan Mollick.

Activity: Prompting AI

Using the generative AI tool of your choice, try out some of the techniques shared above to accomplish the tasks below:

As AI tools become increasingly sophisticated, writing good prompts is often not enough. Successfully using generative AI tools requires context engineering. Context engineering means building a system that uses the right information and tools to get the desired result (Schmid, 2025). This has become increasingly important with the development of Retrieval Augmented Generation (RAG), or the ability of generative AI tools to retrieve information in real time and incorporate it into their responses; and with the shift towards agentic AI, or AI systems with the ability to act autonomously, with limited human supervision, to complete a goal (Stryker, n.d.).
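For readers curious about what happens behind the scenes, the Python snippet below is a minimal, hypothetical sketch of the retrieval step in RAG. It picks the course notes that share the most words with a request and packages them, together with system instructions and the user’s prompt, into a single block of context for a model. The function names, the sample notes, and the word-overlap scoring are illustrative assumptions only; real RAG systems typically rank documents using embeddings and vector search, and no actual AI model is called here.

```python
# Hypothetical sketch of RAG-style context assembly. Real systems usually rank
# documents with embeddings and then send the assembled context to a model;
# here, simple word overlap stands in for retrieval and no model is called.


def tokenize(text: str) -> set[str]:
    """Lowercase text and return its set of words, ignoring end punctuation."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,  # highest overlap first; ties keep their original order
    )
    return ranked[:k]


def build_context(system_prompt: str, query: str,
                  documents: list[str], k: int = 2) -> str:
    """Combine system instructions, retrieved sources, and the user's prompt."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        f"SYSTEM: {system_prompt}\n"
        f"RETRIEVED SOURCES:\n{sources}\n"
        f"USER: {query}"
    )


if __name__ == "__main__":
    # Hypothetical course notes standing in for an institution's documents.
    course_notes = [
        "Week 3 covers operant conditioning and reinforcement schedules.",
        "The midterm exam is held in week 7.",
        "Week 4 covers classical conditioning and Pavlov's experiments.",
    ]
    print(build_context(
        "You are a teaching assistant. Use only the retrieved sources.",
        "Plan a lecture on classical conditioning.",
        course_notes,
        k=1,
    ))
```

Running the script prints the assembled context with only the Week 4 note retrieved, because it shares two words (“classical” and “conditioning”) with the request. The scoring is deliberately naive; the point is that what a model actually receives is a combination of hidden instructions, retrieved material, and your prompt, which is why context engineering matters.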

Prompting is still an important aspect of context engineering, but there are multiple other contextual elements to consider, including:

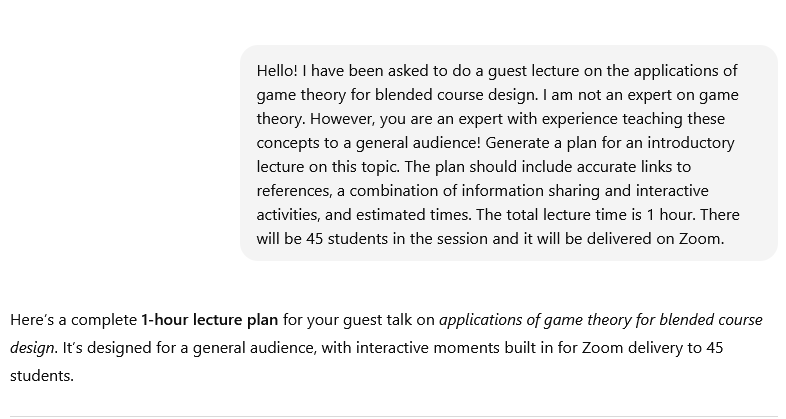

For a deeper understanding of how each of these elements impacts the generated output, take a look at my conversation below asking ChatGPT 5 to help generate a lecture plan. I ask ChatGPT to provide a breakdown of the context. You can view the full conversation here.

I’m running inside a system with hidden system prompts that set guardrails (safety rules, style constraints, refusal policies, and how I should use tools).

Limitations include:

No violent/gory content.

No impersonation or harmful instructions.