A-

Abstract– the short paragraph at the beginning of an article that summarizes its main point (3.1)

Accuracy– the extent to which one’s coding procedures correspond to some preexisting standard (14.5)

Acquiescence bias– when respondents say yes to whatever the researcher asks (9.5)

Action research– research that is conducted for the purpose of creating some form of social change in collaboration with stakeholders (15.3)

Aggregate matching– when the comparison group is determined to be similar to the experimental group along important variables (12.2)

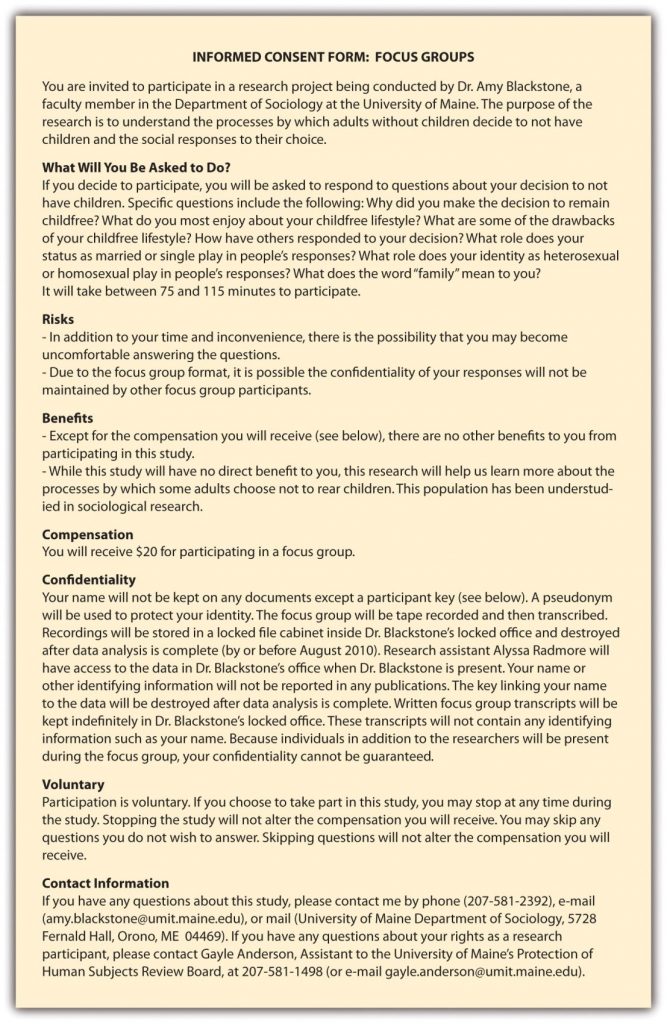

Anonymity– when the identity of research participants is not known to researchers (5.2)

Anonymized data– data that does not contain personally identifying information (14.4)

Attributes– the characteristics that make up a variable (9.5)

Authenticity– the degree to which researchers capture the multiple perspectives and values of participants in their study and foster change across participants and systems during their analysis (9.4)

Authority– learning by listening to what people in authority say is true (1.1)

B-

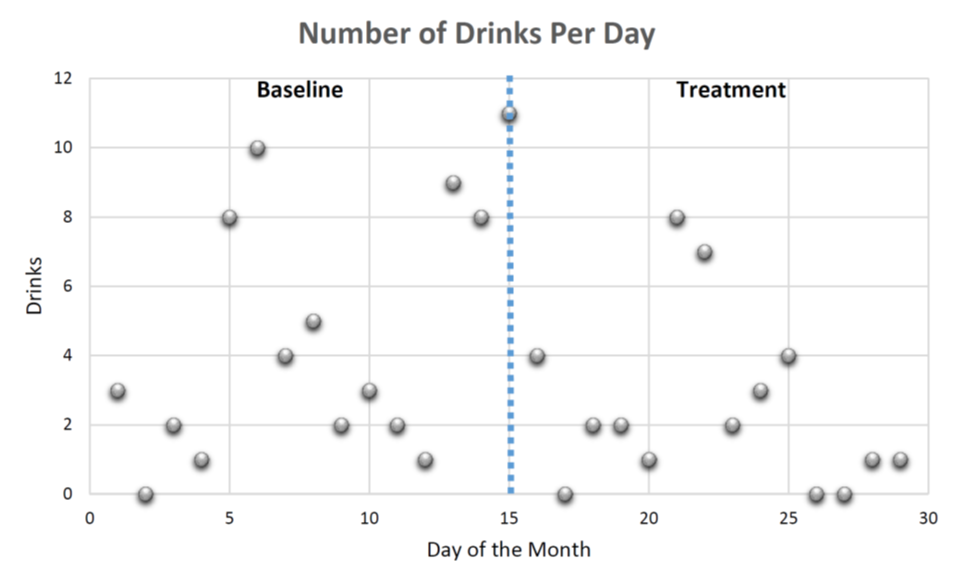

Baseline stage– the period of time before the intervention starts (15.2)

Bias– in sampling, when the elements selected for inclusion in a study do not represent the larger population from which they were drawn due to sampling method or thought processes of the researcher (10.5)

Bivariate analysis– quantitative analysis that examines relationships between two variables (12.4)

C-

Categorical measures– measures with attributes that are categories (9.5)

Causality– the idea that one event, behavior, or belief will result in the occurrence of another, subsequent event, behavior, or belief (7.2)

Classic experimental design– a type of experimental design that uses random assignment, an experimental and control group, as well as pre- and posttesting (12.1)

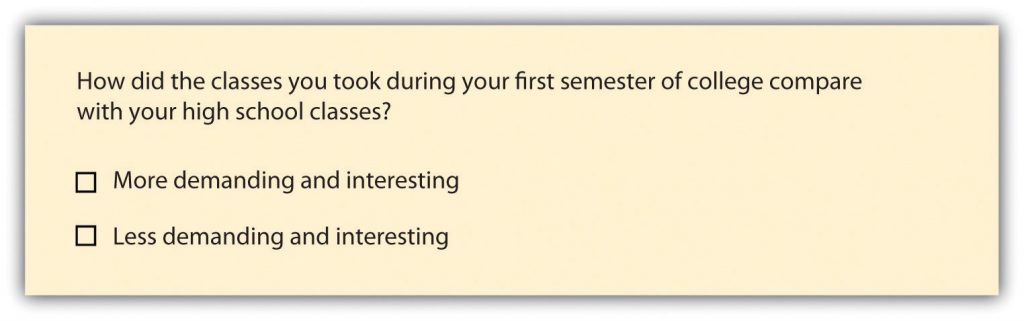

Closed-ended questions– questions for which the researcher offers response options (11.4)

Cluster sampling– a sampling approach that begins by sampling groups (or clusters) of population elements and then selects elements from within those groups (10.3)
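A minimal Python sketch of two-stage cluster sampling, assuming a hypothetical population stored as a dictionary that maps each cluster (a school) to its members (students). The function name `cluster_sample` and all of the data are illustrative only.

```python
import random

# Hypothetical population organized into clusters: school -> students
clusters = {
    "school_a": ["a1", "a2", "a3", "a4"],
    "school_b": ["b1", "b2", "b3"],
    "school_c": ["c1", "c2", "c3", "c4", "c5"],
    "school_d": ["d1", "d2"],
}

def cluster_sample(clusters, n_clusters, n_per_cluster, rng=random):
    """Stage 1: randomly pick clusters. Stage 2: randomly pick elements within each chosen cluster."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {
        name: rng.sample(clusters[name], min(n_per_cluster, len(clusters[name])))
        for name in chosen
    }

sample = cluster_sample(clusters, n_clusters=2, n_per_cluster=2, rng=random.Random(3))
print(sample)
```

Because every cluster here has the same chance of selection, members of small clusters end up more likely to be chosen overall; probability proportionate to size is the adjustment for that.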

Code– a shorthand representation of some more complex set of issues or ideas (13.5)

Code sheet– the instrument an unobtrusive researcher uses to record observations (14.3)

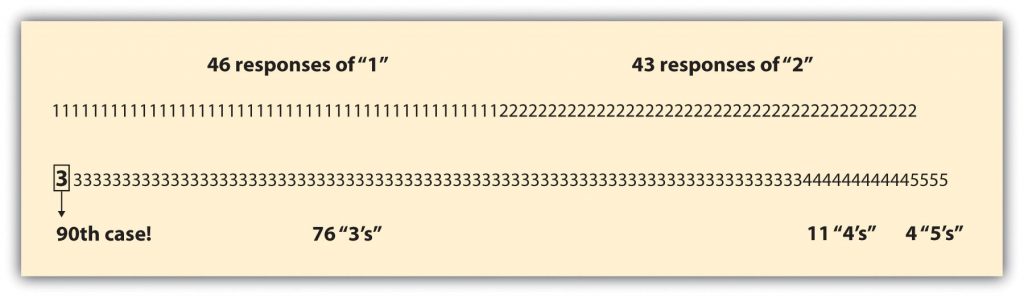

Codebook– a document that outlines how a survey researcher has translated her data from words into numbers (12.4)

Coding– identifying themes across qualitative data by reading transcripts (13.5)

Cognitive biases– predictable flaws in thinking (1.1)

Cohort survey– describes how people with a defining characteristic change over time (11.3)

Comparable groups– groups that are similar across factors important for the study (12.3)

Comparison group– a group in quasi-experimental designs that receives “treatment as usual” instead of no treatment (12.1)

Concept– notion or image that we conjure up when we think of some cluster of related observations or ideas (9.2)

Conceptualization– writing out clear, concise definitions for key concepts, particularly in quantitative research (9.2)

Concurrent validity– if a measure is able to predict outcomes from an established measure given at the same time (9.4)

Confidence interval– a range of values in which the true value is likely to be (3.1)
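As an illustration, a 95% confidence interval for a mean can be approximated as the sample mean plus or minus 1.96 standard errors. The sketch below assumes hypothetical scores and uses this normal approximation; for a sample this small, a t critical value (somewhat larger than 1.96) is the more appropriate choice. The function name `ci_95` is illustrative.

```python
from math import sqrt
from statistics import mean, stdev

def ci_95(values):
    """Approximate 95% confidence interval for a mean (normal critical value 1.96)."""
    m = mean(values)
    standard_error = stdev(values) / sqrt(len(values))
    margin = 1.96 * standard_error
    return m - margin, m + margin

# Hypothetical well-being scores from a sample of 8 respondents
scores = [22, 25, 19, 30, 27, 24, 21, 28]
low, high = ci_95(scores)
print(f"95% CI: {low:.2f} to {high:.2f}")
```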

Confidentiality– when identifying information about research participants is known to the researchers but is not divulged to anyone else (5.2)

Confirmability– the degree to which the results reported are linked to the data obtained from participants (9.4)

Confirmation bias– observing and analyzing information in a way that confirms what you already think is true (1.1)

Constructs– concepts that are not directly observable but can be defined based on observable characteristics (9.1)

Content analysis– a type of unobtrusive research that involves the study of texts and their meaning (14.3)

Content validity– if the measure includes all of the possible meanings of the concept (9.4)

Contingency table– shows how variation on one variable may be contingent on variation on another (12.4)
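A minimal sketch of building a contingency table by counting each combination of attributes, assuming hypothetical paired responses on two variables (employment status and housing status). The helper `contingency_table` is illustrative, not a standard library function.

```python
from collections import Counter

# Hypothetical paired responses: (employment status, housing status) for each respondent
responses = [
    ("employed", "housed"), ("employed", "housed"), ("unemployed", "unhoused"),
    ("unemployed", "housed"), ("employed", "unhoused"), ("unemployed", "unhoused"),
]

def contingency_table(pairs):
    """Count how often each combination of the two variables' attributes occurs."""
    counts = Counter(pairs)
    rows = sorted({r for r, _ in pairs})
    cols = sorted({c for _, c in pairs})
    return rows, cols, {(r, c): counts[(r, c)] for r in rows for c in cols}

rows, cols, table = contingency_table(responses)
print("".ljust(12) + "".join(c.ljust(10) for c in cols))
for r in rows:
    print(r.ljust(12) + "".join(str(table[(r, c)]).ljust(10) for c in cols))
```

Reading across a row shows whether the distribution of housing status shifts with employment status, which is the contingency the entry describes.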

Continuous measures– measures with attributes that are numbers (9.5)

Control group– the group in an experiment that does not receive the intervention (12.1)

Control variables– potential “third variable” effects that are controlled for mathematically during data analysis to isolate the relationship between the independent and dependent variables (7.2)

Convenience sample– when a researcher gathers data from whatever cases happen to be convenient (10.2)

Convergent validity– if a measure is conceptually similar to an existing measure of the same concept (9.4)

Covariation– the degree to which two variables vary together (7.2)
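One common way to quantify covariation is the covariance and its standardized form, the Pearson correlation coefficient. A minimal sketch, assuming hypothetical data on tutoring hours and test scores; the function names are illustrative.

```python
from math import sqrt

def covariance(x, y):
    """Sample covariance: how x and y move together around their means."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)

def pearson_r(x, y):
    """Covariance rescaled to range from -1 to 1."""
    return covariance(x, y) / sqrt(covariance(x, x) * covariance(y, y))

# Hypothetical data: hours of tutoring and test score for five students
hours = [1, 2, 3, 4, 5]
scores = [60, 65, 70, 72, 80]
print(covariance(hours, scores), pearson_r(hours, scores))
```

A strong positive value shows only that the two variables vary together; on its own it does not establish temporality, plausibility, or the absence of a spurious relationship.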

Credibility– the degree to which the results are accurate and viewed as important and believable by participants (9.4)

Critical paradigm– a paradigm in social science research focused on power, inequality, and social change (6.2)

Cross-sectional surveys– surveys that are administered at just one point in time (11.3)

D-

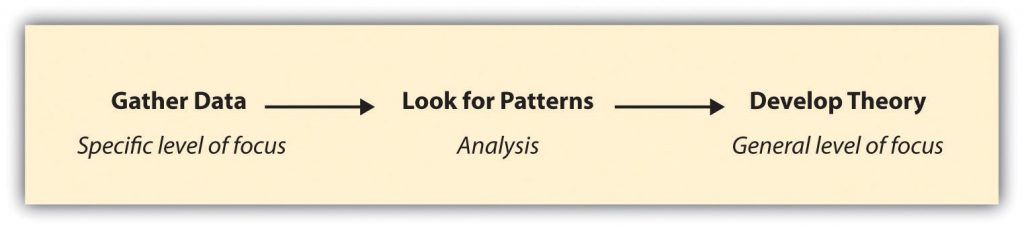

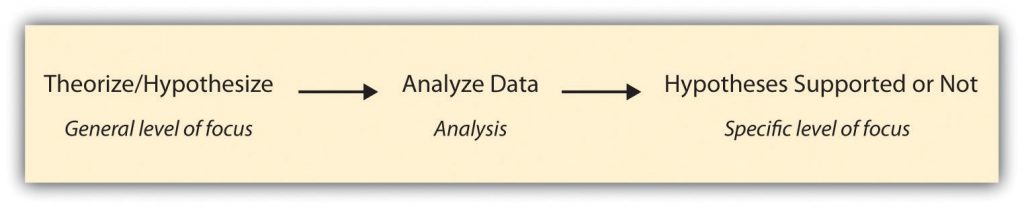

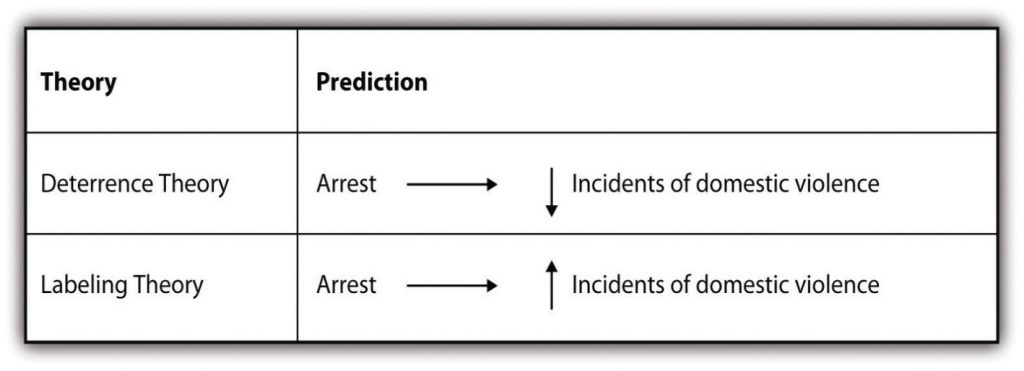

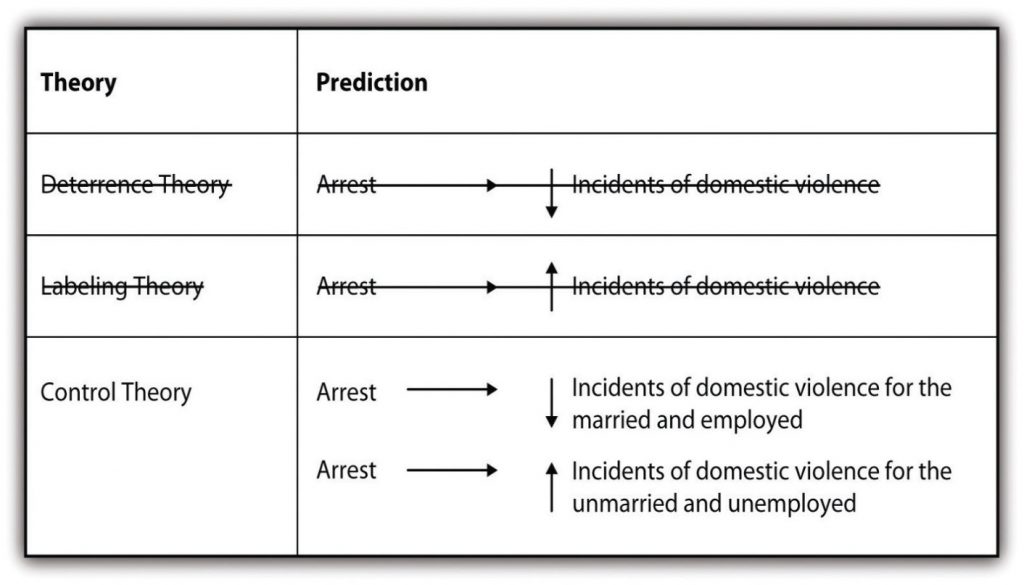

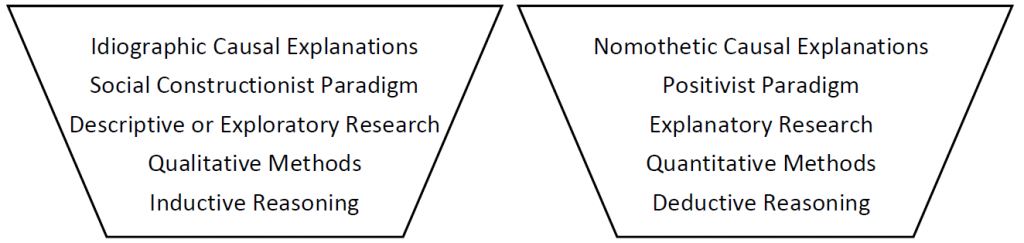

Deductive approach– when a researcher studies what others have done, reads existing theories of whatever phenomenon she is studying, and then tests hypotheses that emerge from those theories (6.3)

Dependability– ensures that proper qualitative procedures were followed during the research process and that any changes that emerged during the research process are accounted for, justified, and described in the final report (9.4)

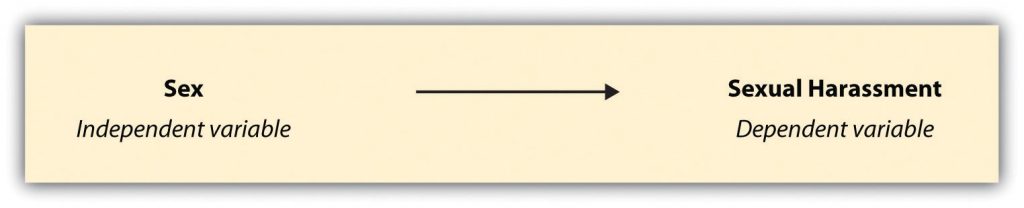

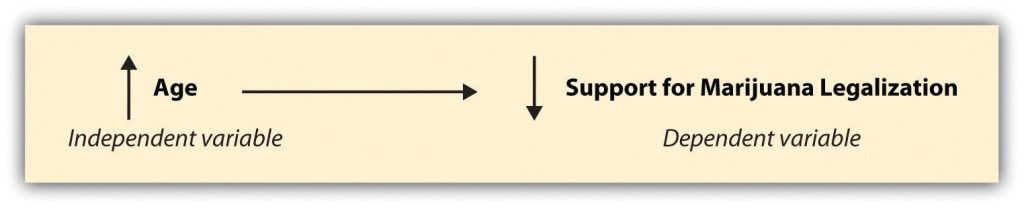

Dependent variable– a variable that depends on changes in the independent variable (7.2)

Descriptive research– research that describes or defines a particular phenomenon (7.1)

Direct experience– learning through informal observation (1.1)

Discriminant validity– if a measure is not related to measures to which it shouldn’t be statistically correlated (9.4)

Dissemination– “a planned process that involves consideration of target audiences and the settings in which research findings are to be received and, where appropriate, communicating and interacting with wider policy and…service audiences in ways that will facilitate research uptake in decision-making processes and practice” (Wilson, Petticrew, Calnan, & Nazareth, 2010, p. 91) (16.2)

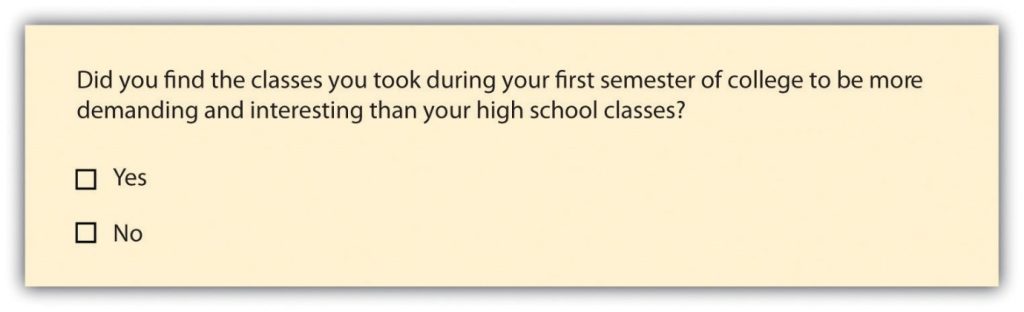

Double-barreled question– a question that asks two different questions at the same time, making it difficult for a research participant to respond accurately (11.4)

Double-blind– when researchers who interact with participants are unaware of who is in the control or experimental group (12.3)

Dunning-Kruger effect– when unskilled people overestimate their ability and knowledge (and experts underestimate their ability and knowledge)

E-

Ecological fallacy– when claims about some lower-level unit of analysis are made based on data from some higher-level unit of analysis (7.3)

Emphasis– in a mixed methods study, refers to the priority that each method is given (7.4)

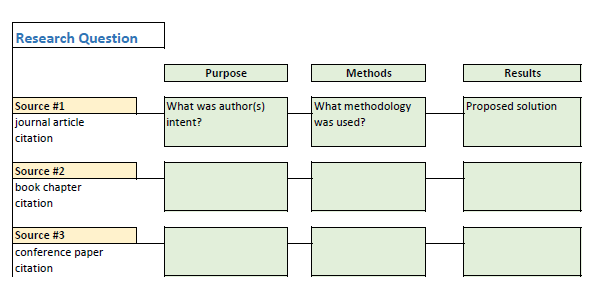

Empirical articles– articles that apply theory to a behavior and report the results of a quantitative or qualitative data analysis conducted by the author (2.2)

Empirical questions– questions that can be answered by observing experiences in the real world (8.1)

Epistemology– a set of assumptions about how we come to know what is real and true (1.1)

Ethical questions– questions that ask about general moral opinions about a topic and cannot be answered through science (8.1)

Evaluation research– research that evaluates the outcomes of a policy or program (1.3)

Evidence-based practice– making decisions on how to help clients based on the best available evidence (1.3)

Ex post facto control group– a control group created when a researcher matches individuals after the intervention is administered (12.2)

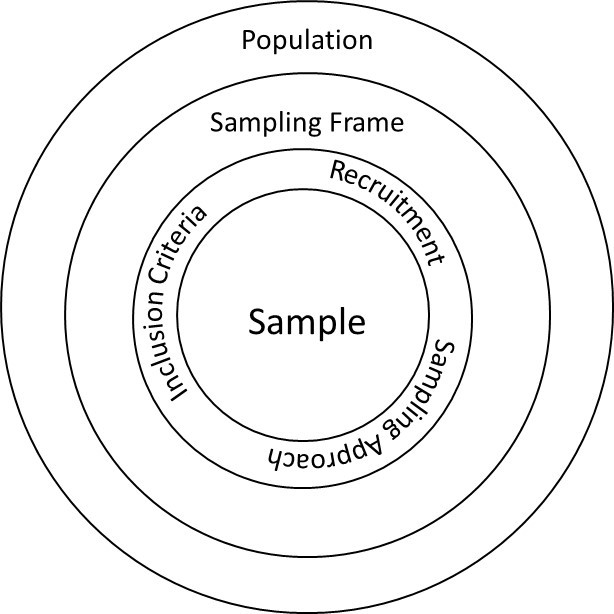

Exclusion criteria– characteristics that disqualify a person from being included in a sample (10.1)

Exempt review– lowest level of IRB review for studies with minimal risk or human subject involvement (5.1)

Exhaustiveness– when all possible attributes are listed (9.5)

Expedited review– middle level of IRB review for studies with minimal risk but greater human subject involvement (5.1)

Experiment– a method of data collection designed to test hypotheses under controlled conditions (12.1)

Experimental group– the group in an experiment that receives the intervention (12.1)

Explanatory research– explains why particular phenomena work in the way that they do; answers “why” questions (7.1)

Exploratory research– conducted during the early stages of a project, usually when a researcher wants to test the feasibility of conducting a more extensive study (7.1)

External validity– the degree to which experimental conclusions generalize to larger populations and different situations (12.3)

F-

Face validity– if it is plausible that the measure measures what it intends to (9.4)

Fairness– the degree to which “different constructions, perspectives, and positions are not only allowed to emerge, but are also seriously considered for merit and worth” (Rodwell, 1998, p. 107) (9.4)

False negative– when a measure does not indicate the presence of a phenomenon, when in reality it is present (9.5)

False positive– when a measure indicates the presence of a phenomenon, when in reality it is not present (9.5)

Fence-sitters– respondents who choose neutral response options, even if they have an opinion (11.4)

Field notes– written notes produced by the researcher during the data collection process (13.2)

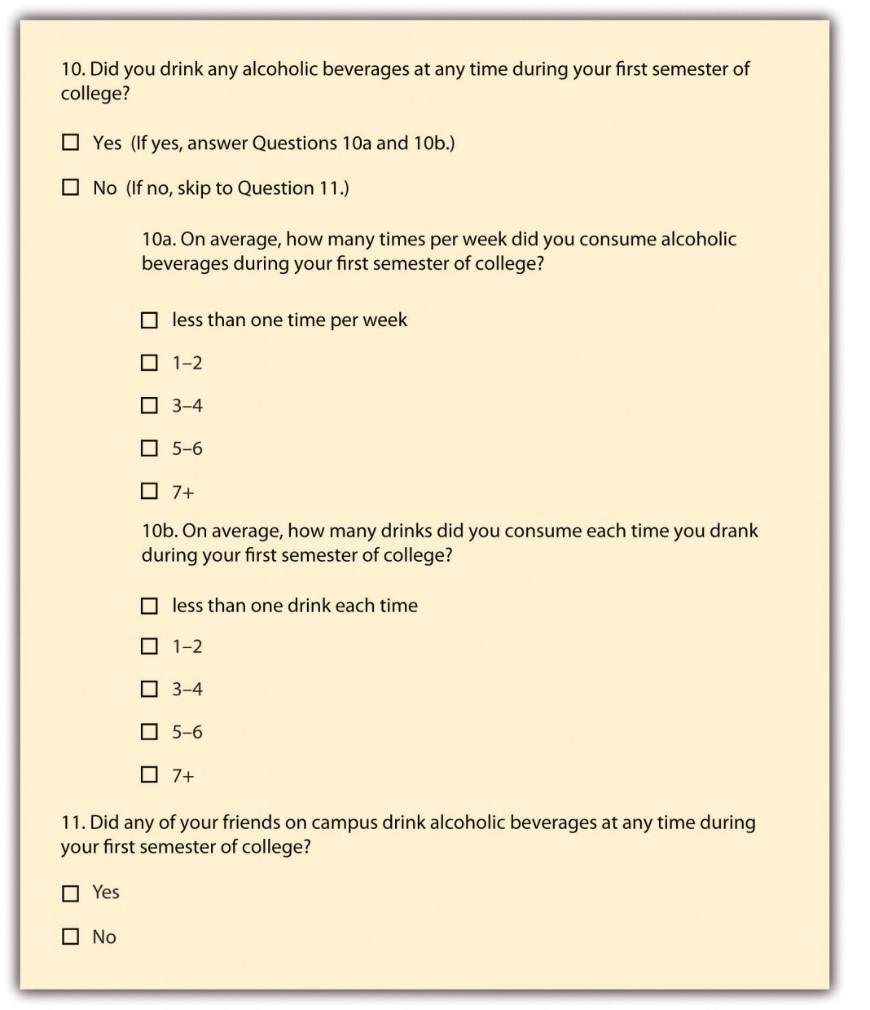

Filter question– a question that identifies some subset of survey respondents who are asked additional questions that are not relevant to the entire sample (11.4)

Floaters– respondents who choose a substantive answer to a question when, in reality, they don’t understand the question or don’t have an opinion (11.4)

Focus groups– planned discussions designed to elicit group interaction and “obtain perceptions on a defined area of interest in a permissive, nonthreatening environment” (Krueger & Casey, 2000, p. 5) (13.4)

Focused coding– collapsing or narrowing down codes, defining codes, and recoding each transcript using a final code list (13.5)

Frequency distribution– summarizes the distribution of responses on a single survey question (12.4)
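A minimal sketch of a frequency distribution for one survey question, assuming hypothetical answers; it counts each attribute and reports its share of all responses using Python's `collections.Counter`.

```python
from collections import Counter

# Hypothetical answers to one survey question
answers = ["agree", "disagree", "agree", "neutral", "agree", "disagree"]

freq = Counter(answers)
total = len(answers)
for attribute, count in freq.most_common():
    # attribute, raw count, and percentage of all responses
    print(f"{attribute:<10}{count:>3}{count / total:>8.1%}")
```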

Full board review– highest level of IRB review, for studies with greater than minimal risk to participants (5.1)

G-

Generalizability– the idea that a study’s results will tell us something about a group larger than the sample from which the findings were generated (10.3)

Generalize– to make claims about a larger population based on an examination of a smaller sample (7.2)

Gray literature– research and information released by non-commercial publishers, such as government agencies, policy organizations, and think-tanks (2.2)

H-

Hawthorne effect– participants in a study will behave differently because they know they are being observed (14.2)

Historical research– analyzing data from primary sources of historical events and proceedings (14.4)

Hypothesis– a statement describing a researcher’s expectation regarding what she anticipates finding (7.2)

I-

Idiographic research– attempts to explain or describe the phenomenon exhaustively, based on the subjective understandings of the participants (7.2)

Inclusion criteria– the characteristics a person must possess in order to be included in a sample (10.1)

In-depth interviews– interviews in which researchers hear from respondents about what they think is important about the topic at hand in the respondent’s own words (13.2)

Independence– when there is no relationship between the two variables in question (12.4)

Independent variable– a variable that causes a change in the dependent variable (7.2)

Index– a measure that contains several indicators and is used to summarize a more general concept (9.3)

Indicators– represent the concepts that a researcher is interested in studying (9.3)

Indirect observables– things that require indirect observation and inference to measure (9.1)

Individual matching– pairing participants with similar attributes for the purpose of assignment to groups (12.2)

Inductive approach– when a researcher starts with a set of observations and then moves from particular experiences to a more general set of propositions about those experiences (6.3)

Informed consent– a research subject’s voluntary agreement to participate in a study based on a full understanding of the study and of the possible risks and benefits involved (5.2)

Inputs– resources needed for the program to operate (15.1)

Internal consistency reliability– the degree to which scores on each question of a scale are correlated with each other (9.4)
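Internal consistency is commonly summarized with Cronbach's alpha, a statistic this entry does not name. A minimal sketch, assuming hypothetical scores on a three-item scale answered by five respondents; the function name `cronbach_alpha` is illustrative.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """item_scores: one list per item, each holding every respondent's score on that item."""
    k = len(item_scores)
    totals = [sum(scores) for scores in zip(*item_scores)]  # each respondent's total scale score
    item_var_sum = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))

# Hypothetical 3-item scale answered by 5 respondents (1-5 Likert-type responses)
items = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 1, 4],
]
print(round(cronbach_alpha(items), 2))
```

Values closer to 1 indicate items whose scores rise and fall together; this hypothetical example works out to about 0.92.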

Internal validity– the confidence researchers have about whether their intervention produced variation in their dependent variable (12.3)

Inter-rater reliability– the degree to which different observers agree on what happened (9.4)
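Agreement between two observers can be reported as simple percent agreement or as Cohen's kappa, which corrects for agreement expected by chance. A minimal sketch, assuming hypothetical codes from two raters; the function names are illustrative.

```python
from collections import Counter

def percent_agreement(rater_a, rater_b):
    """Proportion of items on which the two raters assigned the same code."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must code the same items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Observed agreement corrected for the agreement expected by chance alone."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same code independently
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical codes two observers assigned to the same 10 observations
a = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "no", "yes"]
b = ["yes", "no", "no", "yes", "no", "yes", "yes", "yes", "no", "yes"]
print(percent_agreement(a, b), round(cohens_kappa(a, b), 2))
```

Here the raters agree on 80% of observations, but once chance agreement is removed, kappa is only about 0.58.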

Interval level– a level of measurement that is continuous, can be rank ordered, is exhaustive and mutually exclusive, and for which the distance between attributes is known to be equal (9.5)

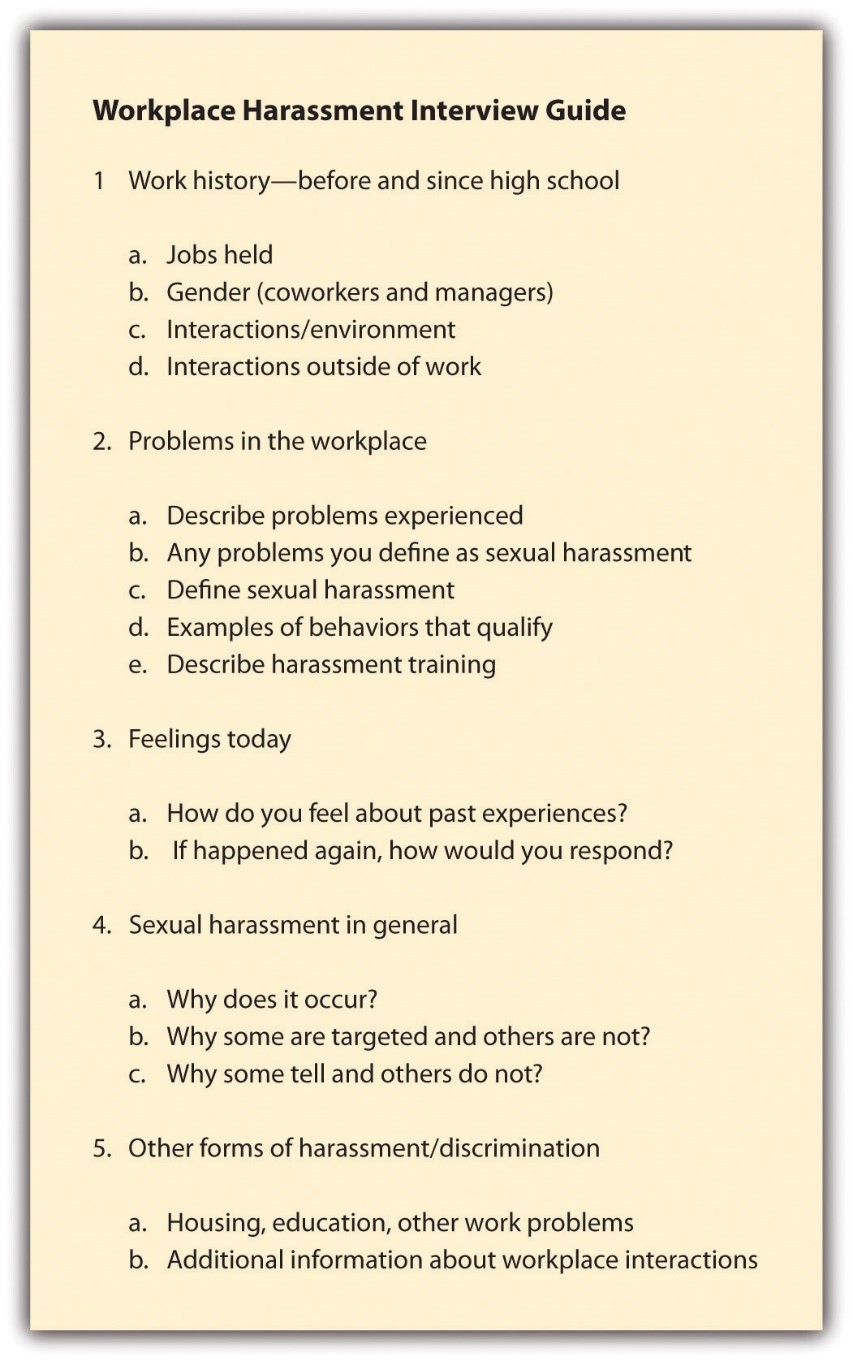

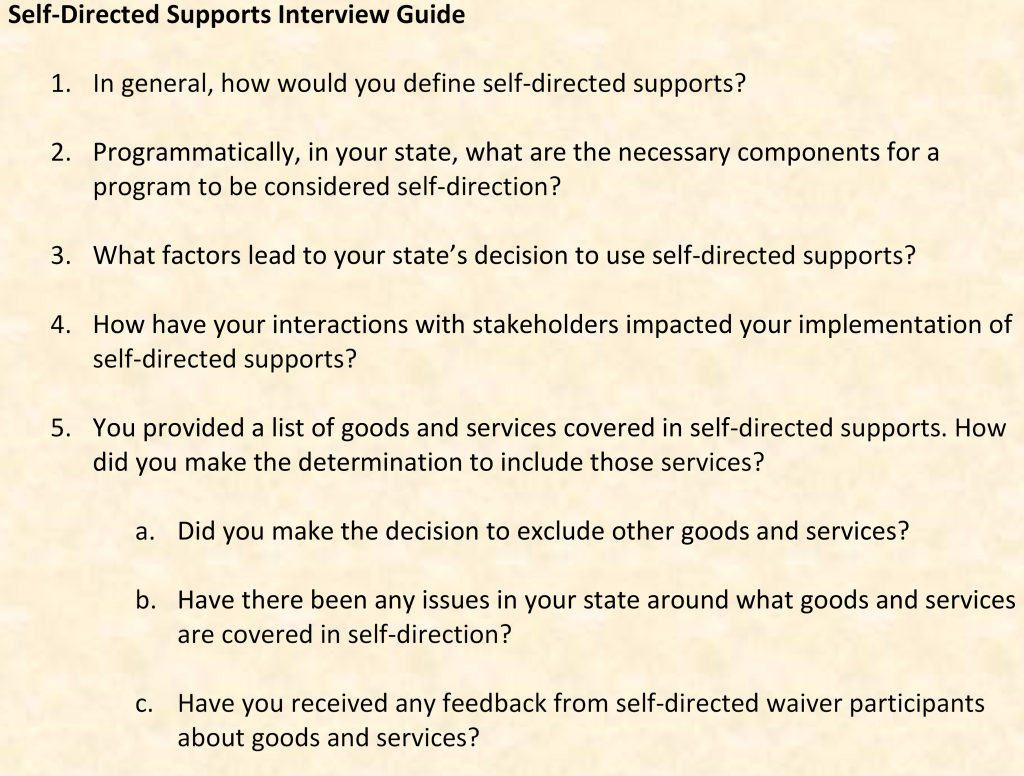

Interview guide– a list of topics or questions that the interviewer hopes to cover during the course of an interview (13.2)

Interview schedules– when a researcher poses questions verbally to respondents (11.3)

Interviews– a method of data collection that involves two or more people exchanging information through a series of questions and answers (13.1)

Intuition– your “gut feeling” about what to do

J-

Journaling– making notes of emerging issues and changes during the research process (13.2)

L-

Latent content– the underlying meaning of the surface content (14.3)

Leading question– a question with wording that influences how a participant responds (9.5)

Likert scales– ordinal measures that use numbers as a shorthand (e.g., 1=highly likely, 2=somewhat likely, etc.) to indicate what attribute the person feels describes them best (9.5)

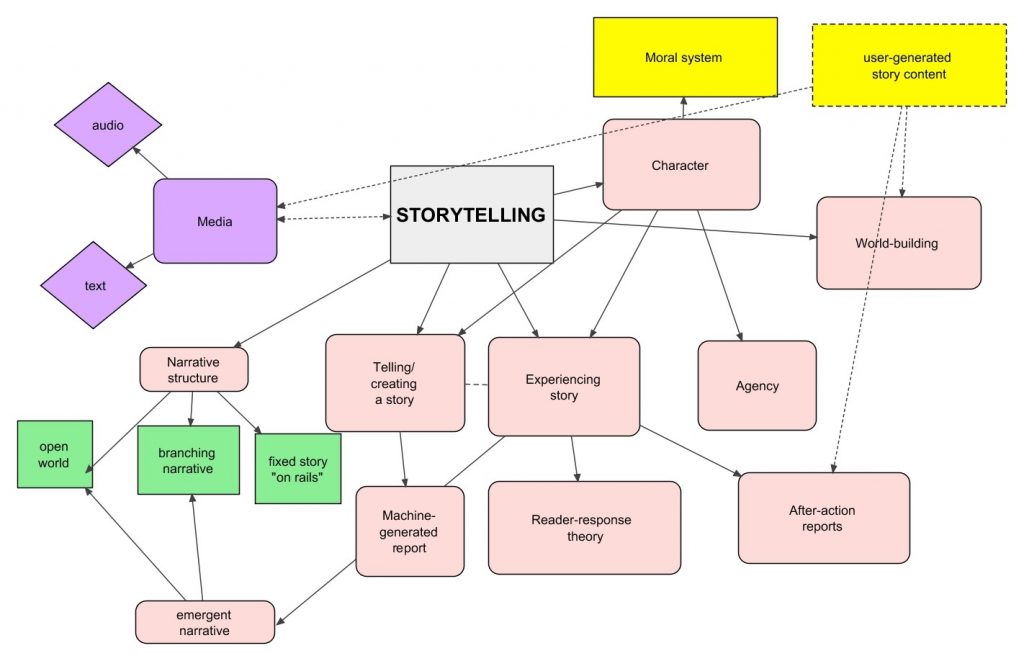

Literature review– a survey of factual or nonfiction books, articles, and other documents published on a particular subject (4.1)

Longitudinal surveys– surveys in which a researcher makes observations over an extended period of time (11.3)

M-

Macro-level– examining social structures and institutions (1.1)

Manifest content– the most apparent and surface-level content in a communication (14.3)

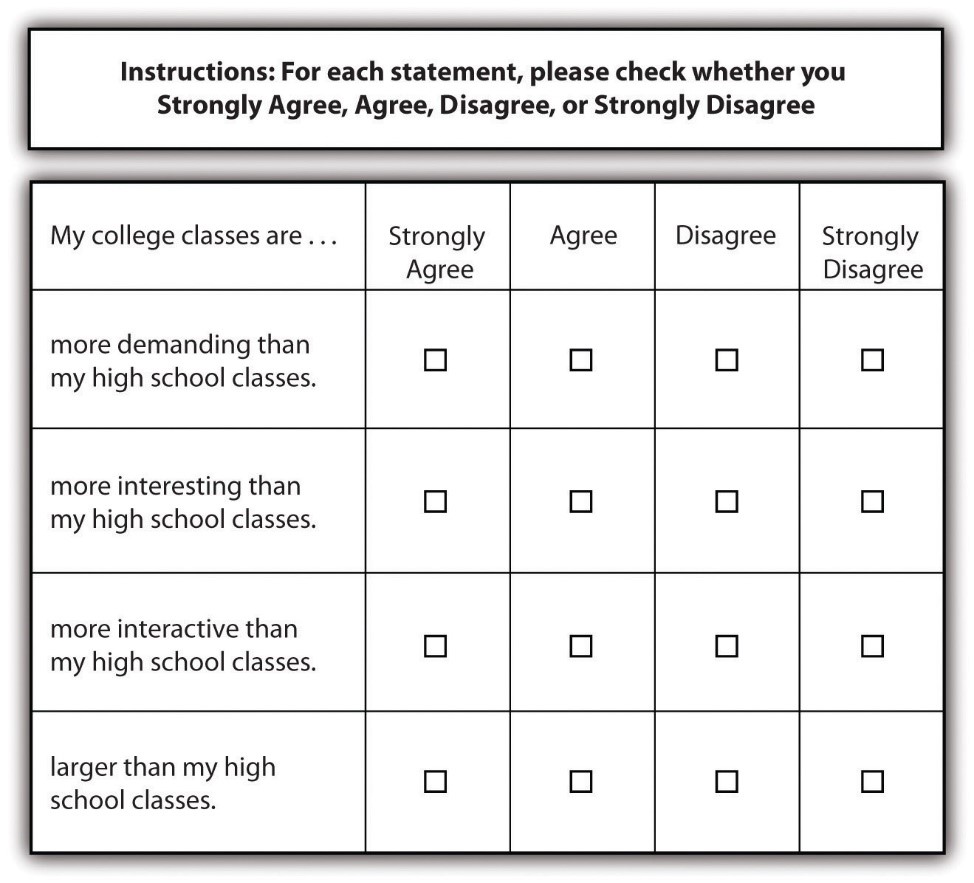

Matrix question– lists a set of questions for which the answer categories are all the same (11.4)

Mean– also known as the average, this is the sum of the value of all responses on a given variable divided by the total number of responses (12.4)

Measurement– the process by which researchers describe and ascribe meaning to the key facts, concepts, or other phenomena they are investigating (9.1)

Median– the value that lies in the middle of a distribution of responses (12.4)

Meso-level– examining interaction between groups (1.1)

Micro-level– examining the smallest levels of interaction, usually individuals (1.1)

Mode– the most common response given to a question (12.4)
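The mean, median, and mode defined above can each be computed with Python's standard `statistics` module. A minimal sketch, assuming hypothetical responses to a single survey question:

```python
from statistics import mean, median, mode

# Hypothetical responses to a single survey question (e.g., number of household members)
responses = [2, 3, 3, 4, 5, 3, 7]

print(mean(responses))    # sum of all responses divided by the number of responses
print(median(responses))  # middle value once the responses are sorted: 3
print(mode(responses))    # most common response: 3
```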

Moderator– the researcher tasked with facilitating the conversation in the focus group (13.4)

Multi-dimensional concepts– concepts that are composed of multiple elements (9.2)

Multiple treatment design– beginning a new course of treatment or adding a new dimension to an existing treatment (15.2)

Multivariate analysis– quantitative analysis that examines relationships among more than two variables (12.4)

Mutual exclusivity– when a person cannot identify with two different attributes simultaneously (9.5)

N-

Natural experiments– situations in which comparable groups are created by differences that already occur in the real world (12.2)

Nominal– a level of measurement that is categorical and for which those categories cannot be mathematically ranked, though they are exhaustive and mutually exclusive (9.5)

Nomothetic research– a type of research that provides a more general, sweeping explanation that is universally true for all people (7.2)

Nonequivalent comparison group design– a quasi-experimental design similar to a classic experimental design but without random assignment (12.2)

Nonprobability sampling– sampling techniques for which a person’s likelihood of being selected for membership in the sample is unknown (10.2)

Nonresponse bias– bias reflected in differences between people who respond to a survey and those who do not respond (12.4)

Null hypothesis– the assumption that no relationship exists between the variables in question (3.1)

O-

Objective truth– a single truth, observed without bias, that is universally applicable

Observational terms– things that can be seen with the naked eye simply by looking at them (9.1)

One-group pre-/posttest design– a type of pre-experimental design that applies an intervention to one group and administers a pretest and posttest (12.2)

One-shot case study– a pre-experimental design that applies an intervention to only one group without a pretest (12.2)

Ontology– a set of assumptions about what is real (1.1)

Open coding– reading through each transcript, line by line, and making a note of whatever categories or themes seem to jump out (13.5)

Open-ended questions– questions for which the researcher does not include response options (11.4)

Operationalization– a process by which researchers conducting quantitative research spell out precisely how a concept will be measured and how to interpret that measure (9.3)

Oral presentation– a verbal presentation of research findings to a conference audience (16.2)

Ordinal– a level of measurement that is categorical, has categories that can be rank ordered, and those categories are exhaustive and mutually exclusive (9.5)

Outcomes– the issues a researcher is trying to change in her clients (15.1)

Outcomes assessment– an evaluation designed to discover if a program achieved its intended outcomes (15.1)

Outputs– tangible results of the program process (15.1)

Overgeneralization– using limited observations to make assumptions about broad patterns (1.1)

P-

Panel survey– describes how people in a specific group change over time, asking the same people each time the survey is administered (11.3)

Paradigm– a way of viewing the world and a framework from which to understand the human experience (6.2)

Peer review– a formal process in which other esteemed researchers and experts ensure the work meets the standards and expectations of the professional field (2.2)

Periodicity– the tendency for a pattern to occur at regular intervals (10.3)

Placebo effect– when a participant feels better, despite having received no intervention at all (12.3)

Plagiarism– presenting someone else’s words or ideas as if they are your own (16.2)

Plausibility– in order to make the claim that one event, behavior, or belief causes another, the claim has to make sense (7.2)

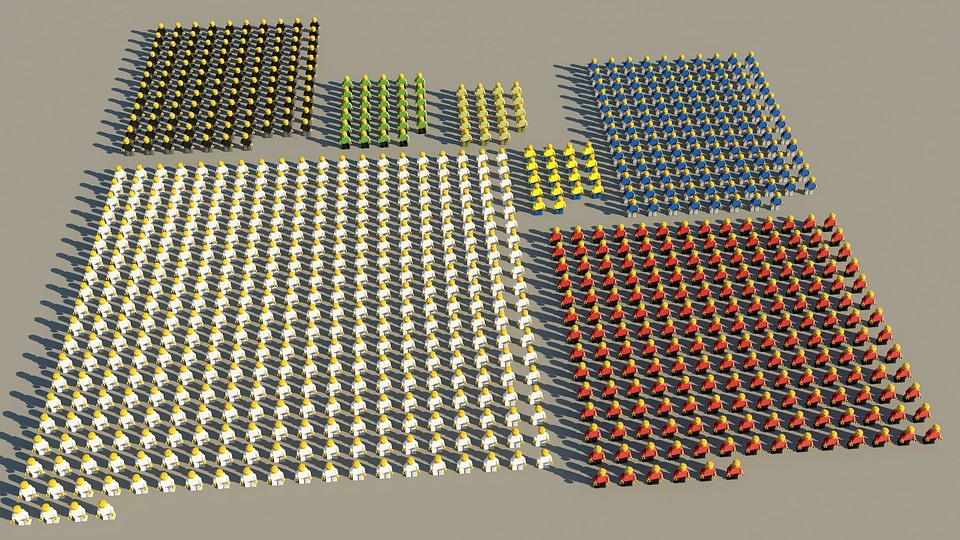

Population– the cluster of people about whom a researcher is most interested (10.1)

Positivism– a paradigm guided by the principles of objectivity, knowability, and deductive logic (6.2)

Poster presentation– presentations that use a poster to visually represent the elements of the study (16.2)

Postmodernism– a paradigm focused on the historical and contextual embeddedness of scientific knowledge and a skepticism towards certainty and grand explanations in social science (6.2)

Posttest– a measurement taken after the intervention (12.1)

Posttest-only control group design– a type of experimental design that uses random assignment and an experimental and control group, but does not use a pretest (12.1)

Practical articles– describe “how things are done” in practice (Wallace & Wray, 2016, p. 20) (2.2)

Practice wisdom– “learning by doing” that guides social work intervention and increases over time (1.1)

Predictive validity– if a measure is able to predict future outcomes that it should theoretically predict (9.4)

Pre-experimental designs– a variation of experimental design that lacks the rigor of experiments and is often used before a true experiment is conducted (12.2)

Pretest– a measurement taken prior to the intervention (12.1)

Primary source– published results of original research studies (2.2)

Probability proportionate to size– in cluster sampling, giving clusters different chances of being selected based on their size so that each element within those clusters has an equal chance of being selected (10.3)

Probability sampling– sampling approaches for which a person’s likelihood of being selected from the sampling frame is known (10.3)

Probe– a request for more information in qualitative research (13.3)

Process assessment– an evaluation conducted during the earlier stages of a program or on an ongoing basis (15.1)

Program– the intervention clients receive (15.1)

Purposive sample– when a researcher seeks out participants with specific characteristics (10.2)

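P-value– the probability of observing a relationship at least as strong as the one found in the data if, in reality, no relationship existed between the variables under study (that is, if the null hypothesis were true) (3.1)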
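A p-value is the probability of results at least as extreme as those observed, assuming the null hypothesis of no relationship is true. One way to make this concrete is a permutation test, a technique swapped in here for illustration rather than one named in this glossary: shuffle the group labels many times and count how often the shuffled difference is at least as large as the real one. A minimal sketch, assuming hypothetical posttest scores; the function name `permutation_p_value` is illustrative.

```python
import random

def permutation_p_value(group_a, group_b, n_permutations=10_000, rng=random):
    """Estimate a two-sided p-value for a difference in group means by shuffling labels."""
    def avg(values):
        return sum(values) / len(values)

    observed = abs(avg(group_a) - avg(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # If the null hypothesis is true, group labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(avg(pooled[:n_a]) - avg(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Adding 1 to both counts keeps the estimate from ever being exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)

# Hypothetical posttest scores
experimental = [14, 17, 15, 19, 18, 16]
control = [12, 13, 15, 11, 14, 12]
p = permutation_p_value(experimental, control, rng=random.Random(0))
print(round(p, 3))
```

A small p-value says the observed difference would be rare if there were truly no relationship; it is not the probability that the null hypothesis is true.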

Q-

Qualitative methods– examine words or other media to understand their meaning (1.2)

Quantitative methods– examine numerical data to precisely describe and predict elements of the social world (1.2)

Quasi-experimental design– a variation of experimental design that lacks random assignment to experimental and control groups (12.2)

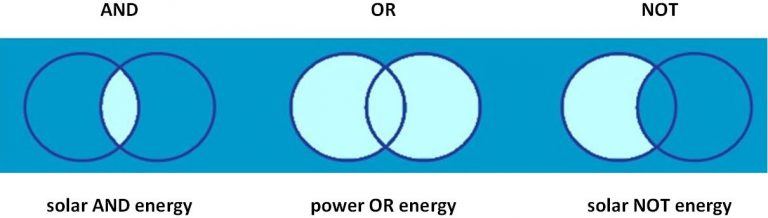

Query– search terms used in a database to find sources (2.3)

Quota sample– when a researcher selects cases from within several different subgroups (10.2)

R-

Random assignment– using a random process to assign people into experimental and control groups (12.1)
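A minimal sketch of random assignment, assuming a hypothetical list of ten participant IDs: shuffle the list and split it in half, so chance alone determines who lands in each group. The function name `randomly_assign` is illustrative.

```python
import random

def randomly_assign(participants, rng=random):
    """Shuffle a copy of the participant list and split it into two groups."""
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}

participants = [f"p{i}" for i in range(10)]  # hypothetical participant IDs
groups = randomly_assign(participants, random.Random(11))
print(groups)
```

This differs from random selection: assignment decides which group an already-recruited participant joins, not who is recruited from the sampling frame.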

Random error– unpredictable error that does not result in scores that are consistently higher or lower on a given measure (9.5)

Random selection– using randomly generated numbers to determine who from the sampling frame gets recruited into the sample (10.3)

Ratio level– a level of measurement in which attributes are mutually exclusive and exhaustive, attributes can be rank ordered, the distance between attributes is equal, and attributes have a true zero point (9.5)

Recruitment– the process by which the researcher informs potential participants about the study and attempts to get them to participate (10.1)

Reductionism– when claims about some higher-level unit of analysis are made based on data from a lower-level unit of analysis (7.3)

Reification– assuming that abstract concepts exist in some concrete, tangible way (9.2)

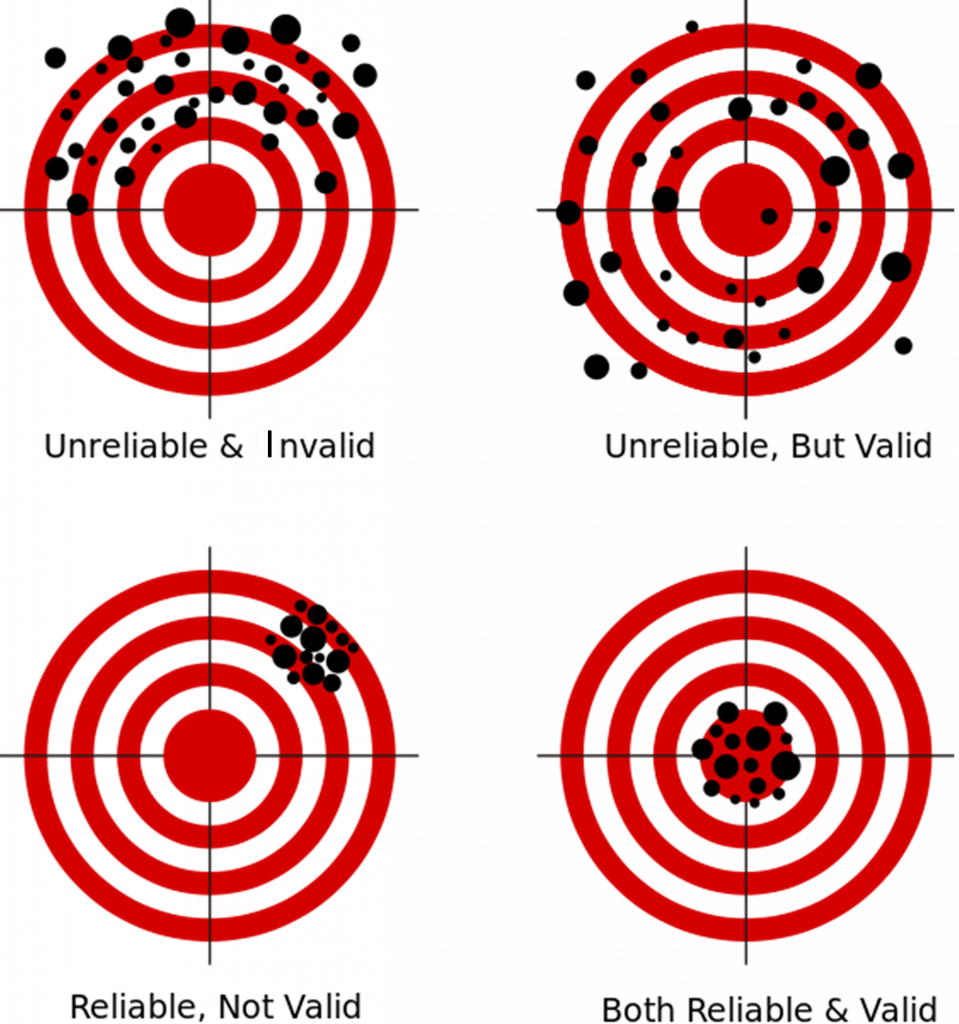

Reliability– a measure’s consistency (9.4)

Replication– conducting another researcher’s experiment in the same manner and seeing if it produces the same results (12.3)

Representative sample– a sample that resembles the population from which it was drawn in all the ways that are important for the research being conducted (10.3)

Reproducibility– the extent to which a researcher’s coding procedures will result in the same results when the same text is coded by different people (14.5)

Research methods– an organized, logical way of knowing based on theory and observation (1.1)

Response rate– the number of people who respond to a survey divided by the number of people to whom the survey was distributed (12.4)
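The response rate is a simple ratio. A minimal sketch, assuming hypothetical counts of 60 returned surveys out of 150 distributed; the function name `response_rate` is illustrative.

```python
def response_rate(num_responded: int, num_distributed: int) -> float:
    """Share of distributed surveys that were returned, as a proportion between 0 and 1."""
    if num_distributed <= 0:
        raise ValueError("num_distributed must be positive")
    return num_responded / num_distributed

# Hypothetical example: 150 surveys mailed, 60 returned
rate = response_rate(60, 150)
print(f"{rate:.0%}")  # prints "40%"
```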

Retrospective surveys– surveys that describe changes over time but are administered only once (11.3)

Roundtable presentation– presentations designed to stimulate discussion on a topic (16.2)

S-

Sample– the group of people who are successfully recruited from the sampling frame to participate in a study (10.1)

Sampling error– a statistical calculation of the difference between results from a sample and the actual parameters of a population (10.3)

Sampling frame– a real or hypothetical list of people from which a researcher will draw her sample (10.1)

Scale– a composite measure designed in a way that accounts for the possibility that different items on an index may vary in intensity (9.3)

Science– a particular way of knowing that attempts to systematically collect and categorize facts or knowledge (1.2)

Secondary data analysis– analyzing data originally gathered by another person or entity (14.4)

Secondary sources– interpret, discuss, and summarize original sources (2.2)

Selection bias– when a researcher consciously or unconsciously influences assignment into experimental and control groups (12.3)

Self-administered questionnaires– when a research participant is given a set of questions, in writing, to which they are asked to respond (11.3)

Semi-structured interviews– interviews in which the questions are open ended and may not be asked in exactly the same way or in exactly the same order to each and every respondent (13.2)

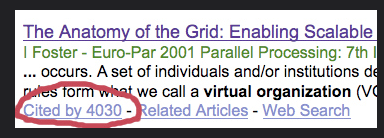

Seminal articles– classic works noted for their contribution to the field and high citation count (2.2)

Sequence– in a mixed methods study, refers to the order that each method is used, either concurrently or sequentially (7.4)

Signposting– words that identify the organization and structure of a literature review (4.3)

Simple random sampling– selecting elements from a list using randomly generated numbers (10.3)
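A minimal sketch of simple random sampling, assuming a hypothetical sampling frame of 200 client IDs. Python's `random.Random.sample` draws without replacement, giving every element the same chance of selection; the fixed seed only makes the draw reproducible.

```python
import random

# Hypothetical sampling frame: a list of client ID numbers
sampling_frame = [f"client-{i:03d}" for i in range(1, 201)]

rng = random.Random(42)  # fixed seed so the draw can be reproduced
sample = rng.sample(sampling_frame, k=20)  # 20 distinct elements, each equally likely
print(sample[:5])
```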

Snowball sample– when a researcher relies on participant referrals to recruit new participants (10.2)

Social constructionism– a paradigm based on the idea that social context and interaction frame our realities (6.2)

Social desirability bias– when respondents answer based on what they think other people would like, rather than what is true (9.5)

Solomon four-group design– a type of experimental design that uses random assignment, two experimental and two control groups, pretests for half of the groups, and posttests for all (12.1)

Spurious relationship– when an association between two variables appears to be causal but can in fact be explained by some third variable (7.2)

Stability– the extent to which the results of coding vary across different time periods (14.5)

Stakeholders– individuals or groups who have an interest in the outcome of the study a researcher conducts (8.5)

Static group design– uses an experimental group and a comparison group, without random assignment and pretesting (12.2)

Statistical significance– the conclusion that an observed relationship is unlikely to be explained by chance alone, typically because its p-value falls below a threshold set in advance, such as .05 (3.1)

Strata– the subgroups, defined by a characteristic relevant to the study, into which the population is divided before sampling (10.3)

Stratified sampling– dividing the study population into relevant subgroups and then drawing a sample from each subgroup (10.3)
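A minimal sketch of stratified sampling, assuming a hypothetical population of (ID, setting) pairs in which setting defines the strata. The function name `stratified_sample` is illustrative, and it draws the same number from each stratum (equal allocation); a proportionate design would instead size each draw to the stratum's share of the population.

```python
import random
from collections import defaultdict

def stratified_sample(population, stratum_of, n_per_stratum, rng=random):
    """Group elements by stratum, then draw a simple random sample from each group."""
    strata = defaultdict(list)
    for person in population:
        strata[stratum_of(person)].append(person)
    return {
        name: rng.sample(members, min(n_per_stratum, len(members)))
        for name, members in strata.items()
    }

# Hypothetical population: (id, setting) pairs, 30 rural and 60 urban
population = [(i, "urban" if i % 3 else "rural") for i in range(90)]
samples = stratified_sample(population, lambda p: p[1], 5, random.Random(1))
print(samples)
```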

Subjective truth– one truth among many, bound within a social and cultural context

Survey research– a quantitative method whereby a researcher poses some set of predetermined questions to a sample (11.1)

Systematic error– when measures consistently output incorrect data, usually in one direction and due to an identifiable process (9.5)

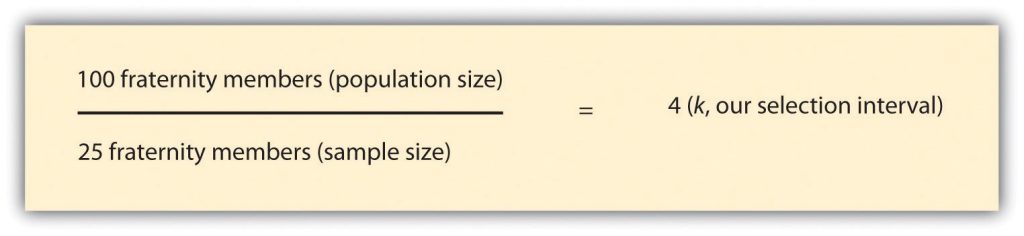

Systematic sampling– selecting every kth element from a list (10.3)
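A minimal sketch of systematic sampling, assuming a hypothetical list of 100 numbered elements. The sampling interval k is the list length divided by the desired sample size, and the starting point is chosen at random within the first interval. The function name `systematic_sample` is illustrative.

```python
import random

def systematic_sample(frame, n, rng=random):
    """Select every kth element after a random start, where k = len(frame) // n."""
    k = len(frame) // n  # sampling interval
    if k < 1:
        raise ValueError("sample size cannot exceed the size of the frame")
    start = rng.randrange(k)  # random starting point within the first interval
    return frame[start::k][:n]

frame = list(range(1, 101))  # hypothetical list of 100 numbered elements
sample = systematic_sample(frame, 10, random.Random(7))
print(sample)
```

If the list has a repeating pattern whose cycle matches k (periodicity), every selected element can share the same position in that pattern, biasing the sample.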

T-

Table– a quick, condensed summary of the report’s key findings (3.1)

Target population– a group of people whose needs your study addresses (8.2)

Temporality– whatever cause a researcher identifies must happen before the effect (7.2)

Tertiary sources– sources, such as Wikipedia, that synthesize or distill primary and secondary sources (2.2)

Testing effects– when a participant’s scores on a measure change because they have already been exposed to it (12.1)

Test-retest reliability– if a measure is given multiple times, the results will be consistent each time (9.4)
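Test-retest reliability is often checked by correlating scores from two administrations of the same measure. A minimal sketch, assuming hypothetical scores for six people measured two weeks apart; the function name `pearson_r` is illustrative.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two lists of paired scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical scores for the same six people on the same measure, two weeks apart
time_1 = [12, 18, 25, 9, 30, 21]
time_2 = [14, 17, 24, 10, 28, 22]
print(round(pearson_r(time_1, time_2), 2))
```

A correlation close to 1 suggests the measure yields consistent results over time, assuming the underlying trait did not actually change between administrations.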

Theoretical articles– articles that discuss a theory, conceptual model, or framework for understanding a problem (2.2)

Theory– “a systematic set of interrelated statements intended to explain some aspect of social life” (Rubin & Babbie, 2017, p. 615) (6.2)

Theory building– the creation of new theories based on inductive reasoning (7.2)

Theory testing– when a hypothesis is created from existing theory and tested mathematically (7.2)

Time series design– a quasi-experimental design that uses multiple observations before and after an intervention (12.2)

Transcript– a complete, written copy of the recorded interview or focus group containing each word that is spoken on the recording, noting who spoke which words (13.5)

Treatment stage– the time in which the treatment is administered by the social worker (15.2)

Trend– a pattern in the data of a single-subject design (15.2)

Trend survey– describes how people in a specific group change over time, asking different people each time the survey is administered (11.3)

True experiments– a group of experimental designs that use random assignment and contain independent and dependent variables, pretesting and posttesting, and experimental and control groups (12.1)

Trustworthiness– the “truth value, applicability, consistency, and neutrality” of the results of a research study (Rodwell, 1998, p. 96) (9.4)

Typology– a measure that categorizes concepts according to particular themes (9.3)

U-

Unit of analysis– an entity that a researcher wants to say something about at the end of her study (7.3)

Unit of observation– the item that a researcher actually observes, measures, or collects in the course of trying to learn something about her unit of analysis (7.3)

Univariate analysis– quantitative analysis that describes patterns across just one variable (12.4)

Unobtrusive research– methods of collecting data that don’t interfere with the subjects under study (14.1)

V-

Validity– a measure’s accuracy (9.4)

Variable– refers to a grouping of several characteristics (9.5)

Vulnerable populations– groups of people who receive additional protection during IRB review (5.1)