Intro

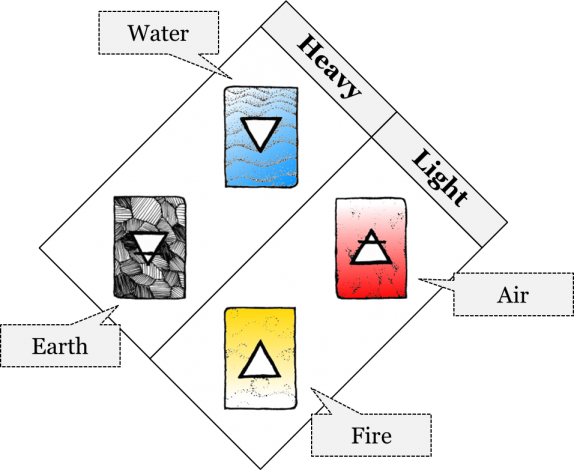

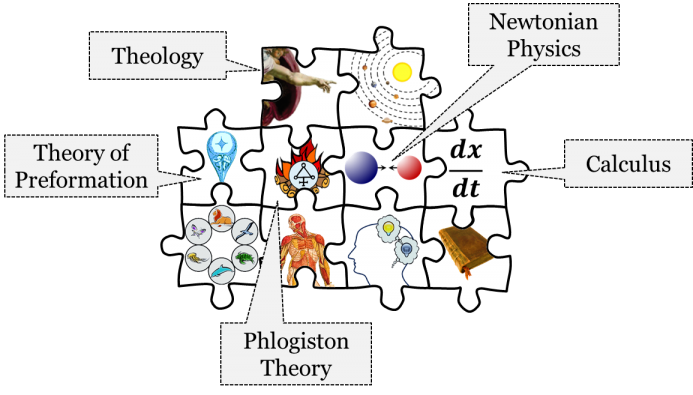

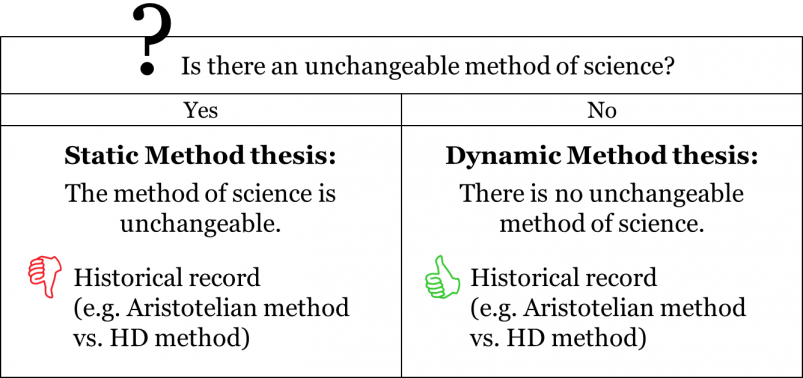

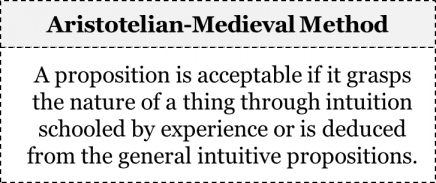

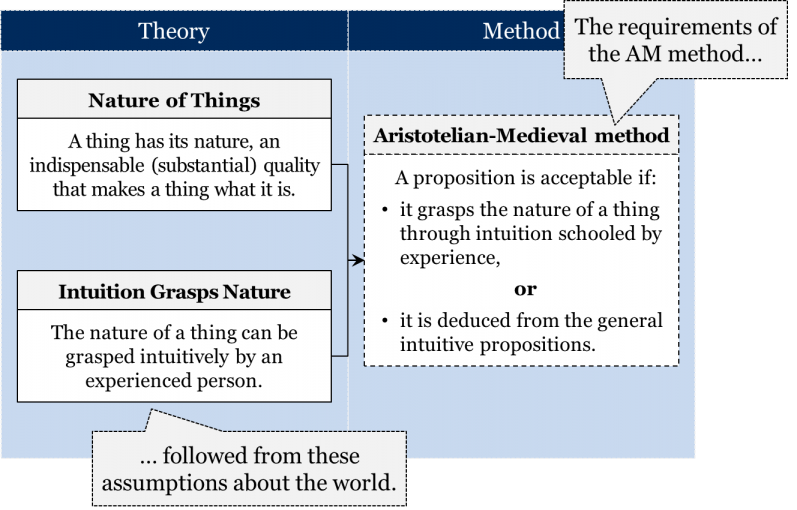

Usually, the seventeenth century is treated as an intellectually revolutionary period in the history of science. Filling the gap between the medieval Aristotelians and the massively influential Isaac Newton were the likes of Galileo Galilei, who tried and failed to convince the Catholic Church to accept a heliocentric cosmology, Robert Boyle, who experimented with gases in his air pump, and Pierre Gassendi, who renewed interest in ancient Greek atomic theory. However, what is often forgotten about all these theories is the context in which they were proposed; they were only ever pursued in the seventeenth century. In fact, as we learned in chapter 7, the Aristotelian-Medieval worldview was accepted by most scientific communities of Western Europe until the very end of the 1600s. So why didn’t the scientific communities of Western Europe accept any of the theories of these revolutionary figures? Simply, they all failed to satisfy the requirements of the then-employed Aristotelian-Medieval method.

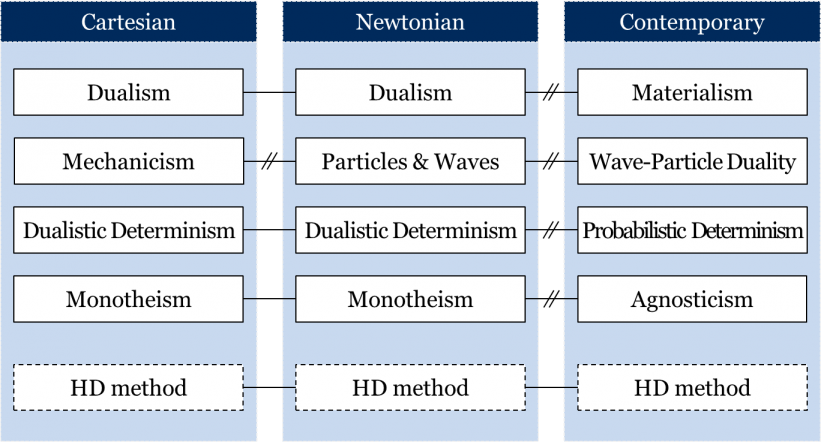

Well, all except for one. So far as we can tell, René Descartes (1596–1650), a French philosopher and mathematician, was the first to assemble an entire mosaic of theories that challenged the central tenets of the Aristotelian-Medieval worldview while, at the same time, satisfying the demands of the Aristotelian-Medieval method. By the beginning of the eighteenth century, textbooks and encyclopedias began to document and indicate the acceptance of this new worldview. We have come to call this the Cartesian worldview. It is the topic of this chapter.

Most of the key elements of the Cartesian mosaic were accepted or employed for a very short time: just four decades, from about 1700 to 1740. We say that this mosaic was accepted “on the Continent”, and by this we mean on the European continent; in places like France, the Netherlands, and Sweden, for example. This is to differentiate the acceptance of the Cartesian worldview “on the Continent” from the acceptance of the Newtonian worldview “on the Isles” – meaning in Britain (the topic of our next chapter), since both worldviews replaced the Aristotelian-Medieval worldview at the same time.

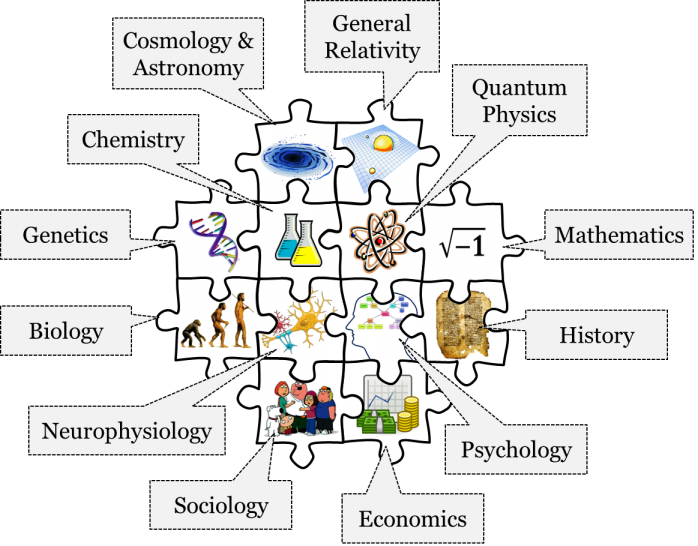

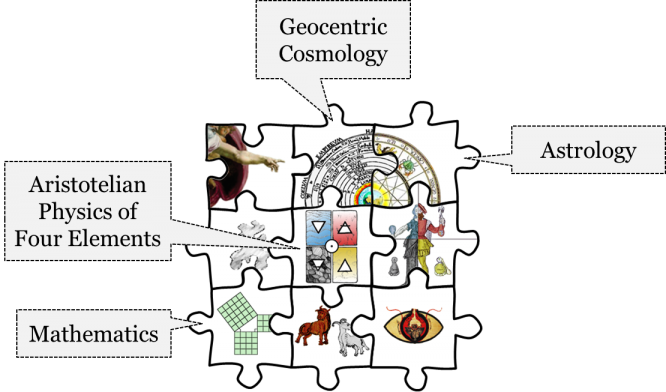

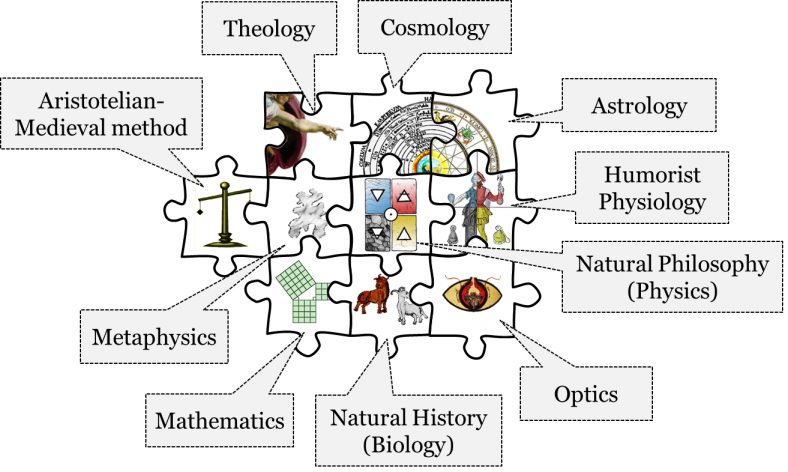

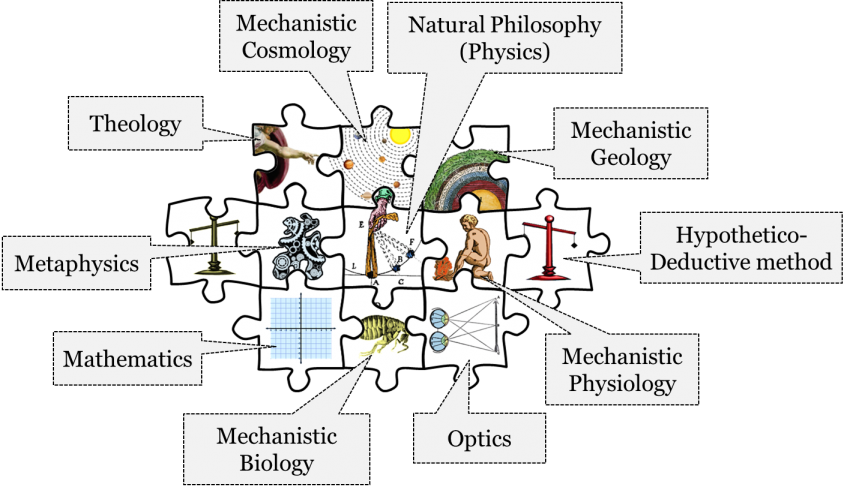

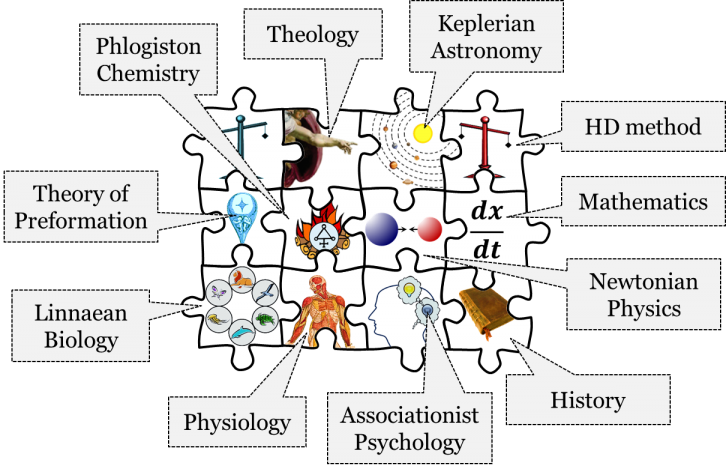

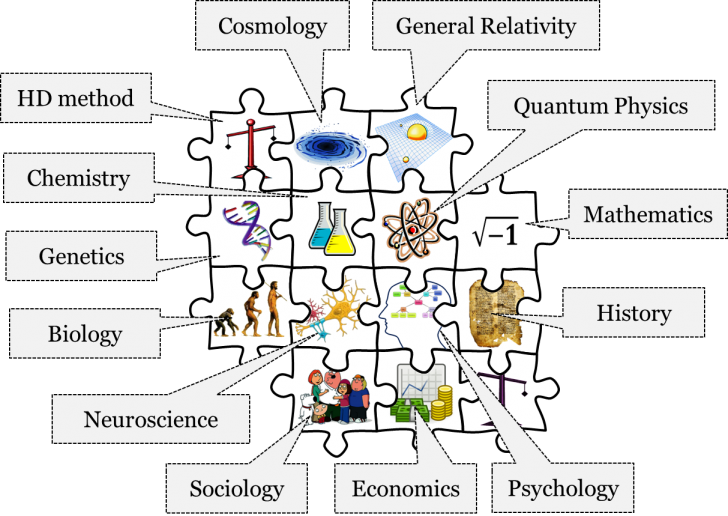

The key elements of the Cartesian mosaic include mechanistic metaphysics, theology, mechanistic physiology, natural philosophy or physics, mechanistic cosmology, mathematics, mechanistic geology, optics and biology, as well as the hypothetico-deductive method. You’ll notice the recurrent theme of mechanical philosophy, or mechanicism, in these elements, an idea fundamental to the Cartesian worldview.

In this chapter, we will elucidate some of the most important elements of the Cartesian mosaic and start to uncover the metaphysical principles or assumptions underlying these elements. Let’s begin with the metaphysics of the Cartesian mosaic, and what is perhaps Descartes’ most well-known quotation, cogito ergo sum, or “I think, therefore I am”.

Cartesian Metaphysics

Descartes first wrote these words, “I think, therefore I am”, in his Discourse on the Method in 1637. What Descartes was trying to do with this simple phrase was actually much more ambitious than proving his own existence. He was trying to create a foundation for knowledge – a starting point upon which all future attempts at understanding and describing the world would depend. And of course, to know anything requires a knower in the first place, right? In other words, we need a mind. Descartes recognized this, so he sought to found all knowledge on the existence of a thinker’s own mind.

To prove that his mind exists, Descartes didn’t actually start with the act of thinking. He started instead with the act of radical doubt: he doubted his knowledge of the external world, his knowledge of God, and even his knowledge of his own mind. The reason for this radical doubt was to ensure that he didn’t accept anything without justification. So, the first step was to doubt every single belief he held.

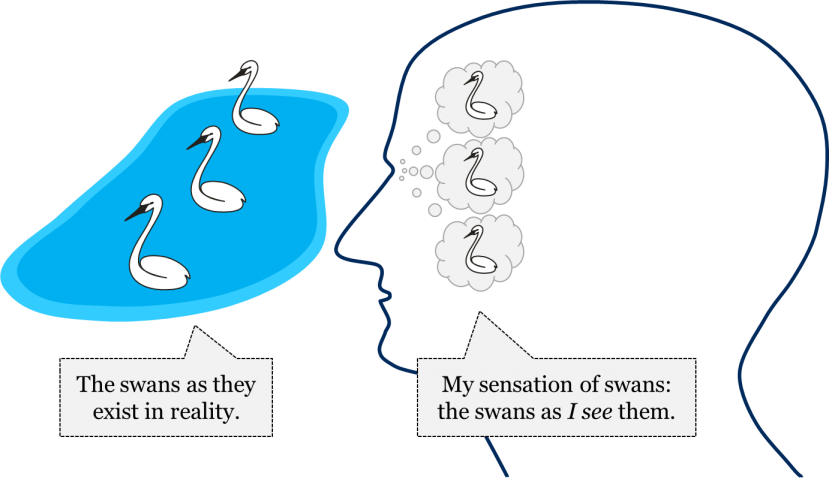

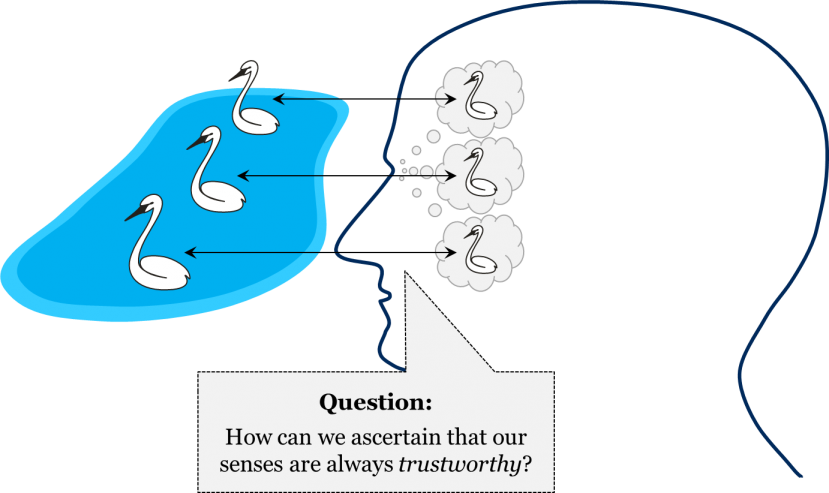

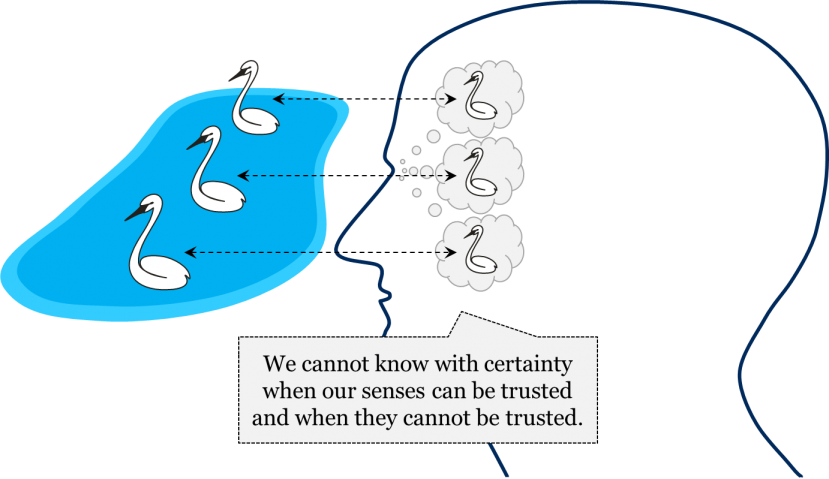

For one thing, Descartes understood that sometimes our senses deceive us, so we must doubt everything we experience and observe. He further understood that even experts sometimes make simple mistakes, so we should doubt everything we’ve been taught. Lastly, Descartes suggested that even our thoughts might be complete fabrications – be they the products of a dream or the machinations of an evil demon – so we should also doubt that those thoughts themselves are correct.

Yet, in the process of doubting everything, he realized that there was something that he could not doubt – the very act of doubting! Thus, Descartes could at least be certain that he was doubting. But doubting is itself a form of thinking. Therefore, Descartes could also be certain that he was thinking. But, surely, there can be no thinking without a thinker, i.e. a thinking mind. Hence, Descartes proved to himself the existence of his own mind: I doubt; therefore, I think; therefore, I am.

Importantly for Descartes, this argument is intuitively true, as anyone would agree that doubting is a way of thinking and that thinking requires a mind. Thus, according to Descartes, the existence of a doubter’s own mind is, for the doubter, established beyond any reasonable doubt.

It’s important to notice what Descartes is claiming to exist through this line of reasoning. He’s not suggesting that his physical body exists. So far, he has only proven to himself that his mind exists. If you think about it, the second “I” in “I think therefore I am” should really be “my mind” – hence, “I think, therefore my mind is”.

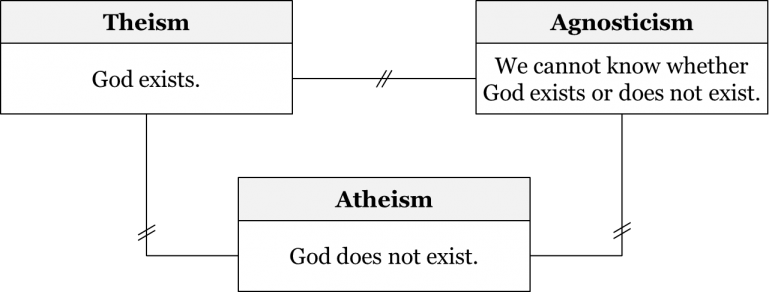

But, what about the existence of everything else? How does he know that, say, the buildings of the city around him truly exist? For this, Descartes turns to theology, a central piece of the Cartesian mosaic. You see, since our senses can deceive us, we need some sort of guarantor to ensure that what we perceive is, in fact, the case. For Descartes, this guarantor is none other than God. If Descartes can prove that God exists, then it will follow from God’s benevolence that the objects that appear clearly and distinctly to us actually exist in the external world.

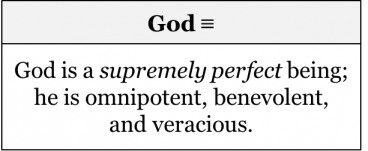

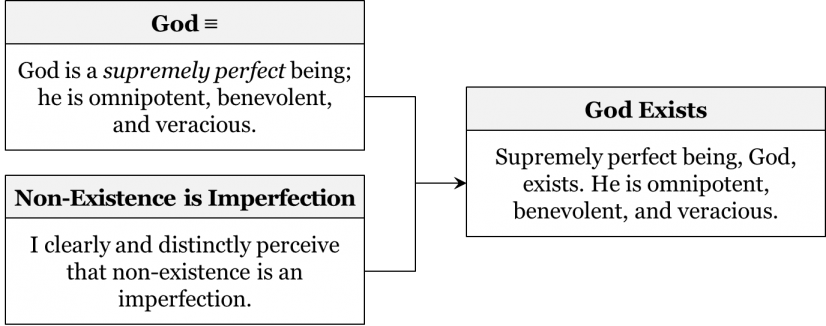

Descartes starts with a definition of God as a supremely perfect being.

Theologians of the time would readily accept Descartes’ definition. After all, the idea of God as a supremely perfect being is implicit in all Abrahamic religions. So here Descartes simply spells out the then-accepted definition of God as a being of all perfections: he is omnipotent or perfectly powerful, he is omniscient or perfectly knowing, he is omnibenevolent or perfectly good, and so on. Any other perfection, God necessarily has by definition. If being omniungular, or having perfect nails, were a form of perfection, then God would have it.

Descartes then suggests that existence is part of being perfect. If God is perfect in all ways, then he must exist as well. But how does Descartes, or any Cartesian theologian, for that matter, know that existence is a requirement for perfection? Well, they would say that it would be impossible to be perfect without existing. Existence alone doesn’t make you perfect, but non-existence clearly makes you imperfect. Descartes suggests that we clearly and distinctly perceive this non-existence as an imperfection.

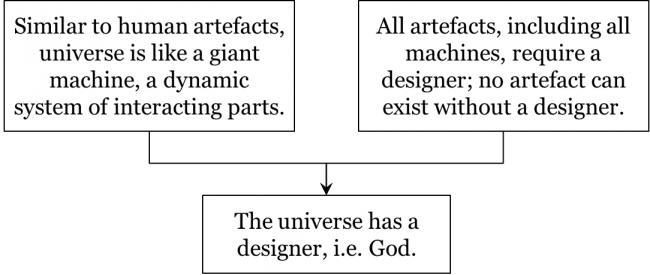

Therefore, according to Descartes, the most perfect being, i.e. God, must necessarily exist. This proof for the existence of God is also known as the ontological argument. It was first proposed by Saint Anselm, Archbishop of Canterbury, centuries before Descartes. Briefly, the gist of the argument goes like this:

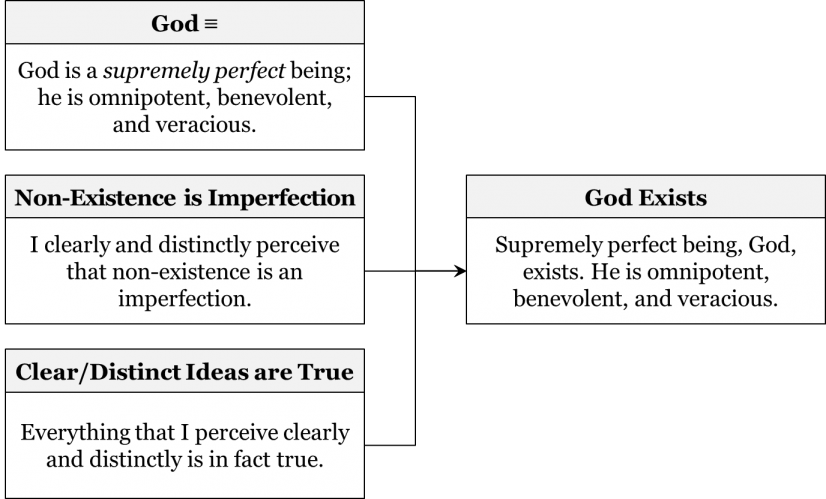

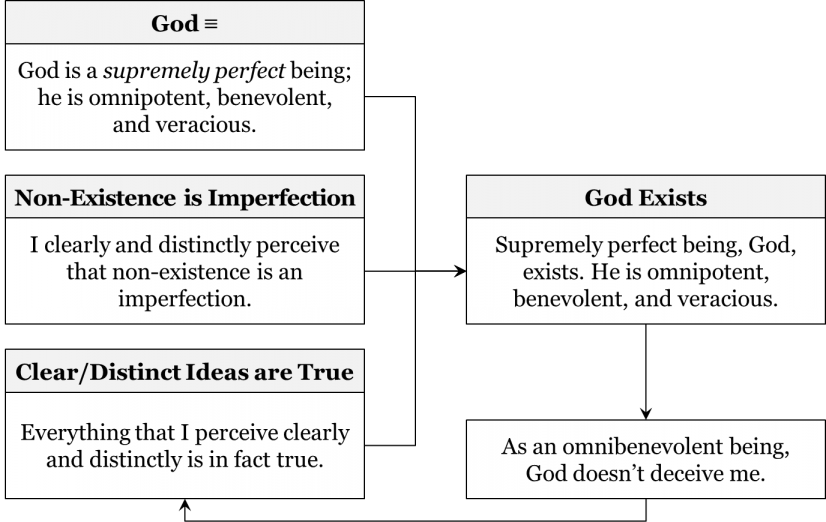

There are several objections to Descartes’ ontological argument – we’re going to consider only one of them. The objection is that the argument is circular, because of how Descartes secures the truth of his clear and distinct perception that non-existence is an imperfection. Specifically, God’s existence doesn’t follow merely from the definition of God and our perception that non-existence is an imperfection. There is a missing premise, which is that everything we perceive clearly and distinctly must necessarily be the case.

In fact, Descartes clearly accepts this premise, but he justifies it by pointing out that God is not a deceiver, from which it follows that everything we perceive clearly and distinctly must be the case. Since God is all-good, he is not a deceiver. Therefore, everything we clearly and distinctly perceive must be true. Indeed, if God allowed our clear and distinct ideas to be false, he would be a deceiver, which would go against his very nature as a benevolent being.

So, the truth of Descartes’ clear and distinct perception that non-existence is an imperfection proves that God exists. The perfect goodness of God ensures that Descartes’ clear and distinct perception (that non-existence is an imperfection) is true. However, to prove that God exists, Descartes relies on the assumption that everything he perceives clearly and distinctly must be true. And, to justify this assumption, Descartes relies on God’s benevolence, and thus his existence. This is a circular argument: it is problematic because the truth of at least one of the premises relies on the truth of the conclusion.
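The circularity can be made concrete by treating each claim as a node and each justification as an arrow from a claim to the premises it rests on. What follows is only an illustrative sketch in Python, not something found in Descartes: the names `SUPPORTS`, `COGITO`, and `find_cycle` are hypothetical, and the wording of each claim is a paraphrase of the paragraphs above.

```python
# Each claim maps to the premises used to justify it (paraphrased from the text).
# These dictionaries are illustrative assumptions, not a formalization by Descartes.
SUPPORTS = {
    "God exists": [
        "God is a supremely perfect being (definition)",
        "clear and distinct perceptions are true",
    ],
    "clear and distinct perceptions are true": ["God is not a deceiver"],
    "God is not a deceiver": ["God exists"],
}

COGITO = {
    "my mind exists": ["I think"],
    "I think": ["I doubt"],
}


def find_cycle(claim, supports, path=()):
    """Return a list of claims forming a justification loop, or None if there is none."""
    if claim in path:
        # We came back to a claim we are already trying to justify.
        return list(path[path.index(claim):]) + [claim]
    for premise in supports.get(claim, []):
        cycle = find_cycle(premise, supports, path + (claim,))
        if cycle:
            return cycle
    return None


if __name__ == "__main__":
    print(find_cycle("God exists", SUPPORTS))
    print(find_cycle("my mind exists", COGITO))
```

Tracing the justification of “God exists” leads back to “God exists”, which is exactly the objection. Running the same check on the cogito finds no loop, since “I doubt” rests on nothing further in this sketch.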

The existence of such objections to Descartes’ ontological argument for the existence of God didn’t hurt the prospects of Descartes’ metaphysics because the community of the time already accepted God’s existence. This is not surprising because Christian theology was part of virtually every European mosaic.

Having established that God exists, Descartes then proceeds to prove the existence of the world around him. Descartes makes this argument in two steps: first, he shows that the world is external to, independent from, or not fabricated by his mind, and second, he shows that this external, mind-independent world is a material one.

To show that the external world is real and is not a figment of his imagination, Descartes invokes something called the involuntariness argument. He notices that his sensations come to his mind involuntarily, i.e. that he has no control over what exactly he perceives at any given moment. But, clearly, everything happening in his mind without the mind’s control should necessarily have its source outside his mind. Therefore, he clearly and distinctly perceives that something external to his mind exists. Since everything that he perceives clearly and distinctly must be true, it is the case that something external to his mind exists.

As an example, Descartes suggests that we feel heat whether or not we want to, i.e. we feel it involuntarily. When the feeling of heat is outside our control, we suspect that it is not a product of our mind but instead the product of something external to our mind, like a fire. Since it’s clear to us that sensations come from something outside the mind, then, it’s also clear that things outside the mind must exist. But because we know that God is not a deceiver, everything we perceive clearly and distinctly must be true. Therefore, there exists something other than the mind.

Descartes still must show that this external world, which is the source of our sensations, is material. Descartes takes it as a premise that we have a clear and distinct perception that external things are material. He next refers to God’s benevolence to show that external things are therefore actually material. Indeed, if the source of our sensations were not material, God would effectively be deceiving us by allowing for a clear and distinct idea of something that is not the case. Clearly this is impossible because God is benevolent and not a deceiver. Thus, Descartes eliminates both the possibility that God himself is causing his sensations and the possibility that something else, like an evil demon, is causing them. If external sensations were caused by either God or something else like an evil demon, then Descartes would require some God-granted faculty or capacity to recognize such causes. Imagine having Spiderman’s “spidey sense” for detecting danger, but for detecting God-caused or evil demon-caused sensations instead. This faculty would allow you to determine whether something you perceive is caused by God, an evil demon, or anything else immaterial. For instance, if, equipped with this “spidey sense”, you saw the Spiderman villain Green Goblin dancing on a table, you would be able to tell whether this sensation was caused by the actual material Green Goblin dancing on the table, or whether this sensation was produced by God or some other immaterial being. But we don’t have this “spidey sense”. Instead, Descartes suggests that God has given him a very different capacity, one that causes him to believe that his sensations are caused by material things. Again, if Descartes believed that his sensations were caused by material things when they were in fact not, then God would be a deceiver. Since God is benevolent and not a deceiver, the only possibility that remains for Descartes is that sensations are caused by the external material world, and therefore, the external, mind-independent, material world exists.

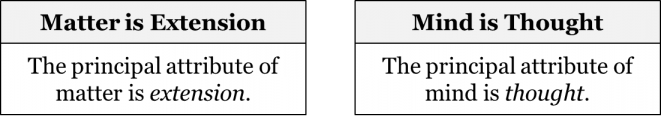

Now that Descartes has established the existence of both mind and matter, he can then establish what the indispensable properties of these two substances are. What are the qualities without which these substances are inconceivable?

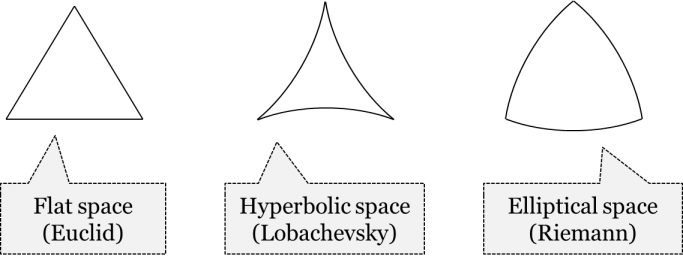

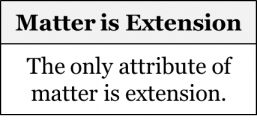

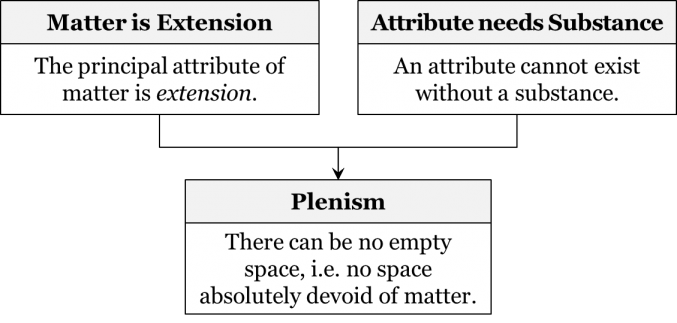

The indispensable property of mind is thought. For Descartes, it was impossible to conceive of a mind that lacked the capacity for thinking. What would it even mean for a mind to be non-thinking? The indispensable property of matter is extension, i.e. the capacity to occupy space. Just like a non-thinking mind, Descartes argues that it is impossible to imagine any material object that does not occupy some space. Does the mind occupy space? Can you pack it up in a box when moving apartments? Not at all, as the mind is an immaterial substance. Does a chair think? Nope. How about a table? Not at all. Chairs and tables merely occupy space, thus are extended substances, i.e. material.

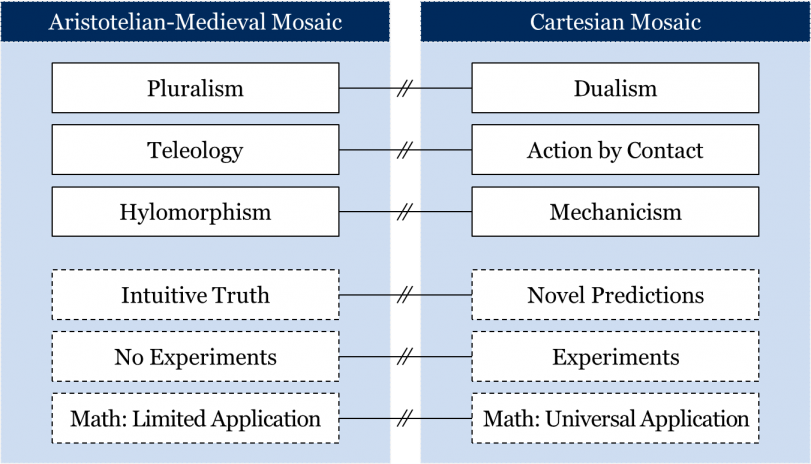

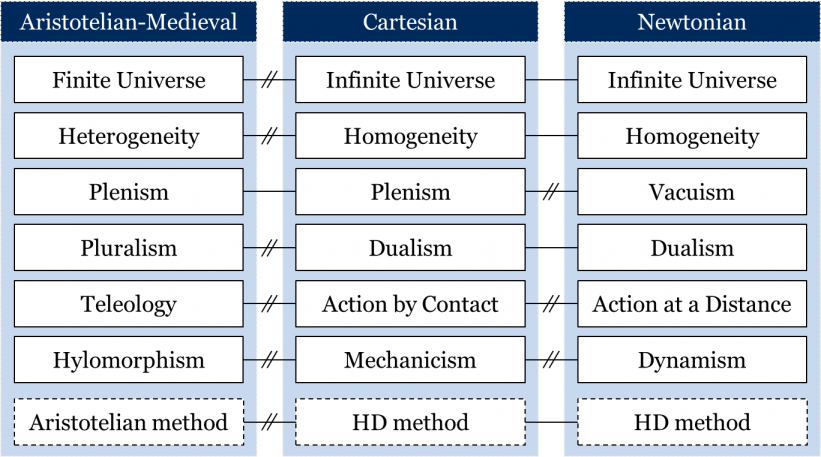

By identifying the indispensable properties of mind and matter, Descartes was strengthening the foundations of the Cartesian worldview. It is from these two fundamental principles that other important Cartesian metaphysical elements follow. Since metaphysical elements are central to distinguishing one worldview from another, we will now introduce some metaphysical elements from the Aristotelian-Medieval worldview alongside those of the Cartesian worldview. In doing so, we can more clearly understand the most significant differences between the two worldviews.

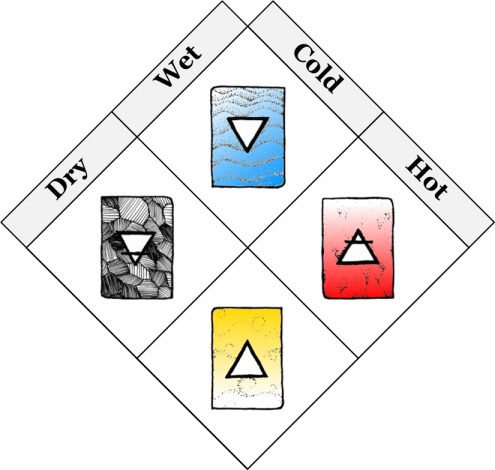

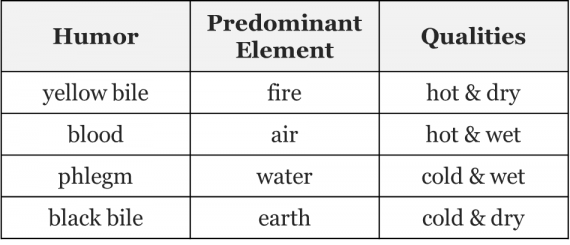

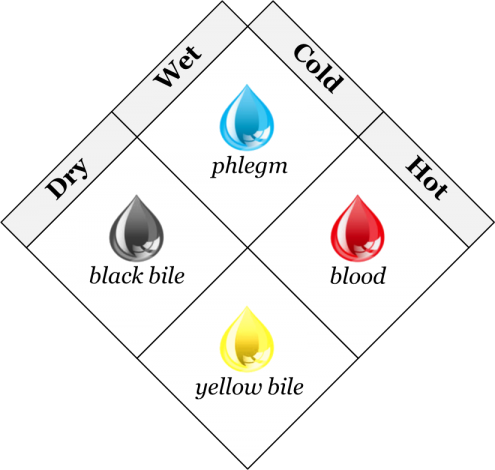

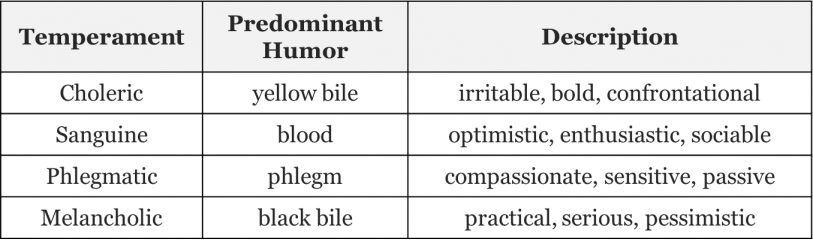

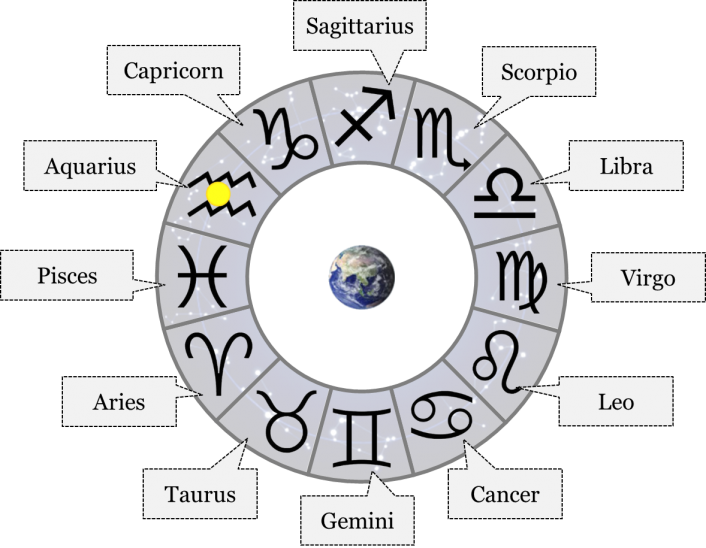

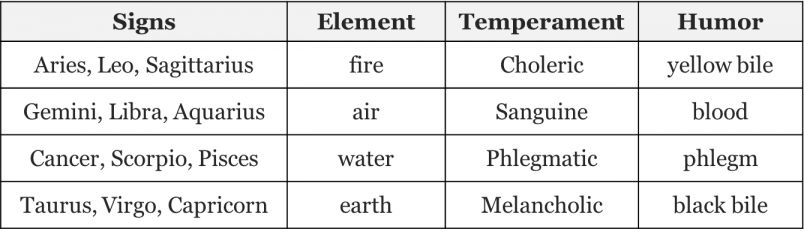

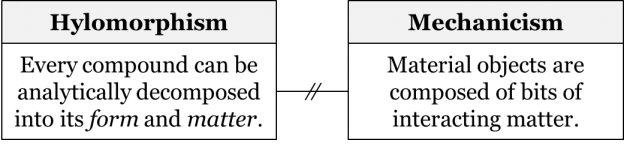

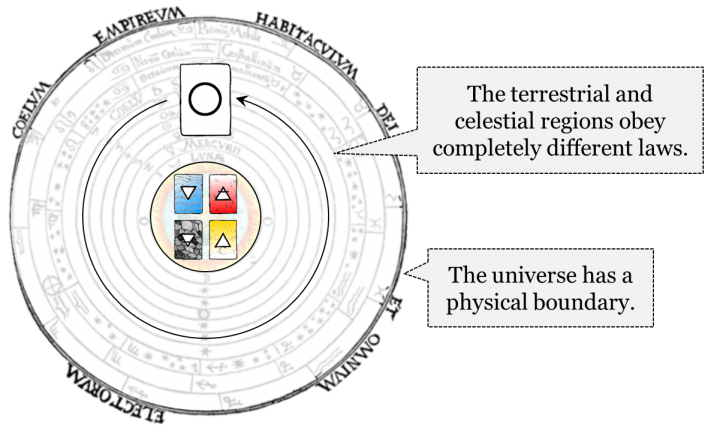

Aristotelians subscribed to an idea known as hylomorphism. Hylomorphism says that all substances can be analytically decomposed into matter (from the Greek word hyle meaning wood or matter) and form (from the Greek word morphē meaning form). We know what matter is. A form is essentially an organizing principle that differentiates one combination of matter from another. The idea behind hylomorphism is not that you can literally separate any substance into its constituent parts, like a salad dressing into oil and vinegar, but that, in principle or conceptually, any substance contains both matter and form. For instance, a human body is composed of both some combination of the four humors (its matter) and a soul (its form). For Aristotle, the soul is a principle of organization responsible for digestive, nutritive, and vegetative functions as well as thinking and feeling. It organizes the humors. Blood is composed of some combination of the four elements (its matter) along with what we might call “blood-ness” (its form). Similarly, a maple tree is composed of some combination of elements (presumably earth and water) and the form of “maple-tree-ness”, which organizes these elements into a maple tree.

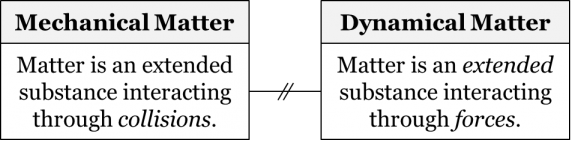

In the Cartesian worldview, the idea of hylomorphism was replaced by a new idea, mechanicism. Mechanicism says that, since the only attribute of matter is extension, all material things are composed solely of interacting material parts. Thus, every material thing could be understood as a mechanism of greater or lesser complexity. For instance, Descartes would describe the human body as a complex machine of levers, pulleys, and valves, or the heart as a furnace, causing the blood to expand and rush throughout the body. You might be wondering, what happened to the form of material things? Descartes actually doesn’t need forms. Since every material object could be explained in terms of the arrangement of extended matter in different ways, there was no need for a form to act as an organizing principle and do any arranging. The mere combination of constituent parts of a thing is sufficient, according to Descartes, to produce the organization and behaviour of that thing. Even the bodies of living organisms, including human bodies, could in principle be explained as complex arrangements of material parts. Digestion, development, emotions, instincts, reflexes and the like could therefore all be explained as the result of mechanical interactions alone. Descartes’ mechanicism was therefore in direct opposition to Aristotle’s hylomorphism.

Descartes mechanistically explained the physiological functions that Aristotle assigned to the soul. But he felt that the human capacity for reason and language, what Aristotle called the rational soul, could not be explained this way. Thus, Descartes supposed that the human mind, or soul, was completely immaterial. This leads us to the next key metaphysical element of the Cartesian worldview – dualism.

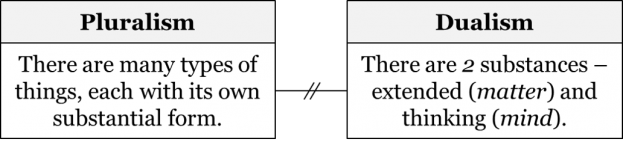

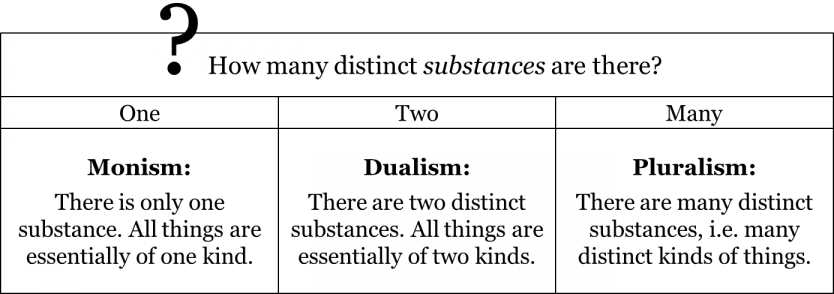

But in order to understand the idea of dualism, we should start from the Aristotelian stance on the number of substances, known as pluralism. According to Aristotelian pluralism, there are as many substances as there are types of things in the world. Since Aristotelians accepted that each type of thing had its own substantial form, for them there is a plurality of substances. Lions, tigers, and bears might all amount, materially, to being composed of some combination of the elements earth and water, but they are also substantially distinct through their forms of lion-ness, tiger-ness, and bear-ness, respectively.

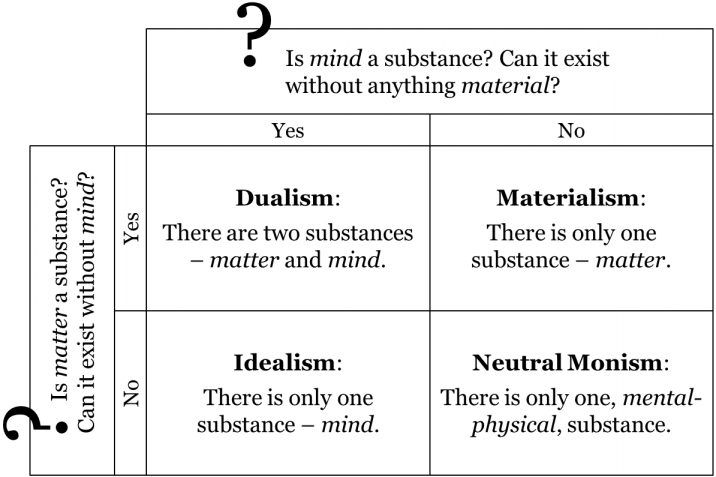

For Descartes, there is not a plurality of substances, but only two independent substances, matter and mind. This is the conception of dualism. Since Descartes also showed interest in sword fighting, you might call him a dualist and a duellist! Duelling aside, Descartes argued that extended, material substance is completely distinct from thinking substance. Among material things are those lions, tigers, and bears, as well as rocks, plants, and other animals. Thinking substances, on the other hand, included entities with minds but without extended bodies, entities like God and angels. Thus, his dualism was meant as a replacement for Aristotelian pluralism.

For Descartes, however, human beings remained a special case. While we are clearly material beings – having bodies extended in space – we also have the capacity for thought. Descartes felt that thought could not be explained mechanically, because all the machines known to him, such as clocks or pumps, inflexibly performed a single function, whereas human reason was a flexible, general-purpose instrument. As such, human beings were considered ‘citizens of two worlds’ because they clearly both have a mind and occupy space.

The final metaphysical elements of the Aristotelian-Medieval and Cartesian worldviews to be considered here are those dealing with changes in objects. Why do material objects change from one shape to another, as a tadpole changes into a frog? Why do material objects move from one place to another, as in a rising flame? More generally, why do objects move or change?

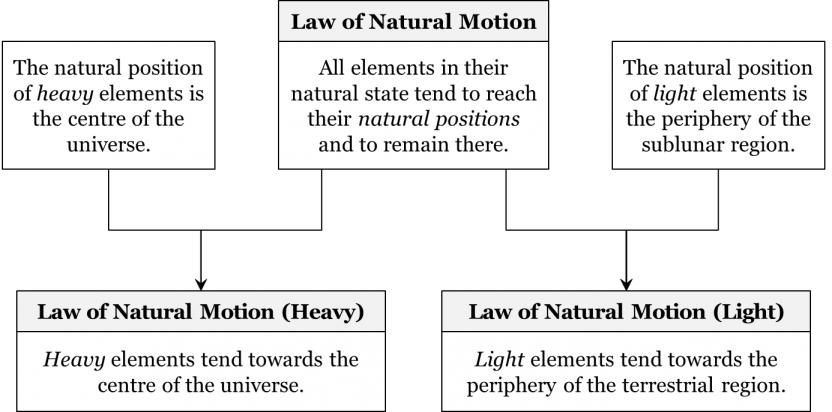

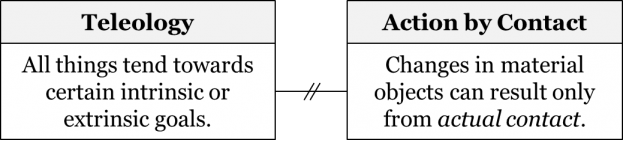

Aristotelians answered such questions with reference to the purpose, aim, or goal of these objects, also known as their final cause. Accordingly, they subscribed to the principle of teleology (from the Greek word telos meaning end, purpose, or goal), which says that all things tend towards certain intrinsic or extrinsic goals. According to Aristotelians, a frog changes from a tadpole to its amphibious state because it has an intrinsic or innate goal to live by water and land. A flame flickers upwards because of its intrinsic goal to move away from the centre of the universe. Some goals are also extrinsic, or human-imposed. A pendulum clock, for instance, shows the correct time because that is the goal imposed upon it by a clockmaker. Importantly, each goal is dictated by the substantial form of the object. So, a frog will never become an opera singer, for it lacks the substantial form of an opera singer.

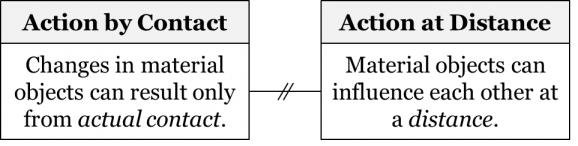

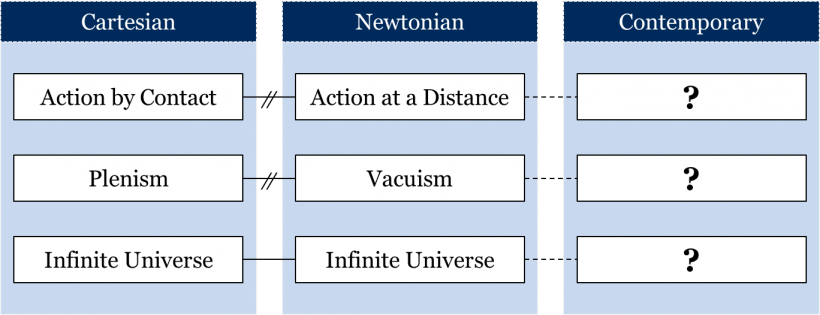

Cartesians, however, not only rejected the notion of a substantial form, but denied that goals, aims, or purposes played any part in the behaviour of material objects. For them, matter has only one primary attribute – extension. So how do extended, material substances move or change? In place of teleology, Cartesians accepted the principle of action by contact, believing that changes in material objects can only result from actual contact between bits of matter in motion. So, the tadpole becomes a frog following the mechanical rearrangement of bits of its constituent matter; the flame moves upward because bits of fire are colliding and exchanging places with the bits of air above it. Similarly, a pendulum clock is not driven by any extrinsic goals but shows the correct time merely due to the arrangement of its levers, cogs, springs, and other mechanical parts. Clearly, this idea of action by contact opposes the Aristotelian idea of teleology.

Let’s recap the key Cartesian metaphysical elements we’ve discussed so far in the chapter. For Cartesians, the principal attribute of mind is thought and of matter is extension. They also accepted the principles of mechanicism, that all material objects are composed of bits of interacting and extended matter; dualism, that there are two substances that populate the world – mind and matter; and action by contact, that any change in material objects only results from actual contact between moving bits of matter. These Cartesian principles replaced the Aristotelian principles of hylomorphism, pluralism, and teleology respectively.

If changes in material objects can only result from actual contact, then how might we make sense of changes in living organisms? To better understand this, we must consider the Cartesian view of physiology in more detail.

Cartesian Physiology

In the seventeenth century, a great interest was shown in the inner workings of the body and the physiology of living things. Slowly, anatomists and surgeons began questioning the humoral theory of the Aristotelian worldview. Though not an anatomist himself, Descartes, too, wrote about physiology in his Treatise on Man, describing the balance and imbalance of the humors in mechanical terms. In fact, Descartes viewed the human body as a machine of interconnected and moving parts, piloted by the human mind. Seeing the body as a machine makes even more sense when we consider the Cartesian principle of action by contact: again, if changes in material objects can only result from actual contact, then changes in living organisms must be caused by colliding bits of matter.

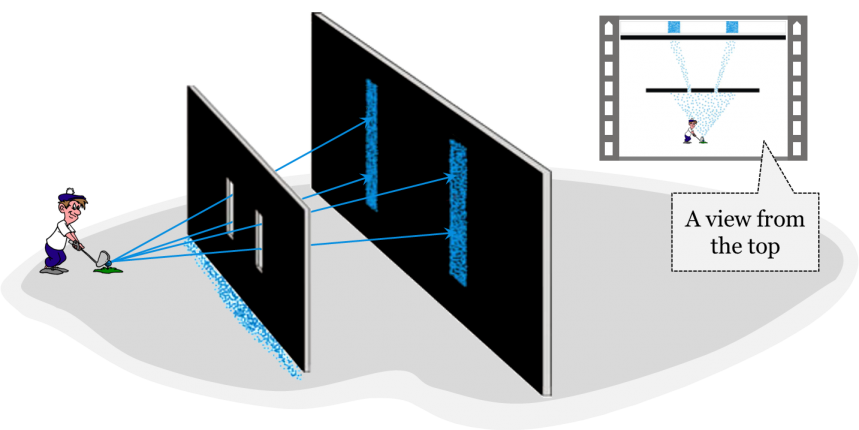

As an example, in an episode of the children’s cartoon The Magic School Bus, a very Cartesian view of the sense of smell is conveyed, although unintentionally so. In the episode, the class wants to better understand the olfactory system to figure out another student’s almost prize-winning concoction of scents. So, they shrink with their bus to a very, very tiny size, and fly up into the student’s nose. Once inside the nose, the class wants not only to smell what the student smells, but to see what she smells too. They all put on smell-a-vision goggles, which enable them to observe the particles of different types of smells float around and eventually land at specific spots inside the nose. Though the teacher, Ms. Frizzle, and her class were probably not really Cartesians, actual Cartesians would have accepted that smell works in a very similar way. They would have described tiny bits of matter, or the particles of a certain smell, landing somewhere inside the nose, and then mechanically transmitting that smell to the brain using tugs on a tiny thread running through the centre of the olfactory nerve. The same idea applies to other senses, like sight being the product of tiny bits of matter impressing on the eyes and then transmitting an image to the brain.

Cartesians also understood other organs in mechanical terms. Most obviously, they viewed the heart as a furnace, causing expanding heated blood to rush into the arteries and throughout the body. The blood was refined by its passage through blood vessels at the base of the brain into a fiery, airy fluid called animal spirits, which could flow outward through the nerves to the muscles. Note that this substance is simply a material fluid and has nothing to do with spirits in the spooky sense. Muscle contraction occurred when animal spirits flowed into a muscle and inflated it. Descartes even posited a system of valves to ensure that opposing pairs of muscles did not simultaneously contract.

If humans are citizens of two worlds, the worlds of matter and of mind, then how did Cartesians understand the interactions between these two separate substances? The problem is that, in order to interact, substances need to have some common ground. How can something immaterial, like the mind, affect something material, like the body, and vice versa? Surely there are no particles being transferred from the mind to the body because the mind doesn’t have any particles – it’s immaterial. For the same reason, the particles of the body cannot be transferred to the mind. Yet it is clear that the body and the mind act synchronously; there is a certain degree of coordination between the two. Consider the example of a contracting muscle. It is evident that we can control the bending of our arms; if you will your arm to move, it moves. Once the body receives a command from the mind to move, it proceeds purely mechanically: animal spirits flow through your nerves to your arm, and then cause the contraction of your biceps by inflating it. But how does a material body receive a command from an immaterial mind? In the Cartesian worldview, there wasn’t an accepted answer to this question. Instead, at least two major solutions were pursued – interactionism and parallelism.

According to interactionism, the mind and the body causally influence each other. It appears to be intuitively true that the two do in fact influence each other. After all, that’s what we seem to experience in everyday life. For Descartes, this interaction took place within the pineal gland: this is where the will of the mind was supposedly transferred into the motion of material particles. Movements of this tiny gland within the cavities of the brain were supposed to direct the flow of animal spirits accordingly. But Descartes’ contemporaries and even he himself came to realize that this doesn’t explain how the immaterial mind can possibly affect the material body. Since, according to their dualism, the two are supposed to be completely different substances – thinking and extended – the mechanics of their possible interaction remained a mystery.

The opposite view, called parallelism, suggested that the world of the mind and the world of matter exist parallel to one another, and there is no actual, causal interaction between them. They simply run in pure harmony with one another, like two synchronized clocks, which show the same time and appear as though there is coordination between them, while in reality, they’re merely preprogrammed to show the right time. But how could two distinct substances act synchronously? According to one version of parallelism, this harmony between the two substances was pre-established by God, like the time on two different clocks being pre-established by a clockmaker. One problem with parallelism, which kept it a pursued rather than an accepted theory, was that it seemingly denied the existence of free will because it presupposed that both matter and mind are preprogrammed.

Since both of these views had serious drawbacks, there was no accepted Cartesian answer to the problem of seeming body-mind interaction. Yet, this didn’t dissuade Cartesians from their dualism. A few mechanicists, like the French physician Julien Offray de La Mettrie, were willing to question Descartes’ dualism and countenance the possibility that the mind itself was mechanical. La Mettrie’s argument for a mechanical mind was simple. How could mental processes like reason and language be affected by physical causes, like a fever or getting drunk, if the mind itself wasn’t a physical mechanism? But a mechanical view of the mind didn’t become accepted until the twentieth century, when the advent of computers and neural network simulations made it possible to imagine how the mind could be a product of material processes. In the context of the Cartesian mosaic, mind-body dualism remained one of the central elements along with the ideas of mechanicism and action by contact. Cartesians believed that all material phenomena are explicable in terms of material parts interacting through actual contact.

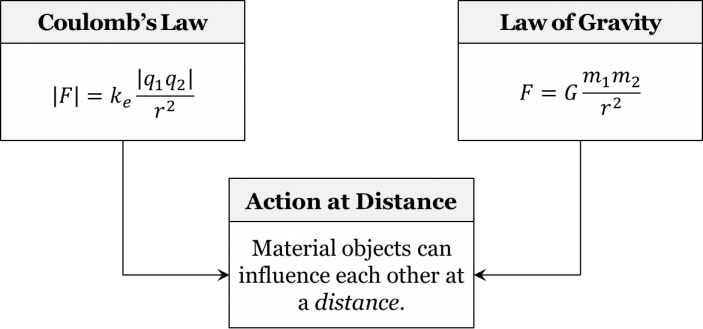

But is it possible to explain all phenomena through this mechanistic approach? While many material objects, like clocks, and analogous parts of the human body, like beating hearts and moving arms, can be readily explained as mechanisms working through action by contact, it’s not quite clear that all material processes are amenable to such a mechanistic explanation. For instance, how can such phenomena as gravity or magnetism be explained mechanistically? After all, two magnets seem to attract or repel each other without actually touching. To appreciate the Cartesian explanation of gravity and magnetism, we need a better understanding of Cartesian physics and cosmology.

Cartesian Physics and Cosmology

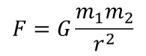

In his own times, Descartes’ writings formed an integrated system of thought. Among modern philosophers, Descartes is well-known for his epistemological and metaphysical writings, but his complex and ingenious physical and cosmological theories, which were an equally important part of his system, are known only to historians and philosophers of science. Foremost among his physical theories are his laws of motion, first set out in 1644 in his Principles of Philosophy.

Recall that the only capacity of material objects is to occupy space, i.e. to be extended, and that interactions between extended substances necessarily occur by contact. From the principle of action by contact, we can deduce Descartes’ first law of motion. The law states:

Every bit of matter maintains its state of rest or motion unless it collides with some other bit of matter.

The metaphor of billiard balls is most apt for appreciating Descartes’ first law. A billiard ball will stand still on a table up until the point that it is knocked in some direction by another billiard ball. Once knocked, the ball will continue moving until it hits another billiard ball or the edge of the table. According to Descartes, the same principle applies to all matter: every bit of matter stands still or continues moving until it contacts some other bit of matter.

Descartes’ second law of motion builds on the first. It states:

Every bit of matter, regarded by itself, tends to continue moving only along straight lines.

The law suggests that the unobstructed motion of a material object will always be rectilinear. A billiard ball, for instance, continues to move along a straight line until it reaches an obstacle. When it finally reaches the obstacle, it changes its direction, but then continues in another straight line. Compare this with a stone in a sling. As you swing the stone around, its motion follows a circular path because it is being obstructed by the sling, i.e. it is not regarded by itself. This is the gist of Descartes’ second law.

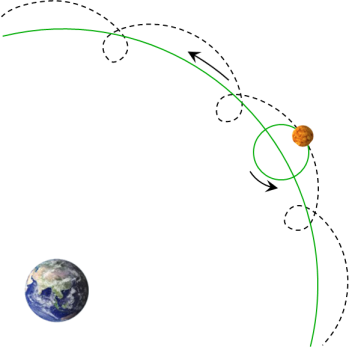

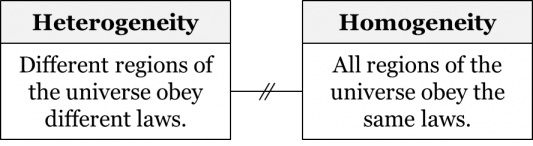

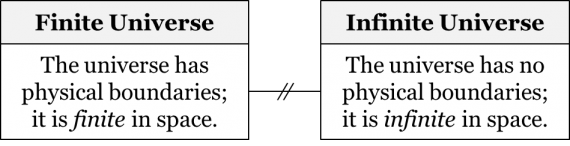

A question arises: what about those numerous instances of non-rectilinear motion that we come across every day? For Cartesians, the Moon was made of the same sort of matter as things on Earth, yet it travelled in a roughly circular path around the Earth. And what about the parabolic motion of a projectile? There doesn’t seem to be any obstruction in either case, and yet the motion is far from rectilinear. To answer this question, we need to appreciate the Cartesian take on gravity.

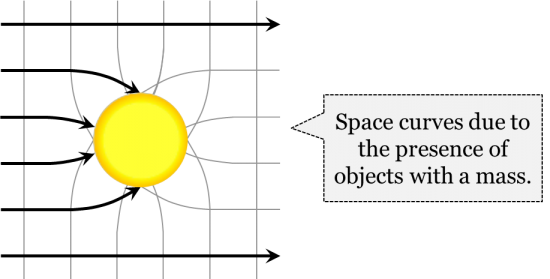

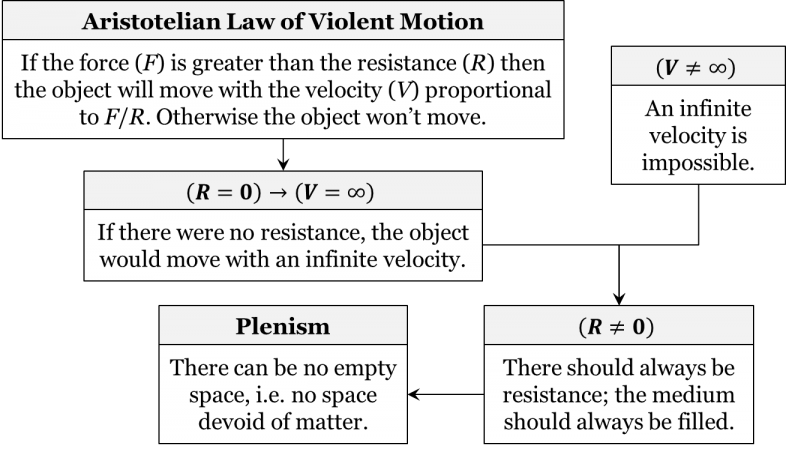

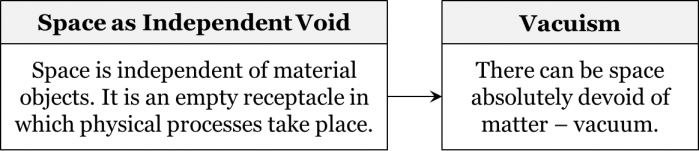

If extension is the attribute of matter, then, according to Descartes, extension cannot exist independently of material things. Thus, for Cartesians there is no such thing as empty space. Rather, Cartesians accepted the principle of plenism (from the Latin plenum, meaning “full”), according to which the world is full and contains no emptiness. In this sense, a seemingly empty bedroom – one without a bed, desk, chair, dresser, or posters of Taylor Swift – is not, in fact, empty; it is still full of invisible bits of matter, like those of air. In fact, the entire universe is full of matter, according to Cartesians. So, the seemingly empty space between the Moon and the Earth, and between the planets and stars, is not actually nothing; it’s a whole lot of invisible matter. Descartes’ reasoning for this stance is that space is not a separate substance; it is an attribute of matter. But an attribute (a property, a quality) cannot exist without a certain thing of which it is an attribute. For instance, redness cannot exist on its own unattached to anything red; it necessarily presupposes something red. Similarly, extension or space cannot exist without anything material, because extension is just an attribute of matter.

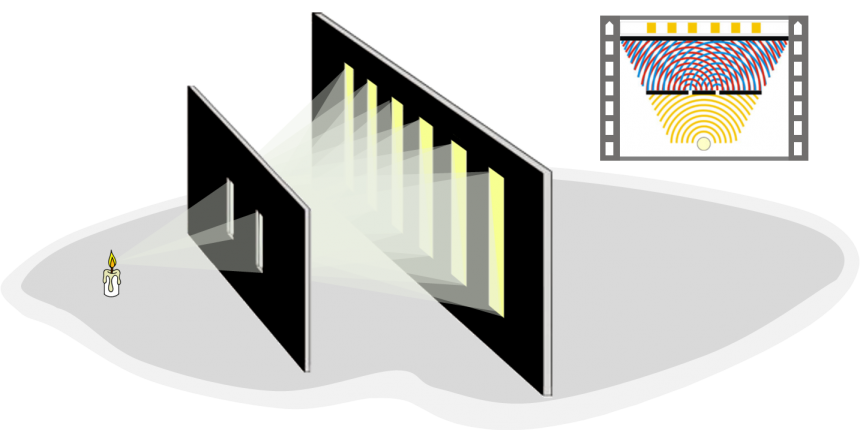

Since the universe is full, i.e. it is a plenum, the ideal of unobstructed, rectilinear motion described by Descartes’ second law becomes an unlikely occurrence. In practice, all motion turns out to be an interchange of positions, that is, all motion is essentially circular. Imagine two scenarios involving particles. In the first, empty space does exist. If a particle moves in this scenario, it can move in a straight line into some empty space and would leave a new empty space in its place. In the second scenario, however, empty space does not exist. If a particle moves in this plenum, it is blocked by all of the particles around it. It is also impossible for this particle to leave any empty space upon vacating its initial location. Its only option is to exchange places with the particles that surround it. No particle can pass through another, as they are all extended, so they do a little dance, spinning around each other until they occupy their new places. For Cartesians, motion along a straight line is what a bit of matter would do if it wasn’t obstructed. But the world being a plenum means that matter is always obstructed, and that actual motion always ends up being that of circular displacement.

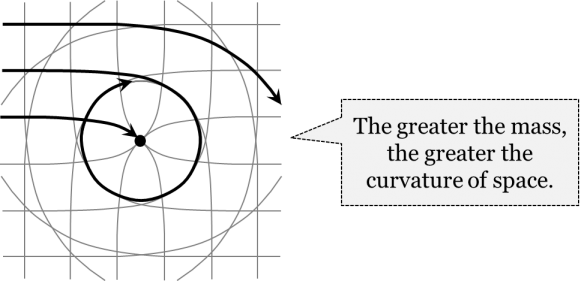

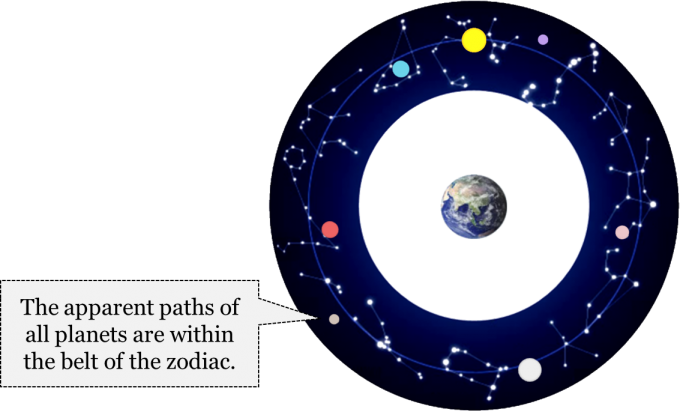

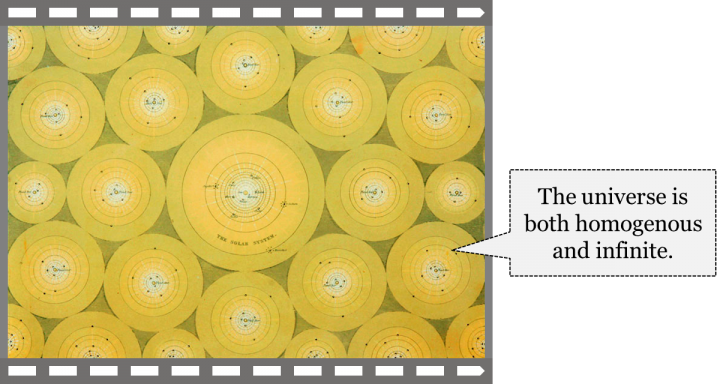

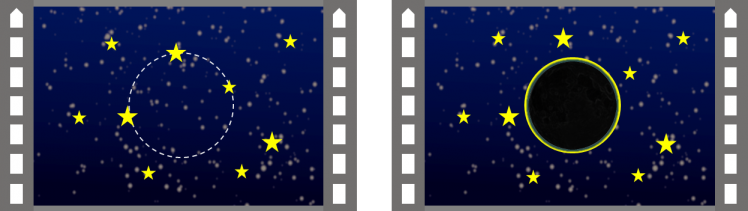

The idea that all motion is ultimately circular is the basic principle underlying the Cartesians’ so-called vortex theory. Among many phenomena, this theory explains gravity, the revolution of moons and planets, and even the motion of comets. In brief, vortex theory says that every planet is lodged in the centre of its own vortex of visible and invisible matter, and that each of these vortices is, in turn, carried about in an even greater vortex centred on the Sun. But the complexities of the theory warrant a closer look.

According to Cartesians, every star, like our Sun, revolves around its own axis. This revolution creates a whirlpool of mostly invisible particles around the star. As these particles are being carried around the star in this giant whirlpool, or vortex, they gradually stratify into bands, some of which later form into planets also carried by the vortex around the star. But because each of these planets can also rotate around its own axis, it creates its own smaller vortex. This smaller vortex can carry the satellites of a planet, which is how Cartesians explain the revolution of the Moon around the Earth, or Jupiter’s moons around Jupiter. The idea of vortices, for Cartesians, could potentially be extended beyond solar systems; there might be even greater vortices that carry individual stellar vortices. In sum, for Cartesians, the whole universe is like a huge whirlpool that carries smaller whirlpools that carry even smaller whirlpools, ad infinitum.

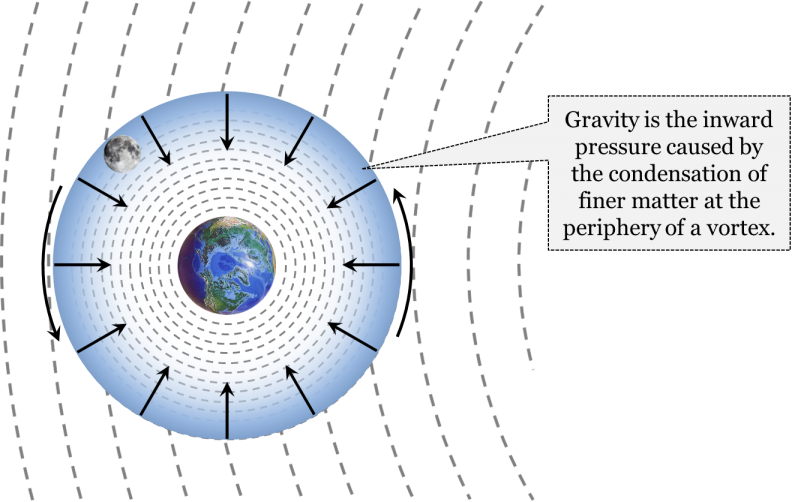

This vortex theory provides a simple mechanistic explanation of gravity. Cartesians accepted that the Earth stood in the centre of a vortex whose periphery extended to just beyond the Moon. So, between the surface of the Earth and the far side of the Moon are layer after layer of invisible bits of matter. Now, what happens to all of that matter when the Earth rotates around its axis? Every bit of matter is carried away from, or flees, the centre of the vortex. That is, the rotating Earth generates a centrifugal force. In effect, the innermost layer of invisible particles is constantly being pushed towards the periphery of the vortex. But the inner layers cannot leave any voids as they move away from the centre, since according to Cartesians there is no empty space. Since there is also no empty space beyond the terrestrial vortex, the particles flying away from the Earth due to centrifugal force eventually accumulate at the periphery of the vortex, and thus create an inward pressure. Cartesians reasoned that it is only the lightest bits of matter that actually make it to the edge of the vortex. Meanwhile, the inward pressure pushes heavier bits of matter towards the centre of the vortex. According to Cartesians, this inward pressure is gravity. In essence, Cartesians described gravity mechanistically in terms of the inward pressure caused by the displacement of heavy matter, which is itself a result of the centrifugal force generated by a rotating planet at the centre of a vortex.

As an analogy, consider a bucket of water with a handful of sand in it. As you begin stirring the water inside the bucket, you notice the grains of sand being carried around the bucket by the whirlpool you’ve created. Initially, the grains of sand are spread throughout the bucket. Gradually, however, they begin to collect in the centre of the whirlpool, being displaced by the finer particles of water along the periphery. This is similar to what happens in any Cartesian vortex.

Of course, not all matter is moving back and forth through the ebb and flow of a vortex. Very noticeably, the Moon seems to maintain an average distance from the centre of the terrestrial vortex, while revolving around it. Descartes explained that the Moon was in a position of relative equilibrium between the centrifugal force created by a rotating Earth and the inward pressure created by the accumulation of matter at the periphery of the vortex. He extended the same logic to the Earth’s steady distance from the Sun.

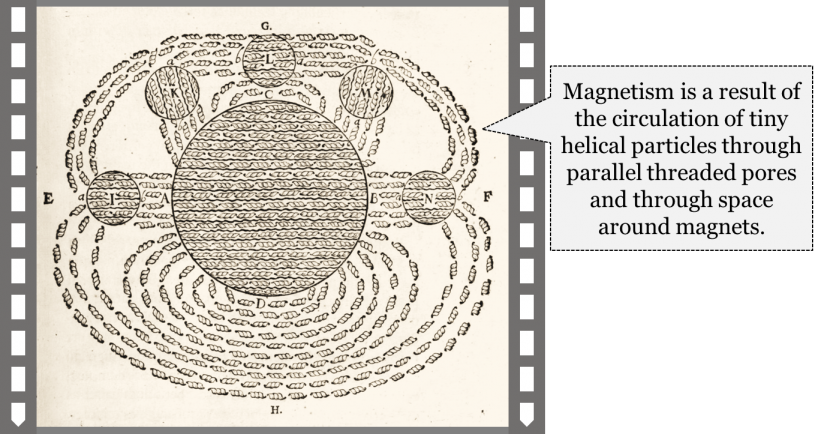

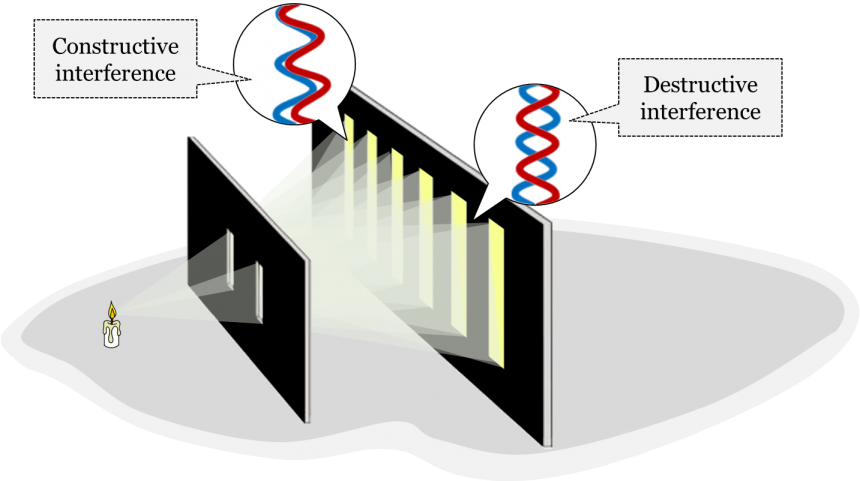

Another natural phenomenon seems to challenge the mechanistic worldview of the Cartesians: magnetism. Seventeenth- and eighteenth-century physicists had observed and experimented with magnetic materials like lodestones for quite some time. They commonly placed iron filings around a lodestone to reveal its magnetic effects. At face value, magnets seem to affect iron at a distance, without any actual contact. So how did Cartesians account for this phenomenon?

The Cartesian explanation of magnetism starts with the idea that there are numerous parallel pores running between the north and south poles of any magnet. Let’s take the Earth as an example. Imagine parallel tunnels running straight through the Earth, but tunnels so tiny that they’re invisible to the naked eye. Each and every one of these tunnels or pores is corkscrew-shaped, and constantly passing through them is a stream of invisible, also corkscrew-shaped particles. The stream of particles runs in both directions: straight from the North to the South Pole, then out and around the Earth back up to enter from the North Pole again, and vice versa.

Cartesians accepted the continuous flow of these corkscrew-shaped particles as the mechanistic cause of magnetic effects. Were Descartes to take, for instance, a lodestone, and observe that it turns to align with the Earth in a certain way, he would claim that the lodestone behaves like a magnet because it has the same corkscrew-shaped pores as the Earth. For Cartesians, the aligning of the lodestone with the Earth is in reality the turning of the lodestone until the corkscrew-shaped particles can flow smoothly through both the Earth and the lodestone.

Descartes explained numerous observations of magnetic phenomena with this mechanistic idea. Let’s take the attraction, and then the repulsion, between two magnets as examples. For Descartes, two magnets with opposite poles aligned would attract one another for a couple of reasons. First, since the magnets were already emanating corkscrew particles in the same direction, it was easy enough for their streams of corkscrew particles to merge, one with the other. Second, as soon as both magnets shared the same stream of corkscrew particles, those particles would displace any air particles from between the magnets, swiftly pushing the magnets even closer together.

The like poles of two magnets repelled one another for a couple of reasons as well. First, the opposing streams of corkscrew particles pushed the magnets in opposite directions, like two hoses spewing water at each other. Second, Descartes suggested that the pores running through each magnet also contained one-way valves or flaps, which opened if the corkscrew particles passed through the pore in the right direction but closed if not. Magnets repelled when the corkscrew particles met a closed valve and pushed the magnet away.

In summary, Cartesians accepted the following physical and cosmological theories. They accepted Descartes’ first and second laws of motion, which state, respectively, that matter maintains a state of rest or motion unless it collides with some other bit of matter, and that it moves along a straight line when unobstructed. They accepted the metaphysical principle of plenism: since space is an attribute of matter, there is no such thing as empty space. Since the universe is full, or a plenum, unobstructed motion becomes unrealistic, meaning that all motion is, in practice, circular motion. Finally, the Cartesian views of gravity and magnetism were necessarily mechanistic, explaining the two as the products of vortices and corkscrew particles respectively.

Cartesian Method

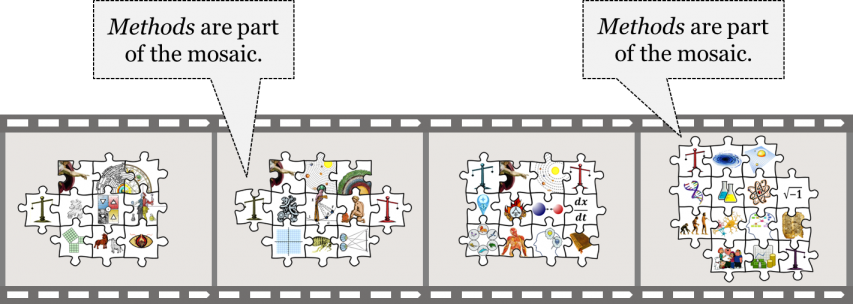

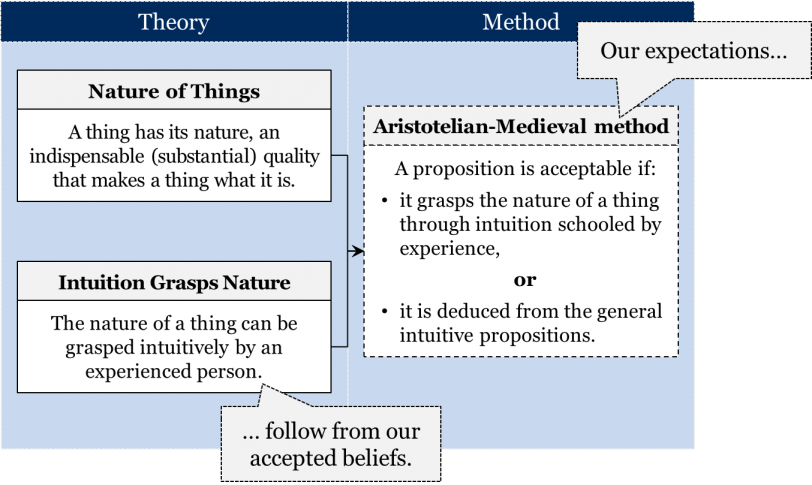

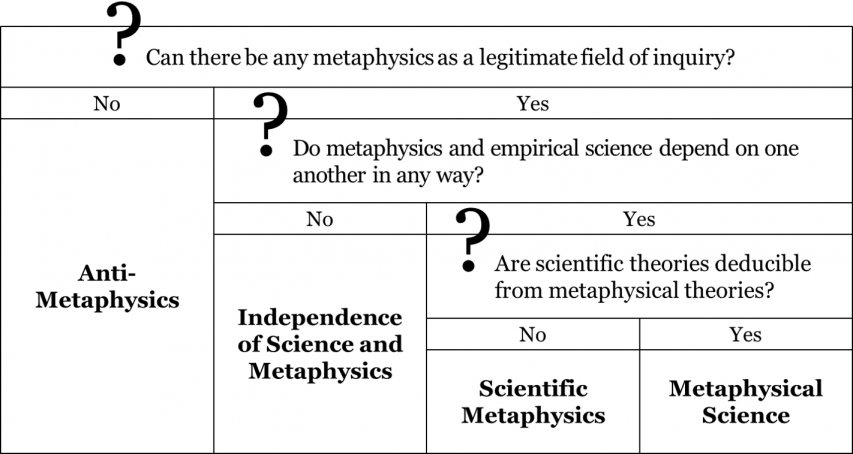

Up until this point, we’ve gone over numerous metaphysical assumptions and accepted theories of the Cartesian worldview. What remains is to look at the methods Cartesians employed to evaluate their theories.

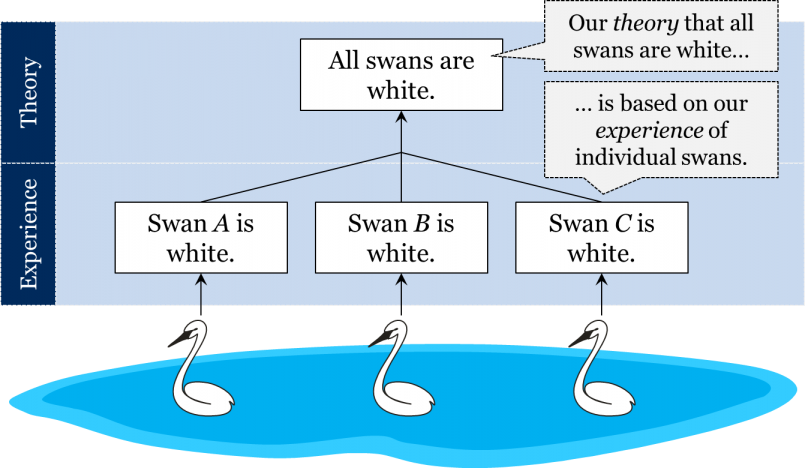

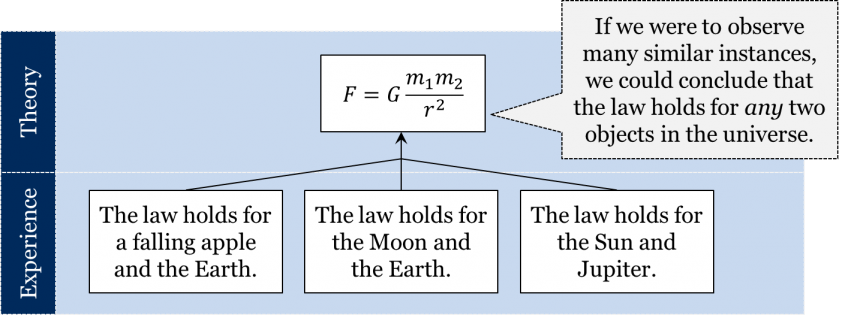

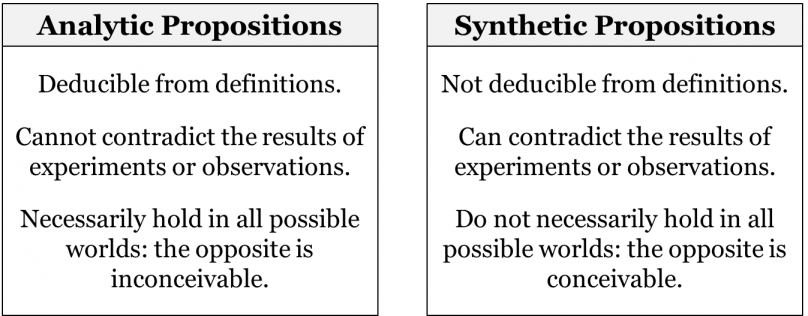

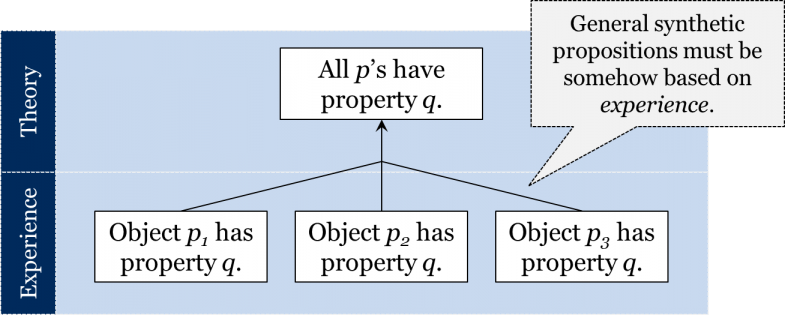

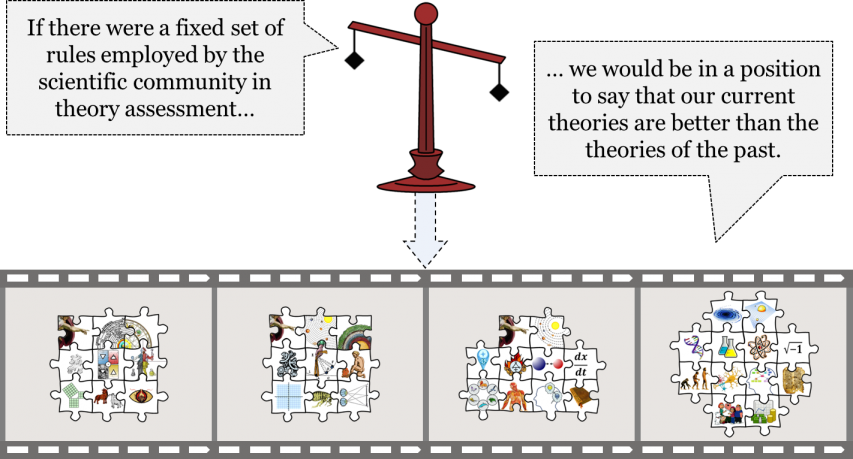

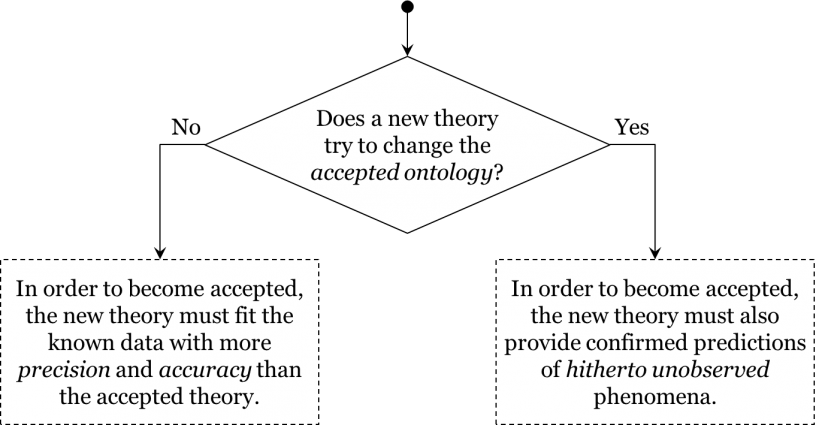

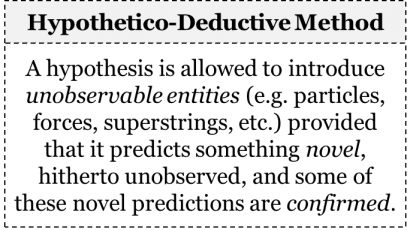

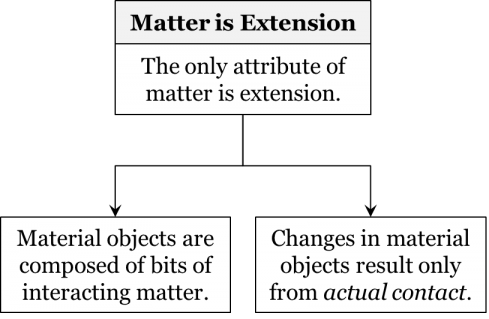

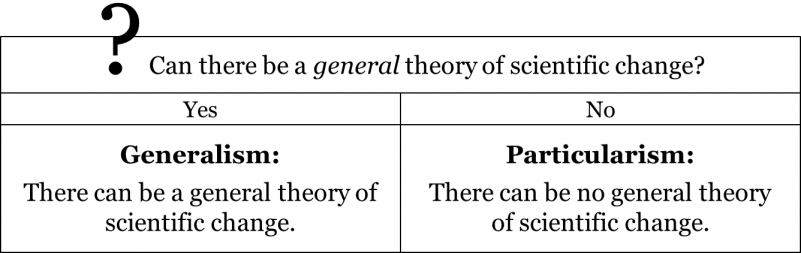

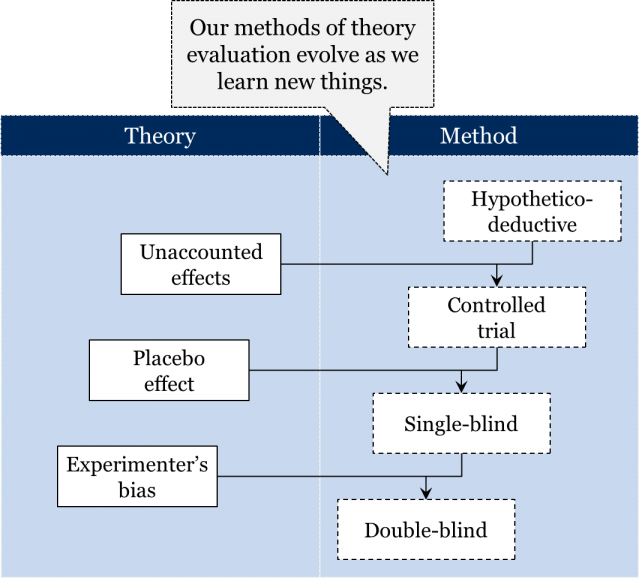

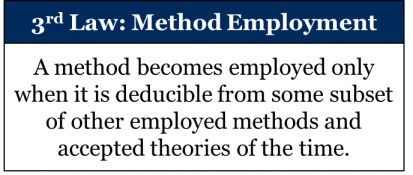

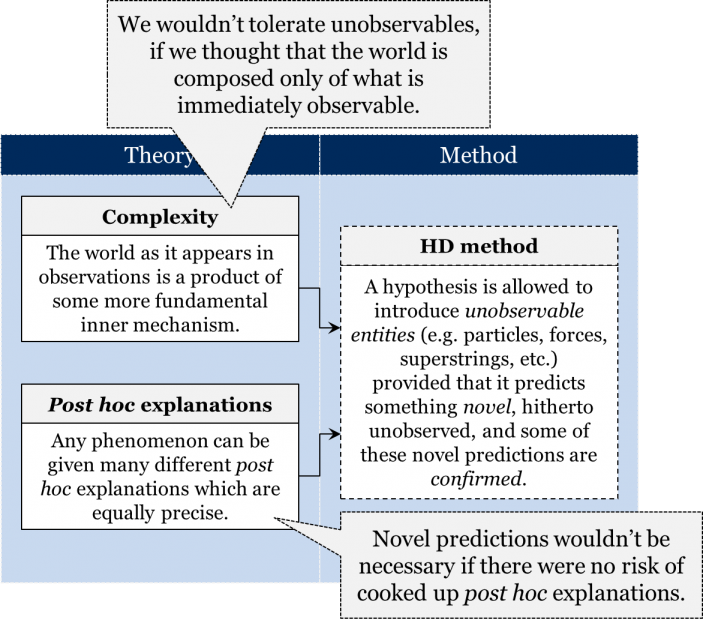

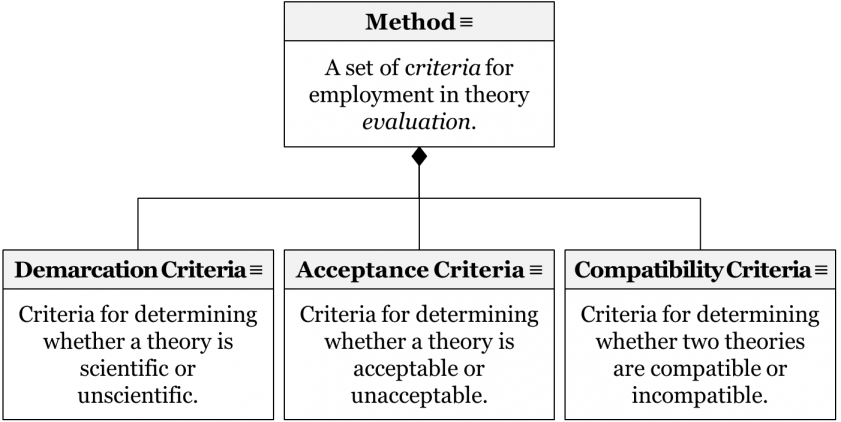

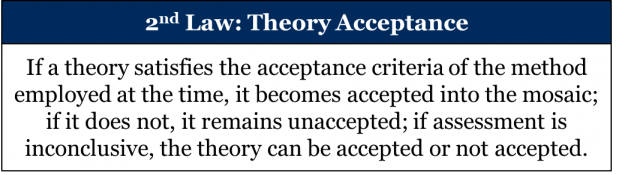

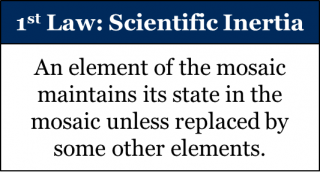

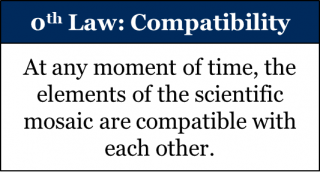

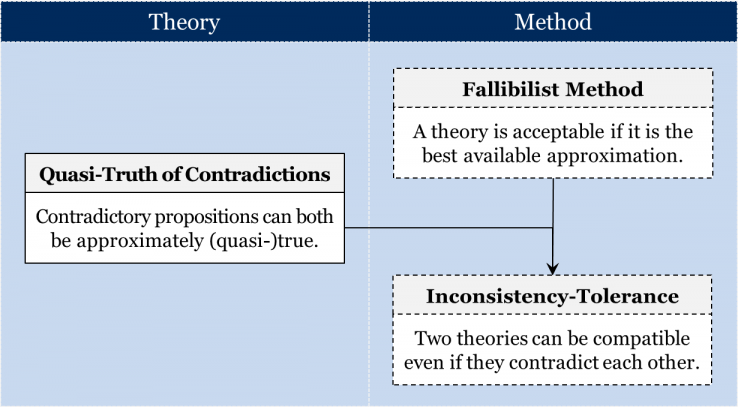

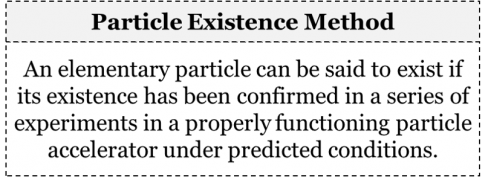

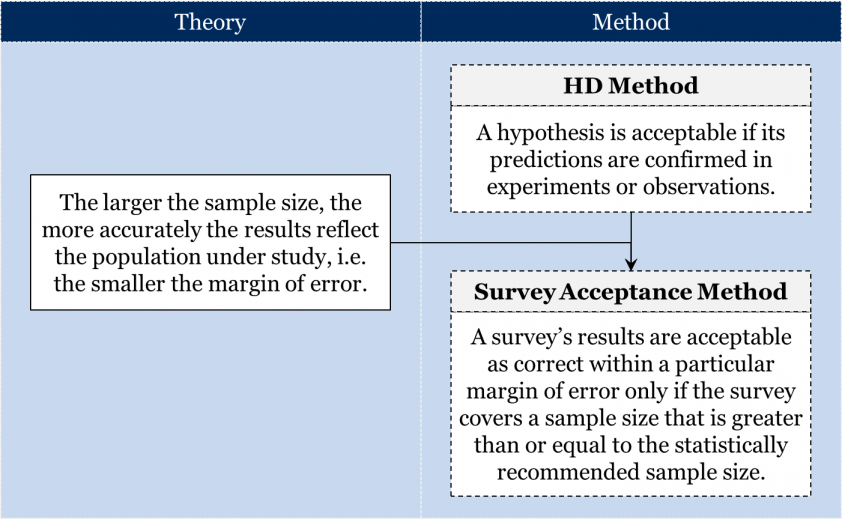

We first discussed the Cartesians’ transition from the Aristotelian-Medieval (AM) method to the hypothetico-deductive (HD) method in chapter 4, but let’s quickly review. Two important ideas follow, or are deducible, from the Cartesian belief that the principal attribute of matter is extension. One, any phenomenon can be produced by an infinite number of different arrangements of bits of extended matter. This first deduction opens the door to the possibility of post-hoc explanations. Two, all other attributes or qualities of matter, like colour, taste, or weight, are secondary attributes that result from different arrangements of bits of matter. This second deduction implies that the world is far more complex than it appears in observations. Both of these ideas, the principles of post-hoc explanations and complexity, became accepted as part of the Cartesian worldview, which satisfied the requirements of the AM method. The acceptance of the Cartesian worldview resulted in a change to the method by which theories were evaluated. By the third law of scientific change, the HD method became employed because it is a deductive consequence of the principles of post-hoc explanations and complexity.

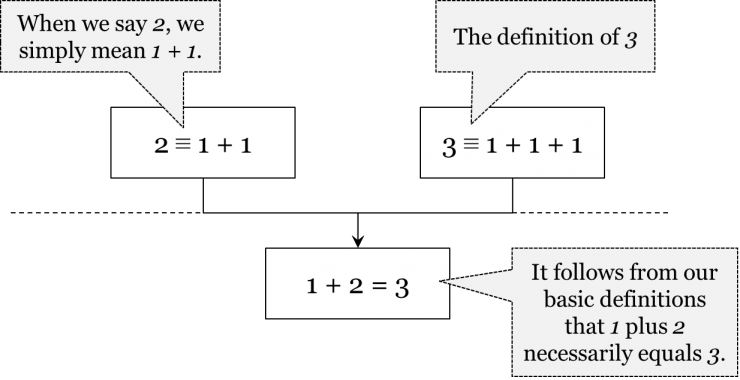

While Cartesians did reject the AM method as the universal method of science, they continued to employ a method of intuition in certain cases. Specifically, Cartesians continued to expect intuitive truths in formal sciences, like logic and mathematics, and when identifying their general metaphysical principles. For example, the existence of the mind was accepted because it was shown to be intuitively true by means of Descartes’ “I think, therefore I am”. Similarly, the principle that matter is extension was accepted because it also appeared to be intuitively true.

In the Cartesian worldview, the method of intuition can only reveal so much: it can only help unearth the most general characteristics of mind and matter, including the principles of logic, mathematics and metaphysics. But when it came to the explanations of more specific phenomena, the method of intuition was not thought to apply. Instead, the requirements of the HD method were employed when evaluating the explanations of specific phenomena.

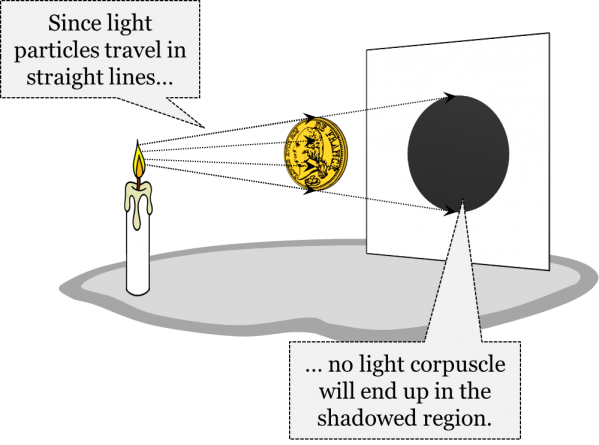

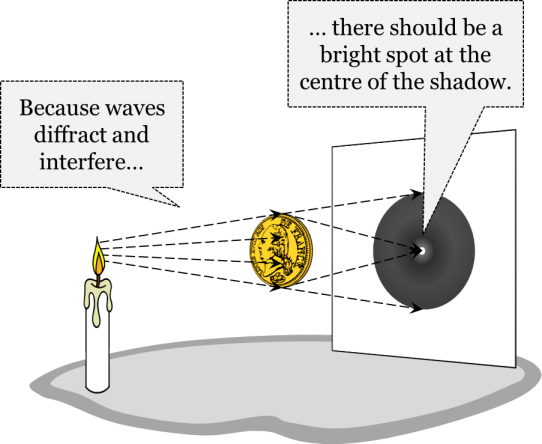

Thus, any hypothesis about the inner structure of this or that process would require a confirmed novel prediction. Again, this is because any phenomenon can be explained by hypothesizing very different internal structures. For instance, the Cartesian theories of gravity or magnetism, with their not-so-intuitive vortices and corkscrew particles, would be unlikely to satisfy the requirements of the method of intuitive truth. Instead, their acceptance in the Cartesian worldview depended on the ability of these theories to successfully predict observable phenomena.

But the requirement for confirmed novel predictions was only one of the requirements of the time. There were additional requirements that theories in different fields were expected to satisfy. These distinct requirements have to do with the underlying assumptions of their respective worldviews. Let’s consider three of them.

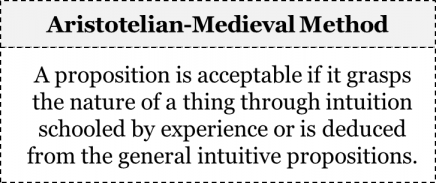

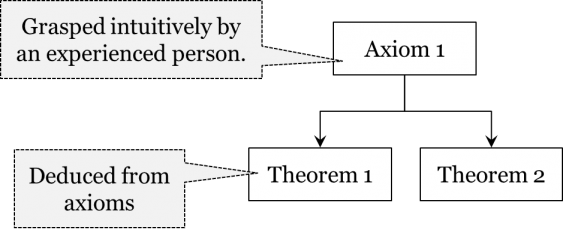

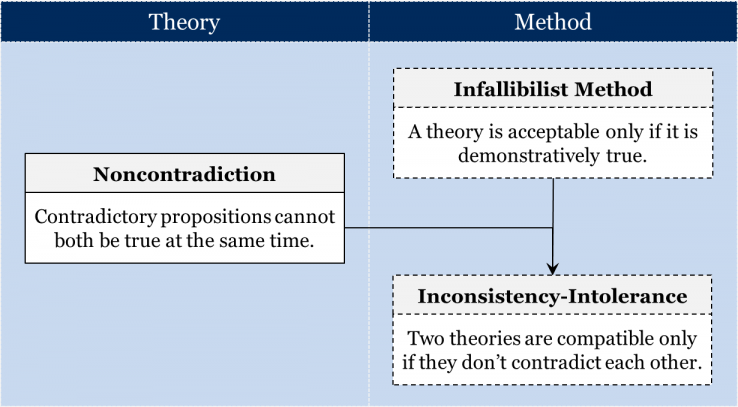

We know from chapter 7 that Aristotelians accepted that every non-artificial thing has its own nature – some indispensable quality that makes a thing what it is. This belief shaped one of the requirements of the AM method – namely, the requirement that any genuine explanation must reveal a thing’s nature.

Cartesians, however, accepted a different fundamental assumption about the world. They accepted instead that every material thing is essentially akin to a mechanism, albeit often very complex. Thus, they required that any genuine explanation be a mechanical one. That is, Cartesians employed a mechanical explanations-only method. Consequently, even those phenomena which seemed non-mechanical and suggested the possibility of action at a distance would be considered mechanical in reality.

Say a Cartesian at home alone observes a door slam shut on its own. She did not witness the mechanism by which the door shut: there was no other person present, nor any gust of wind. Still, as a good Cartesian she will only accept that some mechanical process led the door to close, lest she undermine the fundamental assumptions of her worldview.

There’s an important caveat to the Cartesian requirement for mechanical explanations: it only requires mechanical explanations for material processes. As dualists, Cartesians would have a different method for mind-processes, or thoughts. Since thought is the principal attribute of mind, and since the fundamental science of thought is logic, then for Cartesians any explanation of mind-processes must be logical and rational.

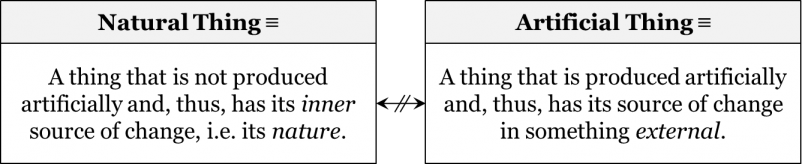

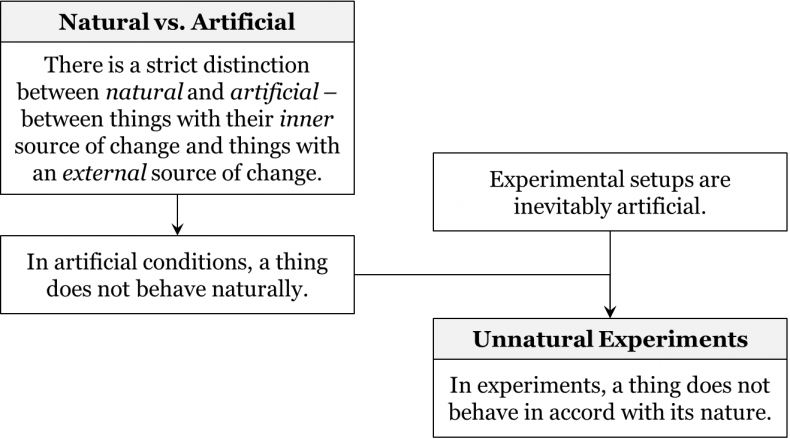

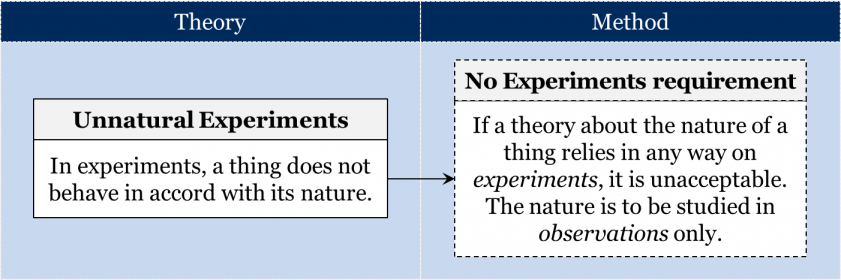

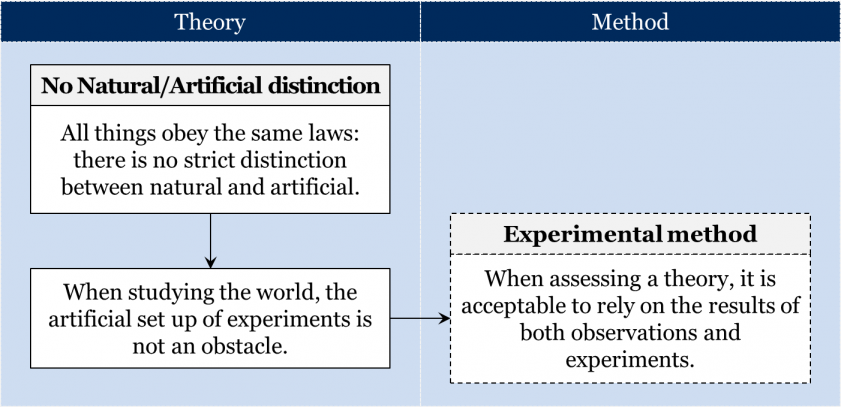

Additionally, Aristotelians clearly distinguished between natural things and artificial things. A natural thing, for Aristotelians, was a thing with an inner source of change. Anything not made by humans would be natural, as it would have a certain nature which would be the internal source of change for that object. For instance, a rock’s quality of heaviness would be its inner source of change that would naturally direct the rock towards the centre of the universe. Similarly, an acorn’s tendency to grow into an oak tree would be its inner source of change. Artificial things, on the other hand, are guided by something external to them, and don’t have an inner source of change. A ship travels from port to port due to the commands of the captain and the actions of the crew. A clock shows the right time because it has been so constructed by its maker. Because natural and artificial things have very distinct sources of change, explanations of the two would have to be very different. This is the reason why Aristotelians didn’t think that the results of experiments could reveal anything about the nature of a thing under study. Experiments, by definition, presuppose an artificial setup. But since the task is to uncover the nature of a thing, studying that thing in artificial circumstances wouldn’t make any sense, according to Aristotelians. You can certainly lock a bird in a cage, but that won’t tell you anything about the nature of the bird. Thus, the proper way of studying natural phenomena, according to Aristotelians, was through observations only.

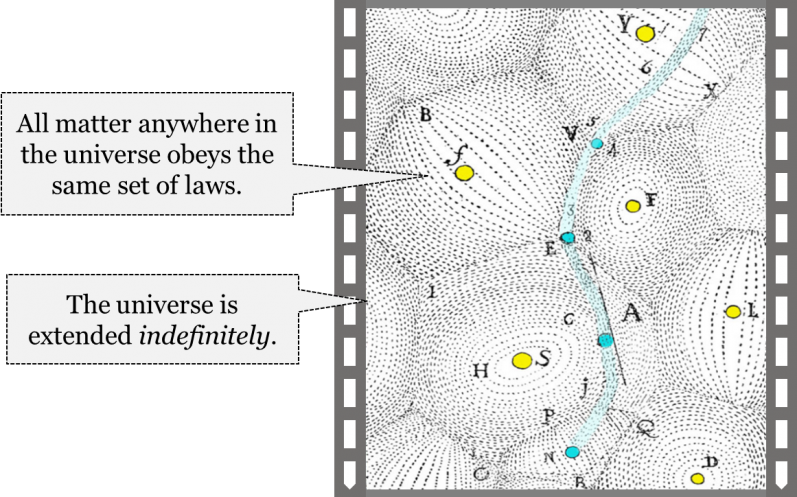

In contrast, Cartesians rejected the distinction between natural and artificial things, and, consequently, were very interested in experiments. For them, every material thing is merely a combination of interacting bits of matter. Therefore, both artificial and natural things obey exactly the same set of laws. Since one aim of science is to explain the mechanism by which these bits of matter interact, it is permissible to rely on experiments when uncovering these mechanisms. In other words, Cartesians employed an experimental method, which states that when assessing a theory, it is acceptable to rely on the results of both experiments and observations.

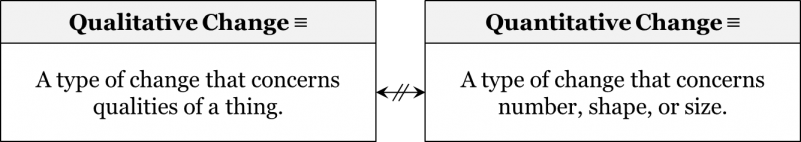

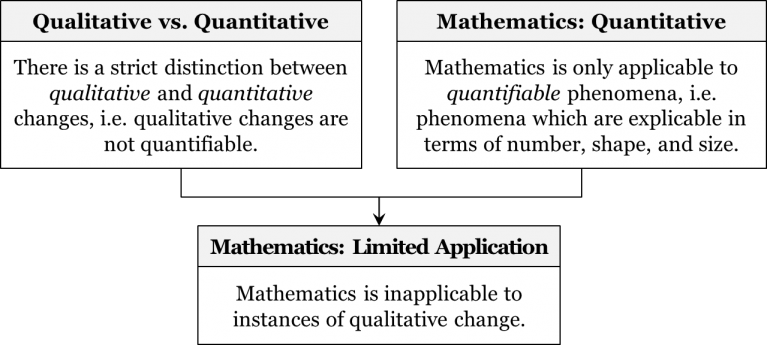

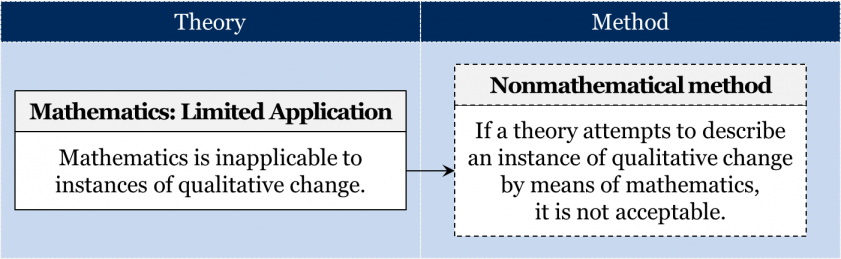

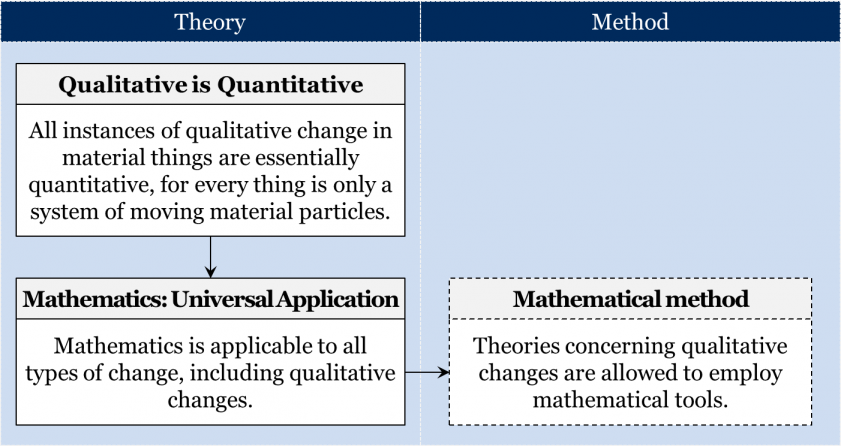

Another important distinction implicit in the Aristotelian-Medieval worldview was that between quantitative and qualitative changes. A change was said to be qualitative if it concerned the qualities of a thing. For instance, when someone learns to read and write, she acquires a new quality. Similarly, when a caterpillar transforms into a butterfly, it acquires a host of new qualities, such as the ability to fly. In contrast, a change was said to be quantitative if it concerned number, shape, or size, i.e. something expressible in numbers. But because mathematics can only be applied to quantifiable phenomena, Aristotelians also believed that mathematics cannot be applied to instances of qualitative change. This brought Aristotelians to the non-mathematical method: if a theory about certain qualitative changes uses some mathematics, it is unacceptable. This explains why, in the Aristotelian mosaic, mathematics was only permitted in those fields of science which were concerned with quantitative phenomena, and had virtually no place in their biology, physiology, or humoral theory.

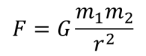

Cartesians rejected the strict distinction between quantitative and qualitative change. Again, Cartesians accepted that every material thing is simply a combination of interacting parts. So, any instance of qualitative change is in reality the rearrangement of these parts, a rearrangement that can be measured and expressed quantitatively. Since qualitative changes were, on this view, ultimately indistinguishable from quantitative ones, mathematics could, in principle, be used to describe any material process whatsoever. That is, Cartesians accepted that mathematics now had universal application, and accordingly employed a mathematical method.

Let’s recap this section on the methods employed in the Cartesian worldview. Foremost, Cartesians transitioned from employing the AM method to employing the HD method, requiring confirmed novel predictions to accept any claim about the inner, mechanical structure of the world. They did continue to employ the method of intuition, but in a more limited way than the Aristotelians: only to evaluate the fundamental principles of logic, mathematics, and metaphysics. Cartesians also required that all explanations of material processes be mechanistic, allowed the results of experiments to be considered when evaluating theories, and accepted that mathematics is universally applicable.

Summary

The following table compares some of the key metaphysical assumptions of the Aristotelian-Medieval and Cartesian worldviews we have discussed so far:

We will revisit both the Aristotelian-Medieval and Cartesian worldviews in the next two chapters and discuss some of their other metaphysical assumptions.