Digital Privacy: Leadership and Policy by Lorayne Robertson and Bill Muirhead is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

Ontario Tech University

Oshawa, ON, Canada

Title: Digital Privacy: Leadership and Policy

Editors: Lorayne Robertson & Bill Muirhead

Front cover—design by Chris D. Craig, photos by P. Deligiannidis (tech) and J. Dent (privacy).

Format: eBook

First published in 2022 through the University of Ontario Institute of Technology. Note: Ontario Tech University is the brand name used to refer to the University of Ontario Institute of Technology.

Recommended APA 7 citation:

Robertson, L., & Muirhead, B. (Eds.). (2022). Digital privacy: Leadership and policy. Ontario Tech University.

This project is made possible with funding from the Government of Ontario and through eCampusOntario’s support of the Virtual Learning Strategy. To learn more about the Virtual Learning Strategy visit: https://vls.ecampusontario.ca.

Digital Privacy: Leadership and Policy © 2022 by Lorayne Robertson and Bill Muirhead (Eds.) is licensed under CC BY-NC-SA 4.0

Drs. Robertson and Muirhead, supported by eCampusOntario and Ontario Tech University (UOIT), believe that education should be readily available to everyone, which means supporting the creation of accessible, open, and free educational resources. Wherever possible, the Digital Privacy open textbook adheres to levels A and AA of the Web Content Accessibility Guidelines (WCAG 2.0, 2.1), which are organized around four principles (perceivable, operable, understandable, and robust) and a set of conformance requirements (https://www.w3.org/TR/WCAG21/). Pressbooks was chosen for its commitment to built-in accessibility, outlined at https://pressbooks.org/accessibility/. Further, we completed Coolidge et al.’s (2018) checklist for accessibility during the final editing phase of book construction (available upon request).

1

This chapter will assist students to:

Throughout the chapters of this book on digital privacy in education, we will argue that digital privacy is a topic that is important to study and understand. First, we want to raise awareness of the breadth of surveillance in society today and to encourage students to question how much surveillance is normative and if they can accept that. In later chapters, we discuss the mechanisms for behavioural tracking and explain the mechanisms through which advertising is tailored to us as individuals and the magnitude of the corporate monopoly on curated advertising and content. We encourage students to take a critical stance and ask how comfortable they are with this level of exploitation of our summative individual preferences.

It is important to acknowledge that many students have not lived during a time when digital technologies were not pervasive. They may have almost no knowledge of what it feels like to be a private person. They may have no understanding that enables them to question how they are internalizing and acting out social norms established through the dictates of social comparison.

People seeking digital privacy want the ability to ensure that the information collected about them can be used only for the purposes to which they agreed at the time the information was collected. This may seem to be a lofty ideal, but it is an essential part of the vision for digital privacy in education. Canadian youth are beginning to explore their personhood, and they have the right to define who they are as adults without having to respond to the images and information about their youth or their formative moments.

Solove (2004) argues that the digital biographies that are being created about our personhood at present are unauthorized, partially true, reductive in nature and represent “impoverished judgements” of ourselves (p. 48). It is unfortunate that Canadians have to live with online biographies that do not allow them to unveil only those parts of themselves that they choose to show to others, when they choose to do so. It is also unfortunate that the present system of digital privacy does not allow people access to the full digital dossiers that have been collected about them. Solove argues that privacy decisions are made for people who are frequently excluded from the process. Likewise, he argues that choices to relinquish data are no longer actual choices in today’s economy (Solove, 2004). We encourage students accessing this Digital Privacy: Leadership and Policy e-book to raise questions about these issues and the need for general policies for data protection in Canada.

Privacy is both a social construct and a historical construct. By this, we mean that our understanding of privacy is a collection of ideas that have taken shape over time. The context impacts the definition of privacy. At one time, privacy meant something quite different than it does today. Consider a scenario from an earlier era in which a family takes pictures with an analog camera. The pictures are developed and saved in a box or an album. The parents have the security of knowing that these pictures of their children will only be shared with their consent. Their daughter does not have to worry that her three-year-old self will be seen in a later decade and shared with others unless she chooses to share the physical copy of the photo. In this context, privacy is the expectation that the photos remain private unless consent to share has been given.

Now fast-forward to the present, where people have become digital persons (Solove, 2004). Details about people and the choices in their lives are preserved permanently in giant databases that track their behaviour. In their wallets are credit cards, loyalty cards, health cards, bank cards and other cards that track and record where they are and what they are doing. Computers and devices routinely track every aspect of people’s preferences and what they have searched online. Digital surveillance does not only occur online; increasingly, cameras track our movements, and this data, too, is digitized and linked to online profiles. If people are on social media, other people, and not just computer databases, are following their day-to-day actions and reactions. They are no longer only physical people; according to Solove (2004), they have become digital persons complete with digital dossiers compiled by computer networks.

Nonetheless, we define digital privacy as an expectation of privacy unless informed consent has been given. This definition asserts that even digital persons can exercise some discretion with respect to how and where they share their digital information.
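For readers who think in terms of data systems, this definition can be read as a "private by default" rule. The sketch below is only an illustration under stated assumptions: the `ConsentRecord` class, the `may_use` function and the purpose labels are hypothetical names invented for this example, not part of any real privacy framework or statute.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical record of what one person has agreed to."""
    person: str
    # Purposes the person explicitly agreed to when the data was collected.
    agreed_purposes: set[str] = field(default_factory=set)


def may_use(record: ConsentRecord, purpose: str) -> bool:
    """Default to privacy: a use is allowed only for an agreed purpose."""
    return purpose in record.agreed_purposes


# Illustrative data only; the identifiers and purposes are assumptions.
student = ConsentRecord(person="student-001", agreed_purposes={"course_grading"})
print(may_use(student, "course_grading"))        # True
print(may_use(student, "targeted_advertising"))  # False
```

The design choice worth noticing is that nothing is permitted until consent is recorded, which mirrors the expectation of privacy described above. A real system would also need to capture whether the consent was informed, which a simple set of labels cannot show.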

Life online has become much more routine since the declaration of the global pandemic in 2020. For the first time, students spend more time online outside of school than in school. Internet use has spread globally but remains concentrated. In the top 38 countries reporting any internet usage, more than 80% of those populations report being online (Internet users, 2016). This internet participation brings with it both affordances and risks, and some of the risks are to privacy and security.

Another key construct to consider is that of personally identifiable information, or PII. This is information that is used to identify a person distinctly; it is linked to a person’s identity. Some elements of PII include name, country of origin, race, religion, age, gender identity, and sexual orientation. Other information can include education, health, criminal history and employment history. People are identified through symbols such as a phone number or postal code as well as biometric data such as fingerprints and blood type.

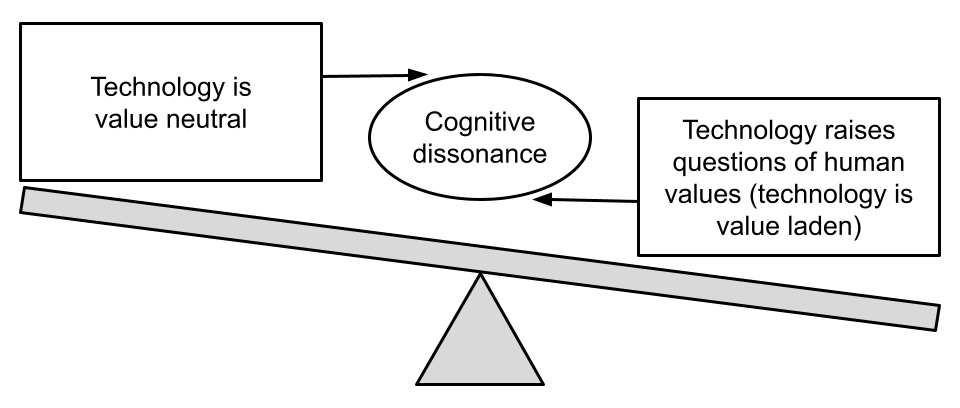

To put digital privacy in a very simplistic sense: technology facilitates the collection, distribution and storage of PII. Commerce seeks to maximize PII collection and use it to target advertising to specific groups. Consumers want to know that their privacy rights are protected despite the reality that they freely provide their PII. This creates a privacy paradox, which is one of the constructs that we study in this course.

This e-book is designed to accompany students and instructors undertaking the course Digital Privacy: Leadership and Policy. There are four modules in this 12-week course, and each module is intended to take the same amount of time: three weeks. Each module can be studied as part of the course or reviewed as a stand-alone resource.

The four modules of the Digital Privacy: Leadership and Policy course are:

We have designed this course with a moderate degree of structure because some research (e.g., Eddy & Hogan, 2014) and our own experience tell us that courses with a mid-level of structure are more helpful to a wider range of students. Here are the mid-level structural elements that have been designed into the course:

In the next section, we explain each of these elements of deliberate pedagogical design.

The learning outcomes for the Digital Privacy: Leadership and Policy course are listed in the course outline. Keen observers will notice that each of the learning outcomes has been addressed at least ten times throughout the course’s four modules. In simple terms, here are some of the outcomes.

Upon completion of the course, students should be able to:

Our goal in designing the course was to have students return continuously to these learning outcomes multiple times. Accordingly, the outcomes are reflected in all of the course modules, readings and assignments.

The Digital Privacy: Leadership and Policy course is designed to have elements that are studied by students before, during and after class. According to Eddy and Hogan (2014), moving much of the information transmission to before class has been found to free up 34.5% more time during class to reinforce major concepts, higher-order thinking and study skills. This strategy allows students to spend as much time as they need to fully prepare for class. Engaging students in preparing for the course in advance of the class promotes academic achievement and is significantly helpful for learners who are first-generation post-secondary students (Eddy & Hogan, 2014). Below is a quick overview of how this works.

Before class: Students review the slides for the class and study the assigned readings in order to prepare for class. In this way, when they come to the online, synchronous class, they have accessed the knowledge for that topic and are better prepared for discussions and other in-class activities. The readings for this course have been carefully selected to align with the course learning outcomes. Knowing that some students are keenly interested in this topic, we have provided additional readings at multiple points. Pre-class preparation may also include videos and activities.

During class: The Instructor assumes that the students have reviewed the slides and they are familiar with the readings. Accordingly, the instructor spends less time providing information so that more of the class time is dedicated to:

After class: Students are assigned coursework to complete after class. This includes preparation for the next week and assignments.

Distributed learning (also called the spacing effect) is an element of moderate structure in a course that paces students’ learning. It is the opposite of cramming at the last minute and hoping to pass the course. Better learning occurs when the learning opportunities are spaced apart rather than happening close together. When learning is spaced apart, it is more likely to have the students’ attention and they are more likely to connect it to other contexts (Carpenter, 2020). Students spread out the time they spend on a concept with pre-reading activities, in-class assignments, and post-class reviews. This has a direct impact on how well they perform in the course (Eddy & Hogan, 2014). Distributing the learning also allows the students to cycle back to the key concepts in a course frequently.

This course has some cumulative assignments related to the case study. Students craft a plan for a case study and receive feedback. Throughout the course, they identify policies related to their case study and seek solutions. They present their case study to the other students and then use this case study as the basis for their final, collaborative paper. Giving early feedback helps to build a student’s sense of safety that they are “on the right track.” The second assignment builds on the first, and the final assignment is a culmination of their learning in the course. Rich asynchronous feedback, combined with synchronous discussions between the instructor and student that mimic traditional office hours, creates an environment in which the instructor and students learn and explore together.

More and more, instructors are moving away from teacher-centred pedagogies, where the instructor is the source of information. In this course, we rely on the students to research topics thoroughly before class and come to class with a good understanding of the topic and key questions. In this way, students can add their prior experience to the discussions. It is important to design learning activities in the course where students interact and share knowledge.

Students in online courses are encouraged to share their cameras and their voices so that others can get to know them. Garrison (2009) describes social presence as “the ability of participants to identify with the community (e.g., course of study), communicate purposefully in a trusting environment, and develop interpersonal relationships by way of projecting their individual personalities” (p. 352). Garrison (2011) explains further that, “where social presence is established, students will be able to identify with the group, feel comfortable engaging in open discourse, and begin to give each other feedback” (p. 33). We would argue that social presence is a key element to deeper cognitive engagement, critical thinking and student success.

Another deliberate element of the design of this course is collaboration. Johnson et al. (1994) first defined collaborative learning through the concept of positive interdependence. This is where group members assume responsibility for ensuring that the group is successful and students, in turn, can depend on other group members to do their part. This course was designed to encourage students to develop both social presence and collaboration skills.

Earlier models of online learning had fewer opportunities for collaboration in real-time. Today’s technologies allow students to participate in courses and share dialogue, video and images. They can use multiple communication channels simultaneously, such as using the Chat as a backchannel to ask questions or affirm comments in a way that does not interrupt the flow of the discussion in class. Google’s G-Suite allows students to collaborate in real-time for document creation or asynchronously. Some students use quasi-synchronous forms of chat, such as WhatsApp, that allow real-time responses or responses with a short delay. According to Dalgarno (2014), these affordances allow students to engage more deeply with the topic.

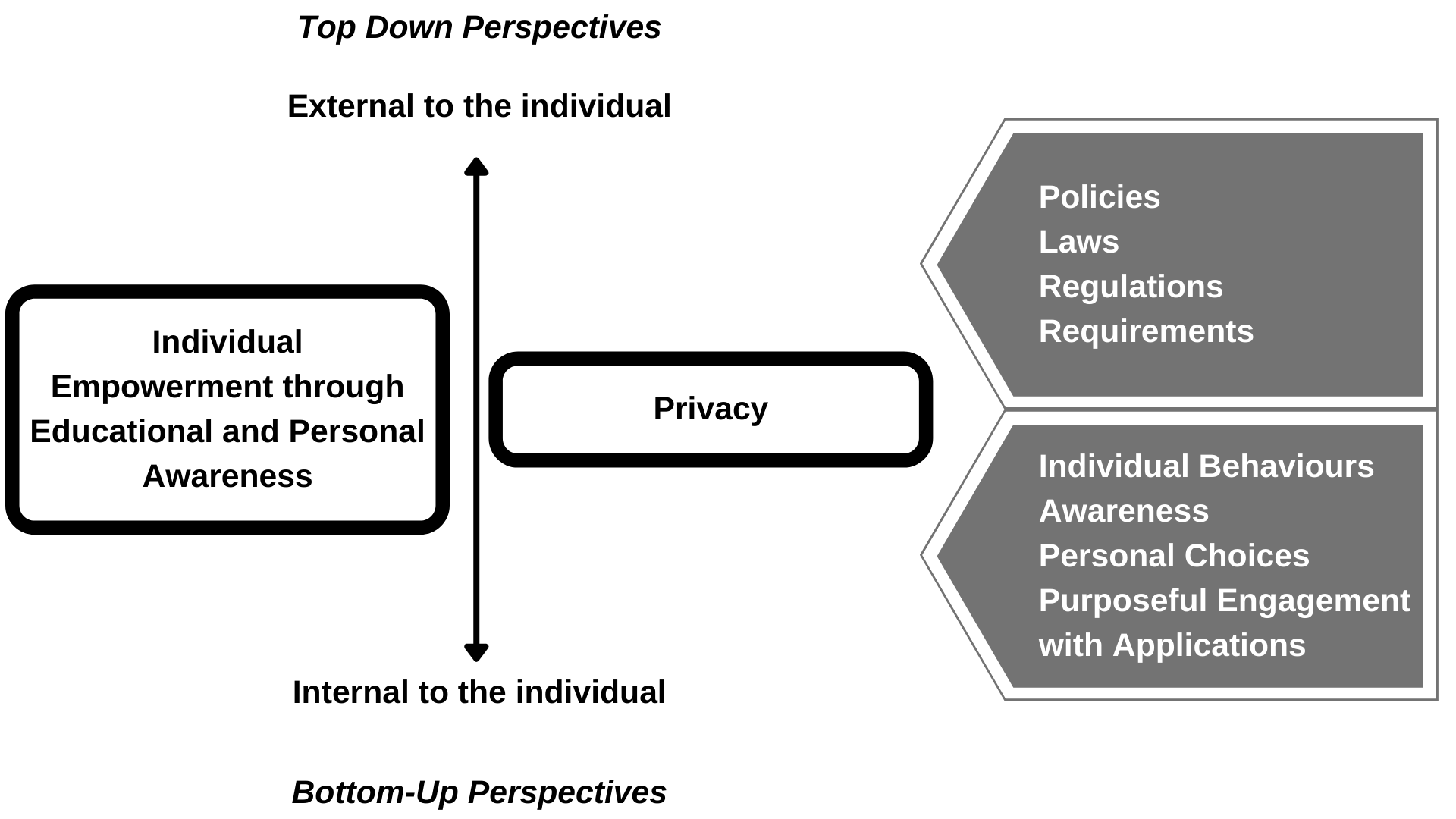

In this text, the authors have taken a critical stance toward interrogating the concepts of digital privacy and surveillance in education. We have recognized that, as Apple (1999) has stated, much of our understanding has come from the dominant cultural groups in society. Kincheloe (2008), a critical pedagogy scholar, argues that we have assumed that what we have learned in school and the academy is inherently what is “best” for students. Critical scholars know, however, that our schooling history had gaps and omissions. There are other ways of knowing and other experiences that were left out of traditional education. This preferential treatment of colonial, patriarchal, monocultural knowledge was presented as “neutral” but it has negatively impacted the education of members of marginalized groups. Processes considered “best practices” may not be the most inclusive educational practices. In order for change to happen, teachers must become researchers and question past assumptions. Educator-researchers also need to interrogate past practices where teachers were considered the deliverers of information that may have represented a singular reality or an oversimplification of issues that require complex considerations. One key to questioning dominant perspectives is to see how power is linked to the political economy and to examine its effects on individuals who are at different social locations (Kincheloe, 2008). Accordingly, in this e-book, the authors examine digital privacy in education in its complexity and encourage educator-researchers to interrogate assumptions within what Garrison (2011) has described as a community of inquiry.

Freire’s (2007) pedagogical approach encourages students not to accept that “what is” is the way that it will always be (p. 84). His pedagogy encourages the cultivation of a critical consciousness in his students, where they seek to understand the social, political and economic contradictions. Freire encouraged individual empowerment for social change (Freire, 2007). We take a similar approach to student empowerment in the Digital Privacy: Leadership and Policy course and in this accompanying e-book. In Chapter 2, for example, Robertson and Corrigan question the dominant narratives surrounding youth surveillance in education and encourage instead an approach where students, parents and schools share responsibilities. In Chapter 4, Robertson and Muirhead present a critical policy analysis framework that encourages educator-researchers to examine whose perspectives have been considered and whose are missing in policy design and implementation. Policy paradoxes in Chapter 6 are inherently complex and contested. In the final chapter, Case Studies, we encourage teacher-researchers to explore digital privacy cases in their complexity and feel empowered to call for change and renewal.

Here is a brief summary of the topics and the organization of this e-book:

In the Introduction, we describe and define Digital Privacy as a construct. We explain the pedagogical foundations of the e-book and introduce four pedagogical themes that underpin all of the modules of the course.

In Chapter 2, we discuss why Digital Privacy is important for education settings and talk about the roles of teachers, learners, boards, colleges, and institutions of higher education in digital privacy. Some key terms, such as Duty of Care, are introduced as we talk about the vulnerability of students online.

In Chapter 3, the focus is on Case Studies, defining them and explaining how they work. Characteristics of great case studies are reviewed, and students can find more ideas about how to present a case study.

Chapter 4 introduces students to Critical Policy Analysis, which is a framework for reviewing policies and procedures. In this chapter, the authors explore policy definitions and look at the processes by which policies are enabled. We examine who enacts policies and how policies take on a life or trajectory of their own. The authors also present a Critical Policy Analysis framework that helps students examine the fairness of a policy.

In Chapter 5, we examine key elements of Policies and Privacy Legislation. We examine some key definitions shared by policies and compare policies in different jurisdictions (Canada, the United States and Europe). We also look at some examples of policies.

In Chapter 6, we attempt to unpack the Privacy Paradox by defining it. We look at the factors involved in enabling privacy paradoxes and how they reflect our priorities and our values. We also look at the corporate harvesting of data and dig deeper into corporate ownership of social media platforms and how they monetize access to data. In this chapter, students are encouraged to reflect on their own levels of privacy and look at some tools for minimizing their exposure to online risk. Next, we look at the principles of Privacy by Design. We delineate some privacy competencies and discuss how educators might protect themselves and their students when working online and in social media.

Chapter 7 delineates Digital Privacy Tools and Technologies for communication and helps students become familiar with how to manage their digital footprint, how to protect their privacy while web browsing and how to recognize digital privacy risks in the home.

In Chapter 8, Today’s Devices and Tomorrow’s Technologies, the author discusses the digital privacy implications of smart devices, wearable technologies, the Internet of Things (IoT) and other emerging technologies, including the risks and threats that they pose to digital privacy.

Chapter 9: Educational Leadership for Digital Privacy encourages students to put it all together and reflect on the different ways that they can show leadership in digital privacy. Students will be encouraged to consider the key takeaways from the course and how they will affect their future practices in education, personal life and professional practice. In consideration of the future, students will be asked to consider how to share the responsibility to protect students’ digital privacy.

Chapter 10, Case Studies and Scenarios, is “under construction” because it will be authored by students who design case studies for this e-book. Every year, selected case studies that are sent to the authors can be added to this e-book.

Apple, M. W. (1999). Power, meaning, and identity. Peter Lang.

Carpenter, S. K. (2020). Distributed practice or spacing effect. Oxford Research Encyclopedia of Education. https://doi.org/10.1093/acrefore/9780190264093.013.859

Dalgarno, B. (2014). Polysynchronous learning: A model for student interaction and engagement. In B. Hegarty, J. McDonald, & S.-K. Loke (Eds.), Rhetoric and reality: Critical perspectives on educational technology. Proceedings ascilite Dunedin 2014, 673–677. https://ascilite.org/conferences/dunedin2014/files/concisepapers/255-Dalgarno.pdf

Eddy, S. L., & Hogan, K. A. (2014). Getting under the hood: How and for whom does increasing course structure work?. CBE—Life Sciences Education, 13(3), 453–468. https://doi.org/10.1187/cbe.14-03-0050

Freire, P. (2005). Pedagogy of the oppressed (30th-anniversary edition). Continuum International. https://envs.ucsc.edu/internships/internship-readings/freire-pedagogy-of-the-oppressed.pdf

Garrison, D. R. (2009). Communities of inquiry in online learning: Social, teaching and cognitive presence. In P. L. Rogers, G. A. Berg, J. V. Boettcher, C. Howard, L. Justice, & K. D. Schenk (Eds.), Encyclopedia of distance and online learning (2nd ed., pp. 352–355). IGI Global.

Garrison, D. R. (2011). E-learning in the 21st century: A framework for research and practice (2nd ed.). Routledge.

Internet users by country (2016). (2016, July 1). Internet Live Stats. https://www.internetlivestats.com/internet-users-by-country/

Johnson, D. W., Johnson, R. T., & Holubec, E. J. (1994). The New Circles of Learning: Cooperation in the classroom and school. Association for Supervision and Curriculum Development.

Jorgensen, C. (2021, January 13). Child home schooling [Photograph]. Unsplash. https://unsplash.com/photos/leyUrzdwurc

Kincheloe, J. L. (2008). Critical pedagogy primer. Peter Lang.

Robertson, L., & Muirhead, B. (2019, April). Unpacking the privacy paradox for education. In A. Visvizi, & M. D. Lytras (Eds.), The international research & innovation forum: Technology, innovation, education, and their social impact (pp. 27-36). Springer, Cham. https://doi.org/10.1007/978-3-030-30809-4_3

Solove, D. J. (2004). The digital person: Technology and privacy in the information age. New York University Press. https://scholarship.law.gwu.edu/cgi/viewcontent.cgi?article=2501&context=faculty_publications

2

This chapter will assist students to:

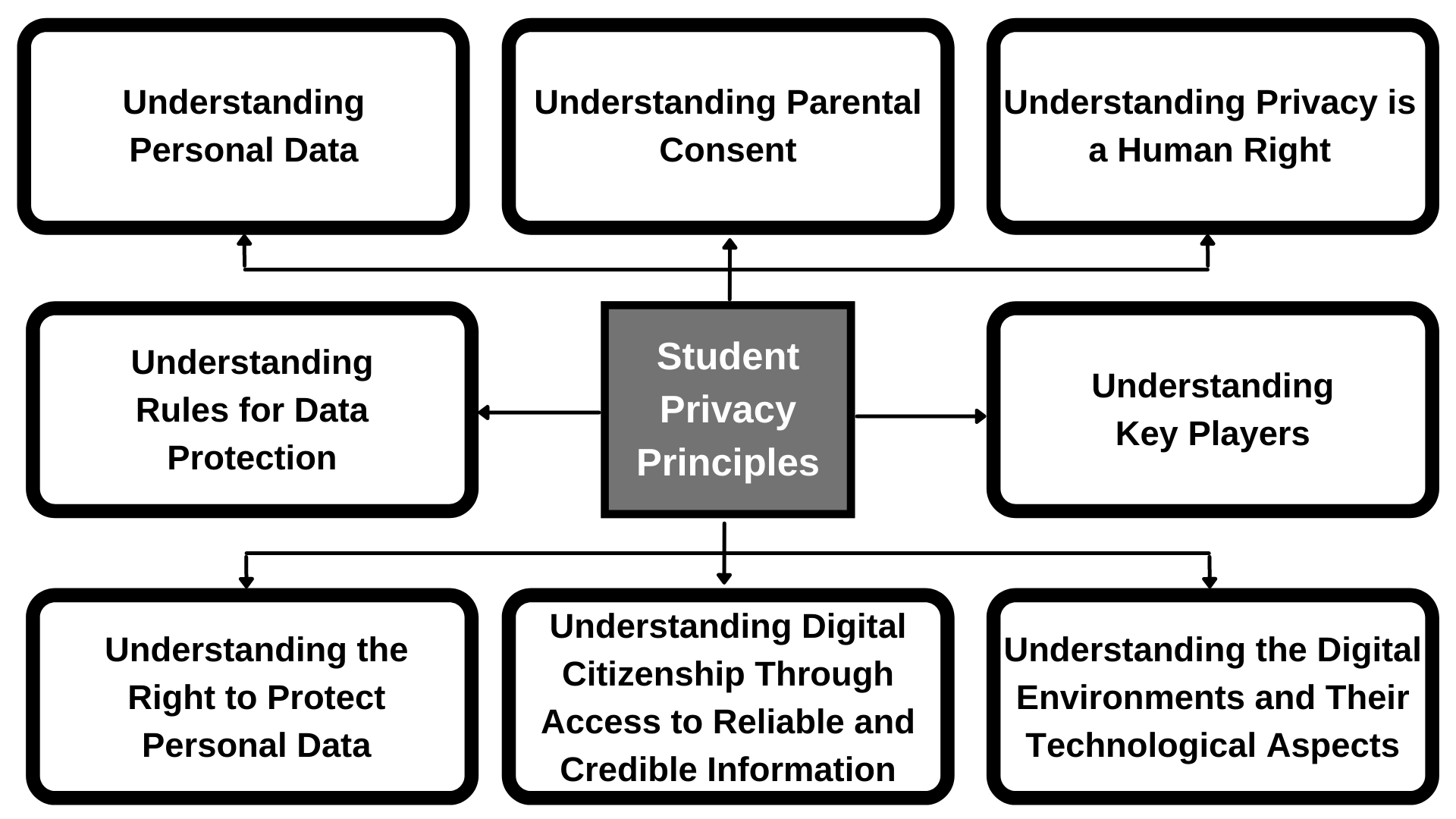

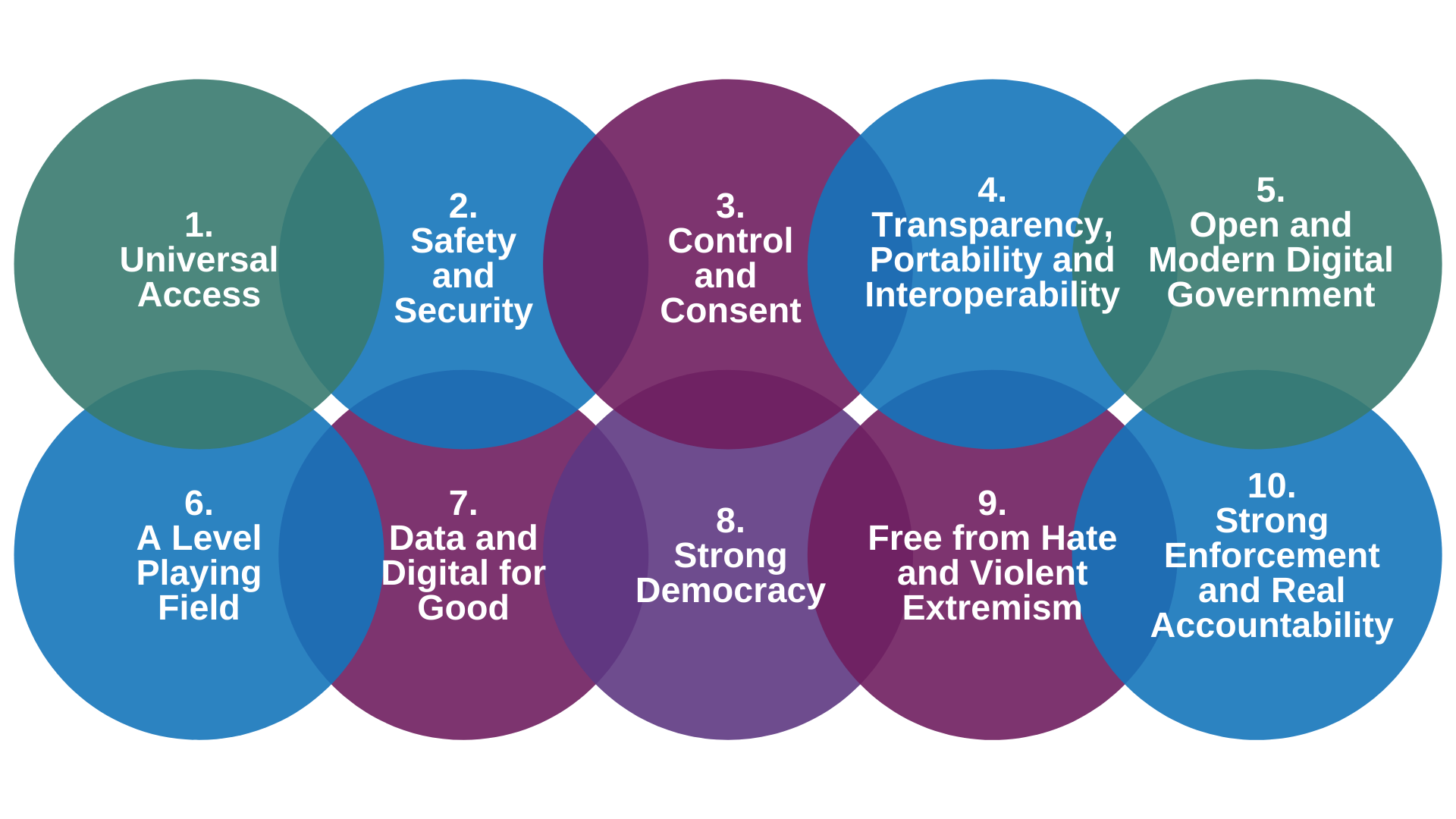

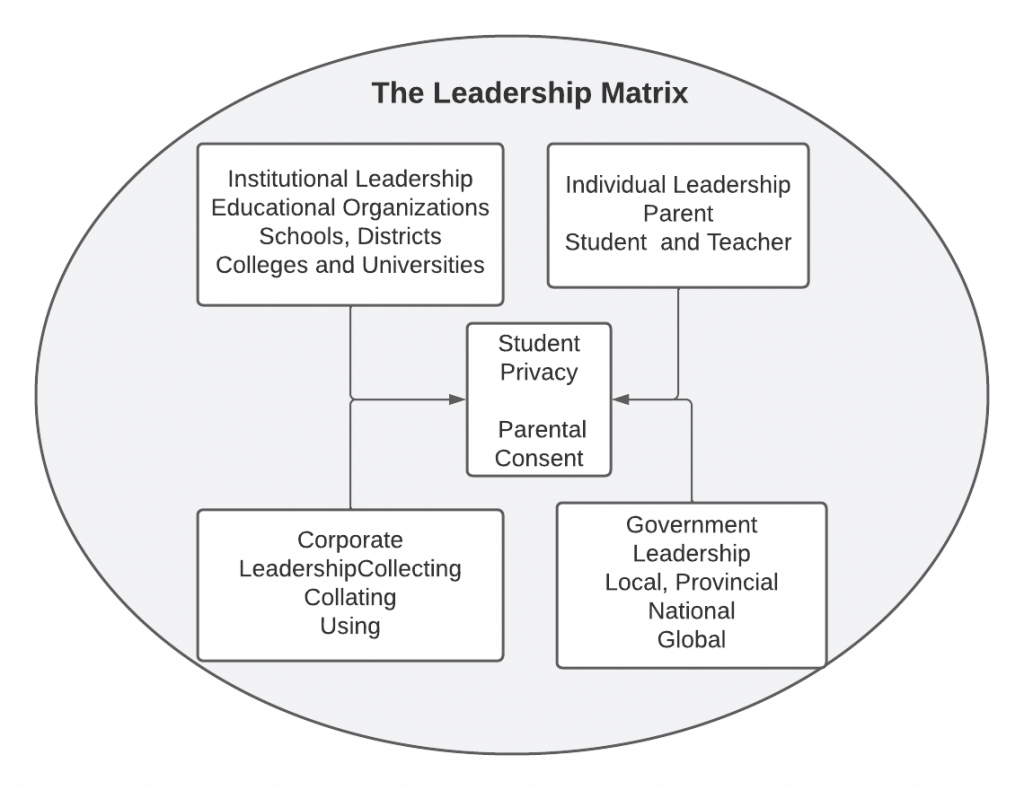

In this chapter on digital privacy in education, we explore how digital technology and artificial intelligence have greatly expanded both the opportunities for internet use and for increased digital surveillance. We examine the educational implications of data surveillance and consider the role of schools in an era that is characterized by the steady creep of passive data collection. Secondly, we ask questions of educators regarding the types of curriculum and policy responses that are needed. Finally, we argue for a much more critical examination of the role of digital privacy and surveillance in schools. Figure 1 outlines our reflection of student privacy principles in this chapter.

Figure 1.

Student privacy principles.

Digital privacy solutions for students are multi-faceted. Students need to understand what constitutes personal data and understand that privacy is a human right. They need to understand the context of the present digital environment, and that there are multiple players in the system who are looking to have access to their data or looking to protect their data. Students also need to acquire skills of digital citizenship so that they understand that information can be curated and mediated. They need to know how to access credible, reliable information. Finally, students need to understand why it is important to protect their personal information and why there are rules for data protection. Students of vulnerable ages need to know how to work with their parents’ supervision in order to protect their data. In sum, the overall picture for digital privacy in education is complicated!

Key topics in this chapter are as follows:

In 2020, Canada was in the throes of a global pandemic, and Canadians were required to work from home and attend school remotely. Many changes in Canadians’ internet usage occurred in the five years that led up to the pandemic and there were more changes during the pandemic. Statistics Canada (2021) reports that many Canadians considered the internet to be a lifeline during the first waves of the pandemic. Four out of five Canadians shopped online, and their online spending rose significantly (Statistics Canada, 2021). Four out of five Canadians watched streamed content, with 38% reporting that they watched more than ten hours in a typical week (Statistics Canada, 2021). Not surprisingly, more than two-thirds of Canadians used the internet to monitor their health (Statistics Canada, 2021). The statistics were clear also for young Canadians; the vast majority (98%) of young Canadians reported that they were using the internet (Statistics Canada, 2021). Smartphone use also increased with 84% of Canadians reporting that they used a smartphone to communicate (Statistics Canada, 2021). Almost half of this group reported that they check their phone every half hour (Statistics Canada, 2021).

The prevalence of devices such as laptops, Chromebooks, and cell phones with their related applications, as well as the emergence of smart home devices, has done more than simply change the technology landscape. As technology has become an increasingly large part of people’s lives, the result has been a gradual erosion of Canadians’ privacy. This is happening for multiple reasons. First, users lack the time to read, understand and give informed consent, which we would define as consent with full awareness of the risks involved. The Office of the Privacy Commissioner defines meaningful consent as knowledge of what information is being collected, the purpose for the collection, and how the information will be shared, together with an understanding of the risks and consequences. Second, privacy agreements are long and convoluted. Third, with so many Canadians accessing the internet on their phones, and so many young people using the phone for social media, gaming and instant messaging, the fine print of privacy policies read on phones is so small that it almost necessitates click-through rather than a detailed study of the implications of agreeing to a disclosure of privacy. Even the Canadian Internet Registration Authority requires the download of an app and a series of instructions in order to provide users with screening tools for pop-up advertising on devices.

A critical examination of digital privacy requires stepping back and taking a deeper look at what is happening. Parents and educators also need to analyze what is happening, as they are the role models and imprinters for their children and students who navigate the digital world. We argue in this chapter that Canadians must consider multiple threats to individual privacy. Some of these threats include the collection and marketing of personal data. Other threats involve increasing surveillance of youth by trusted adults without consideration of the implications of over-surveillance for their individual privacy.

In Canada, the Office of the Privacy Commissioner (OPC) was making recommendations to protect the online privacy of Canadian children as early as 2008 (OPC, 2008). The Privacy Commissioner urged providers of content for youth to ensure that young people visiting websites could read and understand the terms of use. The Privacy Commissioner website focuses on two aspects of online activity in youth: personal information protection and online identity and reputation. No legislation, however, has been presented in Canada to protect the information of children and youth, leaving children’s privacy largely unprotected as it relates to educational content and policy. Central concepts of digital privacy such as digital footprint, digital dossier and digital permanence have not found their way into everyday curriculum policies.

Confounding the issue of digital privacy protection for children and adolescents is the patchwork of policy solutions. For example, Canada has devolved responsibility for education to the provinces and territories, which design operational and curriculum policies (Robertson & Corrigan, 2018). In Ontario, however, municipalities and cities oversee the protection of personal information. The Municipal Freedom of Information and Protection of Privacy Act (MFIPPA; 2021) defines personal information as recorded information that can be used to find someone’s identity. This recorded information about an individual includes the following areas and more: a) race, origin, religion, age, gender, sexual orientation, or marital status; b) educational, medical, psychiatric, criminal, or employment history; c) any identifying number or symbol; and d) address, telephone, fingerprints, blood type, and name. There is little in the MFIPPA policy to indicate that it has been updated for the digital realm, although it does acknowledge that a record could be electronic (1990, p. 3). MFIPPA states that institutions shall not use personal information in their custody (S. 31) unless they have consent (Robertson & Corrigan, 2018).
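To make the four areas (a to d) listed above more concrete, the following sketch shows how a school data system might flag which fields in a record count as personal information. It is a minimal illustration, not a legal test: the field names, the `MFIPPA_AREAS` mapping and the `personal_information_fields` function are assumptions made for this example, and the Act covers more than these four areas.

```python
# Hypothetical grouping of record fields under the four areas (a-d)
# summarized in the text. Field names are illustrative assumptions.
MFIPPA_AREAS = {
    "a": {"race", "origin", "religion", "age", "gender",
          "sexual_orientation", "marital_status"},
    "b": {"educational_history", "medical_history", "psychiatric_history",
          "criminal_history", "employment_history"},
    "c": {"identifying_number", "identifying_symbol"},
    "d": {"address", "telephone", "fingerprints", "blood_type", "name"},
}


def personal_information_fields(record: dict) -> dict:
    """Return the record's fields that fall under each area, keyed by area letter."""
    found = {}
    for area, fields in MFIPPA_AREAS.items():
        # Keep only the field names that appear in this record.
        matches = sorted(fields.intersection(record))
        if matches:
            found[area] = matches
    return found


# Illustrative record; the values are invented.
student_record = {
    "name": "A. Student",
    "age": 15,
    "identifying_number": "12345",
    "favourite_colour": "green",
}
print(personal_information_fields(student_record))
# {'a': ['age'], 'c': ['identifying_number'], 'd': ['name']}
```

In this example, three of the four fields would be treated as personal information, while `favourite_colour` would not be flagged. Whether a given field actually identifies someone depends on context, so a list like this can only be a starting point for discussion.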

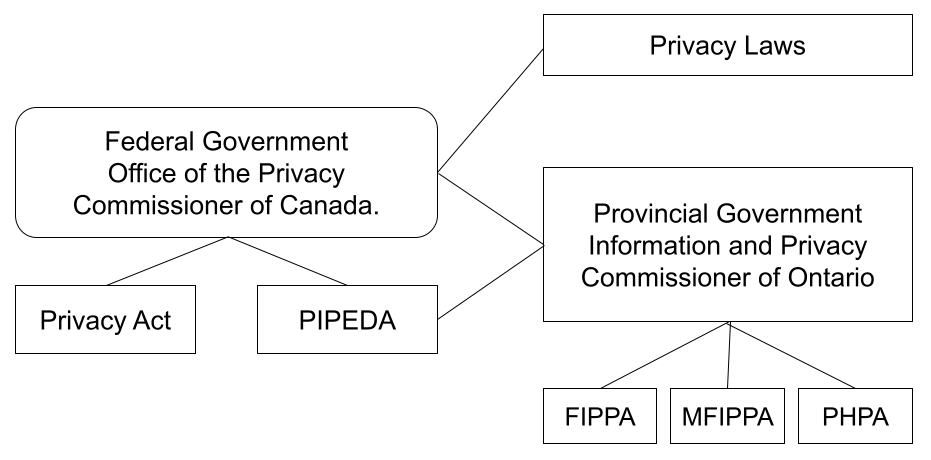

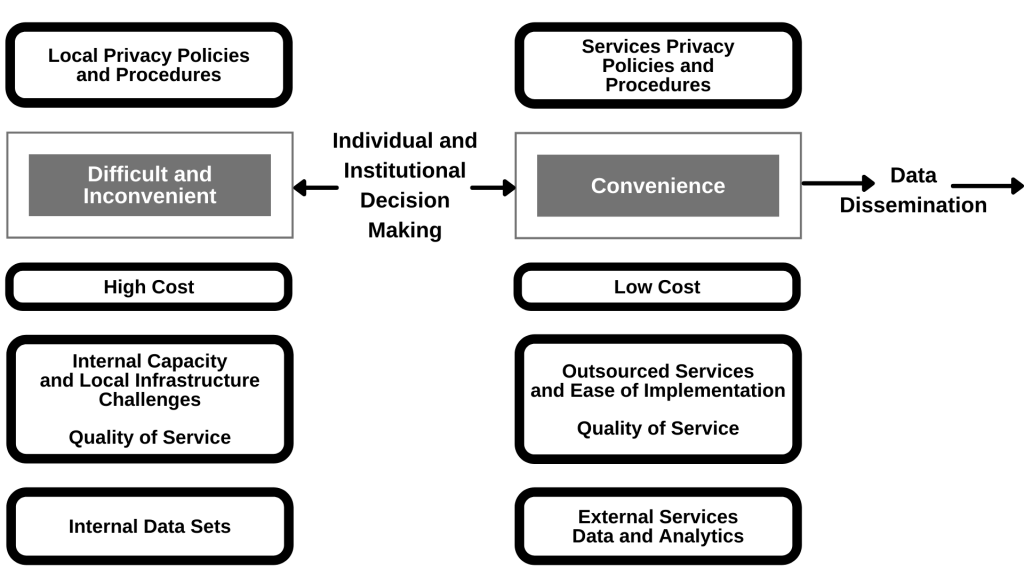

In summary, the context of digital privacy in Canada is one where reliance on the Internet for education, work and leisure is increasing. Digital/mobile applications require consent to use them, but the consent forms are lengthy and difficult to decipher. A patchwork of national, provincial and municipal services oversees privacy. The office of the Privacy Commissioner of Ontario provides advice, but there is little coordination evident between the offices overseeing privacy and the provincial designers of curriculum and operations in education. There appears to be an agreement in policy that personal information should be protected, but there has been no pathway forward to design comprehensive privacy protection.

Another key aspect of the present context is that of surveillance, which has become so much a part of our lives that it has quite literally become a backdrop to the everyday. In this section of the chapter, we discuss the collection of human experiences as behavioural data as one form of surveillance. In addition, we review how surveillance can also be packaged and sold as student safety measures.

Recently, a colleague purchased potato chips with cash at a convenience store. The next day, when he received pop-up advertisements for chips on his home computer, he wondered if it could be a coincidence. Since he did not use a bank card or a credit card for the initial purchase, he thought his purchase was anonymous. He was unaware of the hidden workings of surveillance cameras in stores and how his phone and other devices were tracking his whereabouts. He was also unaware that data about his purchasing habits and location were potentially being captured and shared without his express consent. He was certainly not aware that data related to his purchasing habits were being sold and shared.

The New York Times Privacy Project made the claim that location data from sources hidden in mobile phone apps have made an open book of the movements of millions of Americans, as it is recording who and where they visit and for how long. Some of the data is recorded by unregulated and unscrutinized companies (Thompson & Warzel, 2019). Similar claims have been made in Canada that Google and Facebook are tracking users’ search data for marketing purposes (Dangerfield, 2018). Key critical questions need to be asked and answered regarding the rights of individuals to privacy and the growing apathy and immobility to challenge digital surveillance for corporate gain.

The Center for Democracy and Technology in the United States has recently raised issues of pervasive student surveillance on school-owned computers distributed during emergency remote learning. In a letter to the US Senate, they suggest that,

Student activity monitoring software can permit school staff to remotely view students’ computer screens, open applications, block sites, scan student communications, and view browsing histories. It may utilize untested algorithmic technology to flag student content for review, and security flaws have also permitted school personnel to access students’ cameras and microphones without students’ permission or awareness. (Venzke & Laird, 2021, p. 1)

Haskins (2019) argues that almost five million students in the US are being watched online in schools by the surveillance industry and students do not have the opportunity to opt-out of being watched. She reports that Gaggle uses a combination of artificial intelligence and human moderators to track students’ emails and their online work, including messages that come into the cloud-based learning site from social media. One of the concerns she raises is that LGBTQ words are on the banned words list. She also raises the overall concern that students are subject to relentless inspection. She questions if the cost of this surveillance reflects the real priorities of school districts (Haskins, 2019).

Hankerson et al. (2021) report that surveillance of student devices treats different groups of students differently. Students using the school’s digital devices are monitored more than students with personal devices. Students living in poverty are less likely to own personal devices and are therefore monitored more. Local education authorities in the United States are seeking student activity monitoring vendors to surveil students in the name of protection of digital privacy. Similarly, Feathers (2019) reports that, in the United States, schools use snooping tools to ensure student safety, but some studies looking into the impact of these tools are showing that they may have the opposite effect, such as damaging trust relationships and discouraging communities of students (e.g., LGBTQ) who look for help online.

Despite these reports, a survey for the Center for Democracy and Technology (2021) in the United States finds that parents and teachers acknowledge the privacy concerns, but they see that the benefits of student activity monitoring outweigh the risks (Grant-Chapman et al., 2021).

Fisk (2016) has raised different but equally important concerns about the surveillance of youth. As they have increasingly documented their lives online (e.g., through Instagram, TikTok, etc.), adult surveillance of their lives has increased to monitor many of the previously unsupervised spaces in their lives. Fisk has identified pedagogies of surveillance (p. 71) that observe, document and police the behaviours of youth. Parents, for example, use the tracking devices on their children’s phones or fitness apps to monitor their whereabouts. Internet safety presentations are designed to destabilize parents’ awareness of what youth are doing online and sell internet safety to parents and guardians. A critical examination of these practices asks: Who profits from these youth surveillance initiatives? Who are the winners and the losers? Do young people have a right to know when they are being monitored online?

Palfrey et al. (2010) at the Harvard Law School argue compellingly that youth need to have an opportunity to learn about digital privacy and acquire digital privacy protection skills. They explain it in the following way:

We also need to begin the conversation with a realization that adult‐driven initiatives – while an important piece of the puzzle – can only do so much. Youth must learn how to handle different situations online and develop healthy Internet practices. Through experience, many youths are able to work out how to navigate networked media in a productive manner. They struggle, like all of us, to understand what privacy and identity mean in a networked world. They learn that not everyone they meet online has their best intentions in mind, including and especially their peers. As with traditional public spaces, youth gain a lot from adult (as well as peer‐based) guidance. (Palfrey et al., 2010, p. 2)

There are mixed messages with respect to who has the responsibility to teach and reinforce internet safety guidelines to protect students’ privacy. MediaSmarts, which is a Canadian nonprofit, reports in one study (Steeves, 2014) that more students said that they learned about internet safety from their parents than from the school. Students say that their teachers are more likely to help them in other ways, such as helping them search for information online and how to deal with cyberbullying than to teach them about digital privacy. The reality is that parents cannot assume that teachers are addressing digital privacy and teachers cannot assume that parents are doing this. Digital privacy education is a shared responsibility.

Students may not know that their digital footprint, which is the record of all the places they have visited online, is searchable and can be connected back to them. Some of the data is collected actively, through logins. Some of the digital footprint, such as which websites are visited and for how long, is collected passively as they surf the web. This data is called clickstream data because it shows where a user has navigated online (Solove, 2004). Another means of passive data collection is through cookies or tags, which are small pieces of data or code that a website places on the user’s device when they visit.
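
To make the idea of passive collection concrete, the following is a minimal sketch of how a clickstream could be rebuilt from a page-view log keyed by a cookie identifier. The log entries, the cookie IDs, the example URLs and the function name `build_clickstream` are all invented for illustration; real tracking systems are far more elaborate, and this is not a description of any particular company’s method.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical page-view log: (cookie_id, timestamp, url). All values are invented.
PAGE_VIEWS = [
    ("cookie-abc", "2022-01-10 09:00:00", "news.example/sports"),
    ("cookie-abc", "2022-01-10 09:02:30", "shop.example/sneakers"),
    ("cookie-abc", "2022-01-10 09:10:30", "shop.example/checkout"),
    ("cookie-xyz", "2022-01-10 09:01:00", "news.example/weather"),
]


def build_clickstream(page_views):
    """Group page views by cookie ID and estimate the seconds spent on each page."""
    by_cookie = defaultdict(list)
    for cookie_id, stamp, url in page_views:
        when = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S")
        by_cookie[cookie_id].append((when, url))

    clickstream = {}
    for cookie_id, visits in by_cookie.items():
        visits.sort()  # chronological order
        trail = []
        # Time on a page is approximated as the gap until the next page view.
        for (start, url), (next_start, _) in zip(visits, visits[1:]):
            trail.append((url, int((next_start - start).total_seconds())))
        # The time spent on the final page cannot be known from the log alone.
        trail.append((visits[-1][1], None))
        clickstream[cookie_id] = trail
    return clickstream


if __name__ == "__main__":
    for cookie, trail in build_clickstream(PAGE_VIEWS).items():
        print(cookie, trail)
```

Even in this toy version, no login is needed: the cookie identifier alone is enough to tie together which pages were visited, in what order, and roughly for how long.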

One company, DoubleClick, capitalizes on clickstream data: it accesses cookies on the user’s computer, looks up the person’s profile and then sends advertisements targeted specifically for that person in milliseconds. Hill (2012) explains how another company, Target, looked over customers’ purchases and predicted whether or not they might be pregnant. After a father complained that his daughter was receiving targeted baby product ads, the company began disguising this targeted advertising by putting ads for lawn equipment beside the baby ads. In 2004, DoubleClick had more than 80 million customer profiles (Solove, 2004). This changed significantly when Google purchased DoubleClick.

According to Lohr (2020), the acquisition of DoubleClick by Google in 2007 was a significant game-changer for Google because Google in 2007 was one-tenth of the size that it became by 2020. At present, Google owns the leading browser globally with accompanying email, meeting space and other software, but the source of most of its tremendous profits is its advertising (Lohr, 2020). The dominance of Google has led to investigations by the Justice Department (Geary, 2012; Lohr, 2020) and the filing of an antitrust lawsuit over its search engine in October 2020. Hatmaker (2021) reports that an additional antitrust lawsuit over Google Play was filed in July 2021.

According to Geary (2012), DoubleClick (Google) operates two categories of behavioural targeting. For the first, a website owner can set a DoubleClick cookie to track which sections of their website you are browsing. DoubleClick also tells advertisers how long the ad is shown and how often it will appear to the user. Secondly, Google runs AdSense, where different publishers pool the information from browsers; this is third-party advertising. The two systems can work together to categorize the person’s ad preferences. Categories are established to help advertisers target directly to you. As a user, you can visit Google’s ad preferences manager to see how your preferences have been categorized.
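
As a rough illustration of how browsing behaviour could be turned into advertising categories, the sketch below counts visits to sections of a website and assigns an interest category once a threshold is reached. The section-to-category table, the threshold and the function name `infer_ad_categories` are assumptions made for this example only; they do not describe how DoubleClick or AdSense actually work.

```python
from collections import Counter

# Hypothetical mapping from website sections to ad-interest categories.
SECTION_CATEGORIES = {
    "/sports": "Sports",
    "/travel": "Travel",
    "/recipes": "Food & Drink",
}


def infer_ad_categories(visited_paths, min_visits=2):
    """Return the interest categories visited at least `min_visits` times."""
    counts = Counter()
    for path in visited_paths:
        for prefix, category in SECTION_CATEGORIES.items():
            if path.startswith(prefix):
                counts[category] += 1
    return sorted(cat for cat, n in counts.items() if n >= min_visits)


if __name__ == "__main__":
    history = [
        "/sports/hockey",
        "/sports/soccer",
        "/travel/paris",
        "/recipes/soup",
        "/recipes/bread",
    ]
    print(infer_ad_categories(history))
```

In this sketch a single visit to the travel section is not enough to be labelled, but two visits to sports or recipes are. The point for students is that a profile can be inferred from behaviour alone, without the user ever stating an interest.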

Students also may not understand digital permanence, which is the understanding that data posted online is very difficult (impossible) to delete. Students may not be aware that inappropriate or thoughtless messages posted today can impact future employment. They may also not be thinking that humour to a pre-teen may not be the humour they will appreciate when they are older. Some studies show that young people do care about privacy and want to protect their information (Palfrey et al., 2010; Steeves, 2014). The issue remains, however, that there is no clear national direction or consensus on the protection of personal information that also limits the degree of passive surveillance permitted by law.

The collection of information about us without our consent is not a new phenomenon. According to Solove (2004), in the 1970s, the American government started selling census data tapes. They sold the information in clusters of 1500 homes with only the addresses (no names). Marketing companies matched the addresses using phone books and voter registration lists (Solove, 2004). Within 5 years, they had built databases for over half of American households (Solove, 2004). In the 1980s, they wanted to add psychological kinds of information about beliefs and values, which led to the creation of taxonomies of groups of people who had income, race, hobbies and values in common. By 2001, the marketing of these databases, which allows advertisers to target your mail, email or phone through telemarketing, grossed over $2 trillion in sales (Solove, 2004). Yet, it is not possible to sign up for a bank account or a credit card without offering up much of your personal information (Solove, 2004).
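
The address-matching step that Solove describes can be sketched in a few lines. The example below joins a nameless dataset to a named one using the address as the shared key. Both datasets, the field names and the function names are invented for illustration; the sketch only shows why removing names does not by itself make records anonymous.

```python
# Hypothetical "anonymous" records: addresses with attributes but no names.
census_clusters = [
    {"address": "12 Elm St", "cluster_income": "middle"},
    {"address": "48 Oak Ave", "cluster_income": "high"},
]

# Hypothetical public directory: names with addresses.
phone_book = [
    {"name": "A. Sample", "address": "12 elm st "},
    {"name": "B. Example", "address": "7 Pine Rd"},
]


def normalize(address):
    """Lower-case the address and collapse extra spaces so small differences still match."""
    return " ".join(address.lower().split())


def link_by_address(anonymous_rows, named_rows):
    """Attach a name to each anonymous row whose address appears in the named data."""
    names = {normalize(row["address"]): row["name"] for row in named_rows}
    linked = []
    for row in anonymous_rows:
        key = normalize(row["address"])
        if key in names:
            linked.append({**row, "name": names[key]})
    return linked


if __name__ == "__main__":
    print(link_by_address(census_clusters, phone_book))
```

Here one of the two nameless records is re-identified as soon as a second list containing the same address is available.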

Zuboff (2020) argues that a new economic logic is now in place, which has been relatively unchallenged through policy. She defines surveillance capitalism as a practice that claims that human experience is free material for hidden commercial data extraction, prediction, and sales. These practices are allowed to proliferate because the dangerous illusion persists that privacy is private (Zuboff, 2020). In surveillance capitalism, human experience is captured by different mechanisms, and the data are reconstituted as behaviour. This data capture is allowed to continue when customers give up pieces of themselves and when pieces of their information are taken from them without their knowledge. This concentrates wealth, knowledge and information in the hands of a few for profit. For example, Facebook produces 6 million predictions every second for commercial purposes. No one could easily replicate Facebook’s power to compile data (Zuboff, 2019).

According to Van Zoonen (2016), the general public is complicit in releasing their information. Despite indicating that they have privacy concerns, they use simple passcodes and share these codes among devices. They share their personal information on social media sites and, in general, while they do not believe that their nationality, gender or age is sensitive information, they are increasingly concerned about how data might be combined for personal profiles. They want to weigh the purpose of the data collection and assess whether the benefits outweigh the risks. The request for too much data, for example, might outweigh the benefits (Van Zoonen, 2016).

The protection of personally identifiable information for youth is even more important because they are learning the skills toward understanding consent. Also, schools may be unknowingly complicit in providing third-party access to student information through educational apps. It makes sense, therefore, that schools should guide students in the reasons behind protecting their digital privacy and help them to understand it (Robertson & Muirhead, 2020).

Some key lessons about digital privacy for Canadian students in the 21st century to understand include:

Digital technologies are advancing at such an unprecedented speed that neither the curriculum nor the general policy guidelines in education can keep pace, resulting in curriculum and policy gaps surrounding digital privacy in education. The protection of personally identifiable information (PII) has a different level of importance for youth because there are greater risks for their safety and their age may make them less able to give informed consent. Without a clear understanding, schools and school districts might be unknowingly complicit in providing third-party access to student information through educational apps. It makes sense to put in place an expectation that students who use technology in schools also need opportunities to gain an understanding of digital privacy. In the United States, for example, the Children’s Internet Protection Act requires that schools that receive funding for technology also provide students with education about online behaviour (Federal Communications Commission, 2019).

A Global Privacy Enforcement Network (GPEN), composed of 60 global privacy regulators, was established in 2010 (GPEN, 2017). These experts cautioned that teaching platforms that are internet-based can put students at risk for the disclosure of their personal information. In a 2017 review, GPEN found that most online educational apps required teachers and students to provide their emails to access a particular educational service or app, thereby providing a link to their PII. Only one-third of the educational apps reviewed allowed the teachers to create virtual classes where students’ identities could be masked (GPEN, 2017). Although teachers complied with requests to provide the students’ actual names, they found that it was difficult to delete these class lists at the end of term. While most of the online educational services restrict access to student data, almost one-third of the educational apps reviewed in the GPEN sweep did not provide helpful ways for students to opt out or to block third-party access to their data (GPEN, 2017), taking away their right to make a privacy decision, let alone an informed privacy decision.

The findings of the GPEN sweep are understandable given the speed at which educational apps have proliferated. The curriculum has not been able to keep pace. There are a number of key understandings that have not been a part of the school curriculum for generations of digital users. According to a Canadian policy brief (Bradshaw et al., 2013), here are some examples:

In the present era, it is sometimes hard to distinguish between these two. For example, if a person wishes to use the store’s wifi, they might click-through the privacy agreement without reading it. By doing this, they are agreeing to broader data capture, such as where they pause in the store to look at merchandise. Some third-party applications that collect student data may require parents to click through the privacy agreements. These agreements tend to be long and difficult to understand. This prevents parents from gaining a clear and concise explanation of the implications of sharing their children’s data.

Loyalty apps: Students need to be made aware that loyalty cards collect more than the points that they advertise in order to draw in customers. McLeod (2020) reported that his coffee order app had located his whereabouts both at his home and work address. This occurred when he was in Canada and on vacation. Through combinations of networks, the app tracked him throughout the day and night, in total reporting his location 2,700 times in five months. He had incorrectly assumed that the app was working only when he was ordering his morning coffee (McLeod, 2020).
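
To put the figure of 2,700 location reports in five months into everyday terms, the short calculation below converts it into a daily rate. The assumption of 30-day months and the function name `ping_rate` are ours, not McLeod’s, so the result is only a rough average.

```python
def ping_rate(total_pings, months, days_per_month=30):
    """Return (average pings per day, average minutes between pings).

    Assumes every month has `days_per_month` days, which is an approximation.
    """
    days = months * days_per_month
    per_day = total_pings / days
    minutes_between = 24 * 60 / per_day
    return per_day, minutes_between


if __name__ == "__main__":
    per_day, minutes = ping_rate(2700, 5)
    print(f"About {per_day:.0f} location reports per day, "
          f"or one roughly every {minutes:.0f} minutes")
```

Under that assumption, the app reported a location about 18 times a day on average, or roughly once every 80 minutes around the clock, which is far more often than a once-a-day coffee order would suggest.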

These examples illustrate that understanding consent is a central concept in understanding digital privacy.

PIPEDA: Canada’s regulatory guidelines for obtaining meaningful consent are outlined in the Personal Information Protection and Electronic Documents Act (PIPEDA; 2016). This legislation regulates privacy for the private (commercial) sector in Canada and is not specific to education or youth. In comparison, the American Children’s Online Privacy Protection Act (COPPA; 1998) designates the age of 13 as the minimum age for young persons to have an online profile. This legislation was designed to protect young internet users, but research shows that there are many underage users on the internet, raising questions about whether or not legislation is the answer (Boyd et al., 2011).

There is a gap in the national legislative direction in Canada that is designed to protect the personal information of all Canadians, including young people. There are also key understandings that should become part of basic education on internet use.

While PIPEDA does not provide privacy guidance, it regulates the commercial sector through key principles for fair information practices. These are as follows:

Notice: Users should be informed when information is collected, for what purpose, how long it will be used, and how it will be shared.

Choice: Users should have a choice about whether or not they share their information.

Access: Users should be able to check and confirm their information on request.

Security: The users’ information should be protected from unauthorized access.

Scope: Only the required information can be collected.

Purpose: The purpose for collecting the information should be disclosed.

Limitations: There should be a time limit on how long the information will be held.

Accountability: Organizations should ensure that their privacy policies are followed.

In California, a Shine the Light (2003) law requires list brokerages to tell people on request where they have sold their personal information (Electronic Privacy Information Center, 2017). To the best of our knowledge, no similar legislation exists to protect the digital privacy of Canadian children and adolescents. Chen examined the digital divide in Ontario schools and notes, “to date there is no national policy on digital learning in place” (Chen, 2015, p. 4). Without a systematic approach to digital learning, the school districts are left to define digital curriculum learning outcomes on their own. While education falls to the provinces and territories, the Annual Report of the Information and Privacy Commissioner of Ontario (IPC) (2020) does not give direction to education in Ontario. The Ontario Government report Building a Digital Ontario (2021) does not address digital privacy in education. Recently, the Ontario government has made clear that digital privacy policies and terms of use are the responsibility of local school districts (Information and Privacy Commission for Ontario, 2019; Ministry of Education, 2020).

One province in Canada, Ontario, has written a policy (Bill 13) that holds students accountable for their online activities if they impact other students negatively. Bill 13: The Accepting Schools Act (2012) requires schools to address harassment, bullying, and discrimination using a number of interventions that include suspension and expulsion. Specifically, it identifies cyberbullying behaviours, such as the online impersonation of others, and makes digital bullies subject to the consequences enacted in the legislation, including suspensions, expulsions, and police intervention. While the scope and sanctions of the Accepting Schools Act have given schools the authority to respond to cyberbullying and online aggression, it does not focus on or include language that develops the digital citizenship of students. It also does not address the professional development of teachers who, ten years after its assent, now teach students who are even more immersed in the technologies that can lead to school-based consequences.

The Organization for Economic Co-operation and Development (OECD) has 38 member countries, including Canada. They have published a Typology of Risks (OECD, 2021) for children in the digital environment. There are content, conduct and contact risks as well as consumer risks. They identify cross-cutting risks as those that include all four of the risk categories. The three cross-cutting areas are Privacy Risks, Advanced Technology Risks, and Risks on Health and Wellbeing (OECD, 2021).

Recognizing that the teaching of digital privacy is a shared responsibility, the Office of the Privacy Commissioner of Canada (OPC) (2022) has produced materials to encourage this shared responsibility. There is a graphic novel, Social Smarts: Nothing personal!, a downloadable resource in which a phone guides a student through the online world. It is aimed at youth 8-10 years old. In addition, the OPC co-sponsored a global resolution on children’s digital rights. There is a highly informative blog post for parents entitled Having a Data Privacy Week ‘family tech talk’ with suggestions on how to protect child privacy and recommendations for how to have tech talks.

There have been other, international responses regarding the shared responsibility to keep children safe online. In the UK they have created a data protection code of practice (OPC, 2022), called the Age Appropriate Design Code, which requires internet companies to respect children’s rights, act in their best interest, and make products safer for children.

There are some key considerations that can frame discussions about digital privacy in schools.

Duty of Care: Teachers and administrators have a duty of care under the Ontario Education Act that requires them to be positive role models for students and act as a kind, firm and judicious parent would act to protect them from harm. This standard of care varies depending on the type of activity and the age of the students. The younger and less experienced students would require closer supervision (Berryman, 1998). The protection of personally identifiable information (PII) for youth has a different level of importance because there are greater risks for their safety and their age may make them less able to give informed consent.

Policy Patchwork: First, educators need to be aware that they are operating in a policy forum that has multiple policy designers at different levels such as the school, educational authority or district level, provincial level and national level. For example, the Office of the Privacy Commissioner of Canada has lessons for students in Grades 9-12 that are based on privacy principles from the Personal Information Protection Acts that guide Alberta and British Columbia, An Act Respecting the Protection of Personal Information in the Private Sector from Quebec, as well as PIPEDA (a national Act, described earlier in this chapter). The lesson is designed to teach students about their rights to privacy. It provides a self-assessment for students to understand how well they understand privacy and shows them how to make a privacy complaint.

Rights to privacy: Secondly, students need to be informed about the degree of surveillance operating somewhat unregulated at present and how this has implications for their safety as well as their right to information (e.g., news and websites) that has not been curated for them. If the provincial curriculum policies do not require students to learn about the protection of PII, the implications of their digital footprint and digital permanence, then school districts or authorities will need to find ways to address this gap. The curriculum should be based on the provision of information about the right to privacy, how students can take action to protect their personal information and their rights to recourse when they are being monitored or the information (news) they seek is being curated. For example, the PVNCCDSB, an Ontario school district, has developed a Digital Privacy Scope and Sequence from Kindergarten to Grade 12 that supports student privacy alongside the acquisition of digital skills. From the importance of using correct passwords to curating a digital footprint, students learn the skills with the instruction of

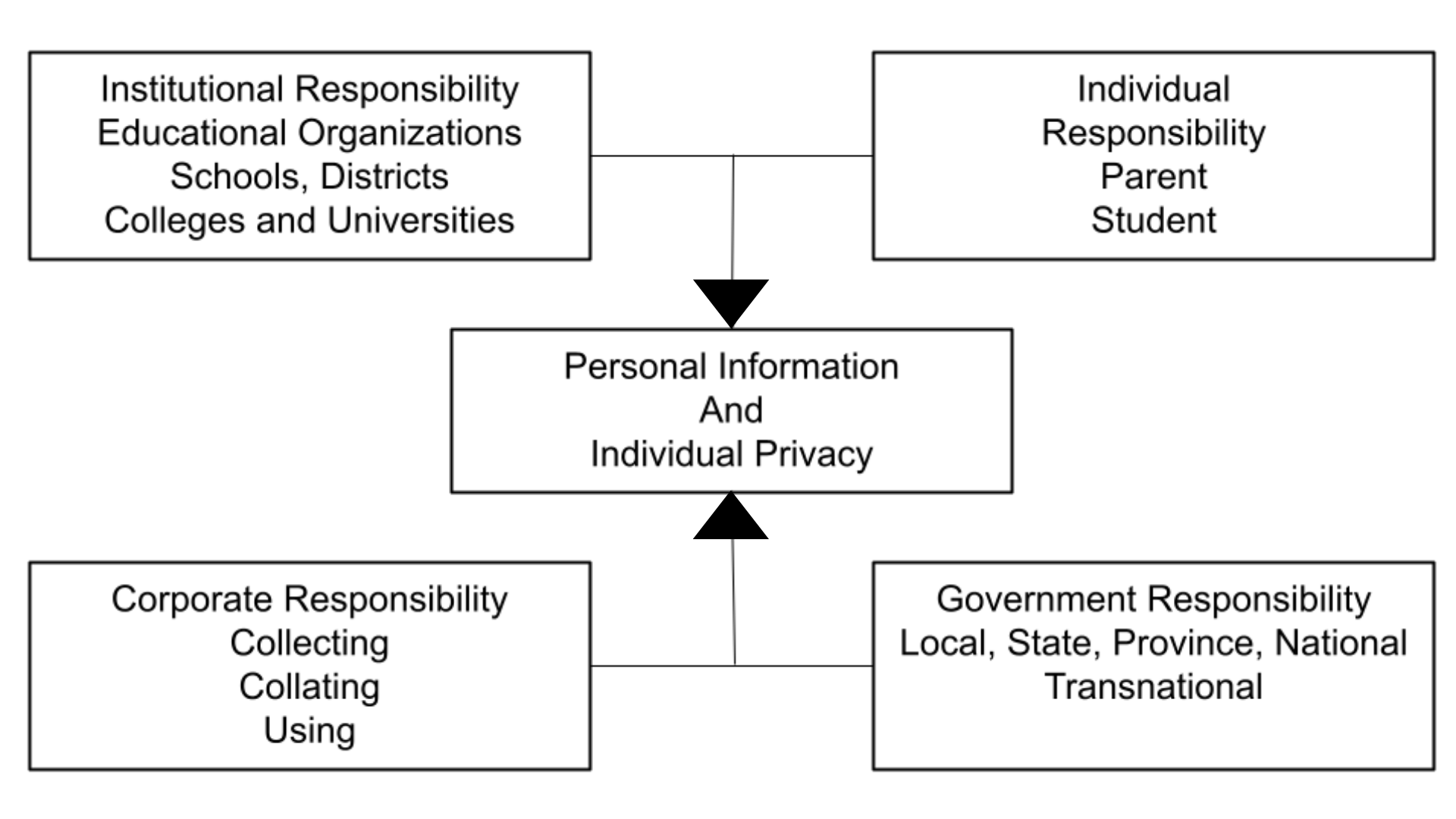

Shared responsibility: In this chapter, we have advocated that the protection of personal information and individual privacy is a shared responsibility. First of all, the educational institutions, such as the school districts or school authorities, the colleges and universities share an institutional responsibility to create policies that are clear and understood by their students. Corporations that use data for marketing purposes need to be more transparent about how they collect data and provide easy opt-out solutions. Governments have the responsibility to create digital privacy policies that protect citizens from corporate profit and overreach. Finally, individuals, parents, students and educators have the responsibility to educate themselves on the topic of digital privacy.

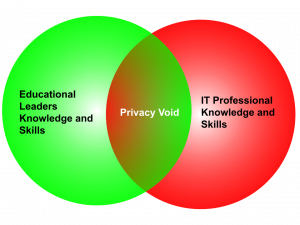

Figure 2.

A consortium of international data protection commissioners wrote a framework for teaching about data protection, the International Competency Framework on Privacy Education. Their intent was not to put the responsibility for teaching about data protection on the schools, but they wanted to share their expertise by developing a framework of digital competencies (International Working Group on Digital Education [IWG], 2016).

The commissioners did not match the framework to specific legislation but designed the competencies so that they would function irrespective of jurisdiction. Their goal was to create an international base of common knowledge and skills on digital privacy for education and distribute this information for the benefit of students and schools. This framework focuses on empowering the digital student and encourages them to work with a responsible adult. It takes into consideration research that youth do care about their privacy (e.g., Palfrey et al., 2010) and that they need to work together with concerned adults such as their parents and teachers to build their skills in a digital era (Robertson & Muirhead, 2020).

Here is a summary of the nine guiding principles in this International data competency framework:

We encourage educators who are reading this chapter to discuss their level of comfort with the types of surveillance of youth and adolescents that seem increasingly similar to the surveillance methods used by the police in crime shows on television. Educators need to step back and consider carefully their level of comfort with presentations at schools that imply that parents are not capable or are too busy to help their children and adolescents discern safe and unsafe online spaces. While we would not question a specific type of internet surveillance tool that is available to parents, we have questions about whether or not it is a good idea for parents to receive daily copies of young people’s online transactions. We also want to raise questions about the rights of surveillance organizations to out students before they are ready to disclose their sexual orientation or gender preference to their families. We encourage educators not to accept forms of surveillance and curation of content uncritically.

Secondly, we find that there are holes in the protection net for students and teachers surrounding digital privacy. Teachers are encouraged by the pamphlets from the Information and Privacy Commissioner to gain consent before posting students’ pictures and they are reminded to follow school district policies (Information & Privacy Commission, 2019). These guidelines, however, lack the specifics and the understandings implicit in the GPEN International Working Group Digital Education competencies (IWG, 2016) in areas such as digital permanence, digital footprint and the potential impact of data (re)combination on privacy and choice.

Accepting Schools Act, 2012, S.O. 2012, c. 5 – Bill 13. (2012, June 19). Chapter 5: An act to amend the education act with respect to bullying and other matters. Queen’s Printer for Ontario. https://www.ontario.ca/laws/statute/s12005

Akyurt, E. (2020, March 30). In a gray sweater on a laptop facemask [Photograph]. Unsplash. https://unsplash.com/photos/hkd1xxzyQKw

Berryman, J. H. (1998, December). Duty of care. Professionally Speaking, 1998(4). https://professionallyspeaking.oct.ca/december_1998/duty.htm

Bourdages, G. (2017, December 11). Person in front of red lights [Photograph]. Unsplash. https://unsplash.com/photos/WDbuusPOnkM

Boyd, D., Hargittai, E., Schultz, J., & Palfrey, J. (2011). Why parents help their children lie to Facebook about age: Unintended consequences of the ‘Children’s Online Privacy Protection Act.’ First Monday, 16(11), Article 3850. https://doi.org/10.5210/fm.v16i11.3850

Bradshaw, S., Harris, K., & Zeifman, H. (2013, July 22). Big data, big responsibilities: Recommendations to the office of the privacy commissioner on Canadian privacy rights in a digital age. CIGI Junior Fellows Policy Brief, 8, 1-9. https://www.cigionline.org/publications/big-data-big-responsibilities-recommendations-office-privacy-commissioner-canadian

Chen, B. (2015). Exploring the digital divide: The use of digital technologies in Ontario Public Schools. Canadian Journal of Learning and Technology / La Revue Canadienne De l’Apprentissage Et De La Technologie, 41(3), 1-23. https://doi.org/10.21432/T2KP6F

Children’s Online Privacy Protection Act of 1998, 15 USC §6501: Definitions. (1998, October 21). http://uscode.house.gov/view.xhtml?req=granuleid%3AUSC-prelim-title15-section6501&edition=prelim

Dangerfield, K. (2018, March 28). Facebook, Google and others are tracking you. Here’s how to stop targeted ads. Global News. https://globalnews.ca/news/4110311/how-to-stop-targeted-ads-facebook-googlebrowser

Electronic Privacy Information Center. (n.d.). California S.B. 27, “Shine the Light” Law. https://epic.org/privacy/profiling/sb27.html

Feathers, T. (2019, December 4). Schools spy on kids to prevent shootings, but there’s no evidence it works. Vice. https://www.vice.com/en/article/8xwze4/schools-are-using-spyware-to-prevent-shootingsbut-theres-no-evidence-it-works

Federal Communications Commission. (2019, December 30). Children’s internet protection act (CIPA). https://www.fcc.gov/consumers/guides/childrens-internet-protection-act

Fisk, N. W. (2016). Framing internet safety – the governance of youth online. MIT Press Ltd.

Flaherty, D. H. (2008, June). Reflections on reform of the federal privacy act. Office of the Privacy Commissioner of Canada. https://www.priv.gc.ca/en/privacy-topics/privacy-laws-in-canada/the-privacy-act/pa_r/pa_ref_df/

Geary, J. (2012, April 23). DoubleClick (Google): What is it and what does it do? The Guardian. https://www.theguardian.com/technology/2012/apr/23/doubleclick-tracking-trackers-cookies-web-monitoring

Global Privacy Enforcement Network (GPEN). (2017, October). GPEN Sweep 2017: User controls over personal information. UK Information Commissioner’s Office. http://www.astrid-online.it/static/upload/2017/2017-gpen-sweep—international-report1.pdf

Government of Canada. (2019, June 21). Personal Information Protection and Electronic Documents Act (PIPEDA). Justice Laws Website. http://laws-lois.justice.gc.ca/eng/acts/P-8.6/index.html

Government of Canada. (2021, June 22). Canadian internet use survey, 2020. The Daily. https://www150.statcan.gc.ca/n1/daily-quotidien/210622/dq210622b-eng.htm

Grant-Chapman, H., Laird, E., & Venzke, C. (2021, September 21). Student activity monitoring software: Research insights and recommendations. Center for Democracy and Technology. https://cdt.org/insights/student-activity-monitoring-software-research-insights-and-recommendations/

Hampson, R. (2017, December 29). Scrolling apps [Photograph]. Unsplash. https://unsplash.com/photos/cqFKhqv6Ong

Hankerson, D. L., Venzke, C., Laird, E., Grant-Chapman, H., & Thakur, D. (2022). Online and observed: Student privacy implications of school-issued devices and student activity monitoring software. Center for Democracy & Technology. https://cdt.org/insights/report-online-and-observed-student-privacy-implications-of-school-issued-devices-and-student-activity-monitoring-software/

Haskins, C. (2019, November 1). Gaggle knows everything about teens and kids in school. BuzzFeed. https://www.buzzfeednews.com/article/carolinehaskins1/gaggle-school-surveillance-technology-education

Hatmaker, T. (2021, July 7). Google faces a major multi-state antitrust lawsuit over Google Play fees. TechCrunch. https://techcrunch.com/2021/07/07/google-state-lawsuit-android-attorneys-general/

Hermant, B. (2018). Free WiFi inside [Photograph]. Unsplash. https://unsplash.com/photos/X0EtNWqMnq8

Hill, K. (2016, March 31). How Target figured out a teen girl was pregnant before her father did. Forbes. https://www.forbes.com/sites/kashmirhill/2012/02/16/how-target-figured-out-a-teen-girl-was-pregnant-before-her-father-did/?sh=5c44383a6668

Information and Privacy Commissioner of Ontario. (2019). Privacy and access to information in Ontario schools: A guide for educators. https://www.ipc.on.ca/wp-content/uploads/2019/01/fs-edu-privacy_access-guide-for-educators.pdf

International Working Group on Digital Education (IWG). (2016, October). Personal data protection competency framework for school students. International Conference of Privacy and Data Protection Commissioners. http://globalprivacyassembly.org/wp-content/uploads/2015/02/International-Competency-Framework-for-school-students-on-data-protection-and-privacy.pdf

Levine, M. A. J. (2020, September 17). Troubling Clouds: Gaps affecting privacy protection in British Columbia’s K-12 education system. BC Freedom of Information and Privacy Association. https://fipa.bc.ca/news-release-new-report-highlights-gaps-in-student-privacy-in-bcs-k-12-education-system/

Lohr, S. (2020, September 21). This deal helped turn Google into an ad powerhouse. Is that a problem? New York Times. https://www.nytimes.com/2020/09/21/technology/google-doubleclick-antitrust-ads.html

Madrigal, D. V. H., Venzke, C., Laird, E., Grant-Chapman, H., & Thakur, D. (2021, September 21). Online and observed: Student privacy implications of school-issued devices and student activity monitoring software. Center for Democracy & Technology. https://cdt.org/insights/report-online-and-observed-student-privacy-implications-of-school-issued-devices-and-student-activity-monitoring-software/

McLeod, J. (2020, June 12). Double-double tracking: How Tim Hortons knows where you sleep, work and vacation. Financial Post. https://financialpost.com/technology/tim-hortons-app-tracking-customers-intimate-data

Ministry of Education. (2020, August 13). Requirements for remote learning. Policy/Program Memorandum 164. https://www.ontario.ca/document/education-ontario-policy-and-program-direction/policyprogram-memorandum-164

Municipal Freedom of Information and Protection of Privacy Act (MFIPPA), R.S.O. 1990, c. M.56. (2021, April 19). Queen’s Printer for Ontario. https://www.ontario.ca/laws/statute/90m56

NASA. (2015, December 26). Connected world [Photograph]. Unsplash. https://unsplash.com/photos/Q1p7bh3SHj8

Organisation for Economic Co-operation and Development (OECD). (2021, January). Children in the digital environment: Revised typology of risks. OECD Digital Economy Papers, 302, 1-28. https://doi.org/10.1787/9b8f222e-en

Office of the Privacy Commissioner of Canada. (2021, August 13). Guidelines for obtaining meaningful consent. https://www.priv.gc.ca/en/privacy-topics/collecting-personal-information/consent/gl_omc_201805/#_seven

Office of the Privacy Commissioner of Canada. (2022, January 24). Data privacy week: A good time to think about protecting children’s privacy online. https://www.priv.gc.ca/en/opc-news/news-and-announcements/2022/nr-c_220124/

Ohm, P. (2010, August). Broken promises of privacy: Responding to the surprising failure of anonymization. UCLA Law Review, 57, 1701-1777. https://www.uclalawreview.org/pdf/57-6-3.pdf

Palfrey, J., Gasser, U., & Boyd, D. (2010, February 24). Response to FCC notice of inquiry 09-94: “Empowering parents and protecting children in an evolving media landscape.” Berkman Klein Center for Internet & Society at Harvard University. https://cyber.harvard.edu/sites/cyber.law.harvard.edu/files/Palfrey_Gasser_boyd_response_to_FCC_NOI_09-94_Feb2010.pdf

Robertson, L., & Corrigan, L. (2018). Do you know where your students are? Digital supervision policy in Ontario schools. Journal of Systemics, Cybernetics and Informatics, 16(2), 36-42. http://www.iiisci.org/journal/PDV/sci/pdfs/HB347PG18.pdf

Robertson, L., & Muirhead, W. J. (2020). Digital privacy in the mainstream of education. Journal of Systemics, Cybernetics and Informatics, 16(2), 118-125. http://www.iiisci.org/journal/pdv/sci/pdfs/IP099LL20.pdf

Solove, D. J. (2004). Digital Person: Technology and privacy in the information age. New York University Press. https://scholarship.law.gwu.edu/cgi/viewcontent.cgi?article=2501&context=faculty_publications

Spiske, M. (2018, May 15). Green binary code [Photograph]. Unsplash. https://unsplash.com/photos/iar-afB0QQw

Steeves, V. (2014). Young Canadians in a wired world, phase III: Life online. MediaSmarts. http://mediasmarts.ca/sites/mediasmarts/files/pdfs/publication-report/full/YCWWIII_Life_Online_FullReport.pdf

Sweeney, L. (2004). Simple demographics often identify people uniquely. Carnegie Mellon University, Data Privacy Working Paper 3. Pittsburgh 2000. https://dataprivacylab.org/projects/identifiability/paper1.pdf

Telford, H. (2017, October 29). Opinion: Public schools ask parents to sign away children’s privacy rights. Vancouver Sun. https://vancouversun.com/opinion/op-ed/opinion-public-schools-ask-parents-to-sign-away-childrens-privacy-rights/

Thompson, S. A., & Warzel, C. (2019, December 19). Twelve million phones, one dataset, zero privacy. The New York Times: The Privacy Project. https://www.nytimes.com/interactive/2019/12/19/opinion/location-tracking-cell-phone.html

Tullius, T. (2020, May 30). A painting on a wall warning visitors about video surveillance [Photograph]. Unsplash. https://unsplash.com/photos/4dKy7d3lkKM

van Zoonen, L. (2016). Privacy concerns in smart cities. Government Information Quarterly, 33(3), 472–480. https://doi.org/10.1016/j.giq.2016.06.004

Venzke, C., & Laird, E. (2021, September 21). CDT and Coalition of Education and civil rights advocates urge Congress to protect student privacy. Center for Democracy & Technology. https://cdt.org/insights/cdt-and-coalition-of-education-and-civil-rights-advocates-urge-congress-to-protect-student-privacy/

Wojcicki, S. (2009, March 11). Making ads more interesting. Google Official Blog. https://googleblog.blogspot.com/2009/03/making-ads-more-interesting.html

Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Public Affairs.

Zuboff, S. (2020, January 24). You are now remotely controlled: Surveillance capitalists control the science, and the scientists, the secrets and the truth. The New York Times. https://www.nytimes.com/2020/01/24/opinion/sunday/surveillance-capitalism.html

3

This chapter will help students to:

Students taking the Digital Privacy: Leadership and Policy course are required to write a case study about digital privacy. When writing this case study, they are encouraged to base their digital privacy scenario in a context with which they are familiar, such as their individual experience or a known context. Students examine a problem or situation, suggest solutions and reflect on these solutions. The case study was a deliberate, pedagogical choice for this course for multiple reasons. In the next section, the authors explain the theory and the practice associated with case studies through seven elements:

Case studies allow learners to examine a field of study when the context for practice in that field, in this case digital privacy leadership and policy, is continuously changing and precariously complex. Both topics, privacy and education, provide rich opportunities to theorize problems that are authentic (and sometimes ill-structured and messy) and to try out solutions in a safe space. Case studies provide a means or method to:

The context of education is complex, as is the topic of digital privacy in education. There is no such thing as a typical day for educational leaders. Their interactions include opportunities to influence and be influenced by students, teachers, instructors, staff, other administrators, parents, bus drivers, social workers, police officers, and community leaders, and this is by no means an exhaustive list.

Education is part of society and reflects what is happening in it. Education policies sit within larger policies, such as human rights legislation, and the anxieties of the larger society surface in education as well. Because education operates as an open system within the society in which it exists, its decisions and policies are, like those of society, complex and multi-faceted.

Writing a case study is like telling a story. Narrative inquiry has a long history both in and out of education, and narratives help students to study the educational experience. According to Connelly and Clandinin (1994), “One theory in educational research holds that humans are storytelling organisms who, individually and socially, lead storied lives. Thus, the study of narrative is the study of the ways humans experience the world” (p. 2). We recommend that students approach the case study as a story and keep their audience in mind as they tell it.

According to Gill (2011), a discussion case study is created to examine a topic or situation, present options, develop solutions and evaluate the solutions. Case studies are not written to identify the right and wrong solutions but to examine, analyze and weigh complex and competing insights within a context (Gill, 2011). The difference between a case and a case study is that a case is a real-life situation or as close to real-life as possible without breaching confidentiality, whereas a case study is an analysis of a situation (Gill & Mullarkey, 2015). A case study is also a clear pedagogical choice because it moves away from telling a war story about something that happened, to telling the story using a pedagogical design that encourages critical and reflective practice.

A case study creates an opportunity to explore problems within the safe context of storytelling. Cases can be treated as thought experiments in which the author explores ideas by recounting actions and individual insights as people interact with policies and problems. Problems can be described and situated within environments where solutions can be tested and explored without real-world constraints. Combining fictional and everyday contexts creates spaces where solutions to even the most confounding problems can be tested, and where potential actions can be explored and planned for. Case studies are also a means for creativity: leadership, analysis, research, emerging and new technologies, method, subject matter, evidence and data can be purposefully manipulated to explore the complexity of privacy and human behaviour.

| In this course, the case study assignment includes the following elements which are described below: a) an executive summary; b) description of the context/worksite and policy environment; c) description of the people involved, their responsibilities, and accountability in the organization in addition to an organizational chart with respective reporting lines; d) the problem and a variety of potential solutions; e) critical reflection on the desired solution and decision to resolve the problem or conflict and the impact of the solution on the actors involved; f) video presentation to describe the case study; g) identification of the key discussion questions from the case and topics for critical reflection; and h) the final paper which is a group paper. It should be approximately 2000 words excluding the reference list. Students should label which sections each group member contributed. |

Here is an explanation of each of these segments of a case study:

a) Introduction: This is a description of the case that should explain why this particular case study is compelling, important, or significant. Students are encouraged to incorporate references into the introduction so that their scenario is grounded in the literature or situated within gaps in the literature. A case study should have a one-page overview that introduces the case and hooks the interest of the readers. The one-page case overview should do the following: 1) Introduce the key decision-maker or protagonist in the case study by providing a name, this individual’s role in the organization and a brief reference to the decision that needs to be made. 2) Provide some context for the case by describing the educational institution, the country or region and, when technology is involved, the technologies in use. 3) State the decision that needs to be made. 4) Explain the alternatives to the decision.