Real Analysis of Data in Psychology, Neuroscience & Behaviour Copyright © 2024 by Ali Hashemi is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Welcome to RAD in PNB! This is an open electronic resource that is designed to aid you on your journey in understanding statistics for a variety of different psychological disciplines using simulated data based on real research. After all, a fundamental aspect of research in psychology is statistical literacy.

You might have noticed that undergraduate psychology programs almost always include at least one course on using statistics in psychological research and, perhaps to your dismay, your instructor may require you to use the statistical software R for your assignments and tests. You probably would not be surprised to hear that meaningful learning of R is not easy (or at least not as easy as we would like) for many students. This can leave students unable to apply the statistical knowledge from the course to future research opportunities. As instructors of statistics and other psychology courses, with a combined teaching experience of over 8 years, we certainly recognize that there is a disconnect between what happens in our classes and the way students use (or DON’T use) statistics in later research opportunities.

But FEAR NOT!

That is where this RAD in PNB open-educational resource comes in!

Here, we have worked with an interdisciplinary group of graduate students from the Department of Psychology, Neuroscience & Behaviour at McMaster University to create a rich set of research scenarios, datasets, analysis plans, practice questions, and R scripts that are directly related to recent and/or ongoing research being conducted by the faculty members whose labs you are likely to join in the coming year or two! These graduate students and content creators are becoming experts in their fields in no small part because of a thorough understanding of statistics and a deep mastery of R.

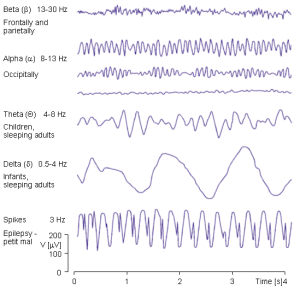

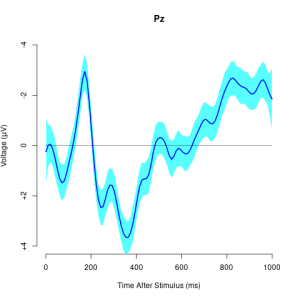

Contributing content to the chapters on psychological statistics are Sevda Montakhaby Nodeh and Carmen Tu. In these chapters you will find questions on many psychological phenomena like perception, attention, cognition, memory, development, narrative, music, and social perceptions. Contributing content to the chapters on statistics related to neuroscience are Matin Yousefbadi and Maheshwar Panday. In these chapters you will find questions focused on understanding electroencephalograms (EEGs), magnetic resonance imaging (MRI), functional MRI (fMRI), as well as key aspects of data wrangling and managing high dimensional datasets. Finally, contributing content to the chapters on behavioural and animal behavioural research and statistics are Brendan McEwan and Sina Zarini. In these chapters you will find questions on a variety of different animal species including bedbugs, flies, frogs, lizards, and fish. We honestly could not have found a more RAD team or made this open electronic resource without their dedication and fantastic contributions!

We hope you can use this OER to…

If you’ve found yourself here from outside of the Department of Psychology, Neuroscience & Behaviour at McMaster University, you can still benefit from the diversity of research questions and analysis techniques presented here. The universality of statistics makes this OER relevant for virtually any background you come here with. So take a look because, after all, a fundamental aspect of research in psychology is statistical literacy.

Before you get started, we want to share a few remarks about this OER. First, this is the first edition and therefore is not meant to exhaustively cover the research in PNB. We do, however, hope that as the years pass, more and more work is added to fully capture the recent and ongoing work in the department. Second, you can get involved with this OER! As you complete studies in your undergraduate or graduate career, we invite you to submit a representative summary of your research, data, and analysis. This is already often done with open-access research publications, so it is not a big step to transform it into an educational resource. We (Ali & Matt), or someone else on the team, will be more than happy to be involved in the process of incorporating your work. In this way, this OER will always be up-to-date with recent works. Third, explore this OER with an open mind. You will notice significant variation between different research fields. You will find that in different fields, and even within fields, different researchers prefer different visualization techniques. We provide a sample of what can be done, and hope it sparks enough interest in you that you can find the visualization and analysis techniques that answer your questions best.

So, have fun and stay RAD!

– Ali & Matt


As a cognitive researcher at the Cognition and Attention Lab at McMaster University, you are at the forefront of exploring the intricacies of proactive control within attention processes. This line of research is profoundly significant, given that the human sensory system is overwhelmed with a vast array of information at any given second, exceeding what can be processed meaningfully. The essence of research in attention is to decipher the mechanisms through which sensory input is selectively navigated and managed. This is particularly crucial in understanding how individuals anticipate and adjust in preparation for upcoming tasks or stimuli, a phenomenon especially pertinent in environments brimming with potential distractions.

In everyday life, attentional conflicts are commonplace, manifesting when goal-irrelevant information competes with goal-relevant data for attentional precedence. An example of this (one that I’m sure we are all too familiar with) is the disruption from notifications on our mobile devices, which can divert us from achieving our primary goals, such as studying or driving. From a scientific standpoint, unravelling the strategies employed by the human cognitive system to optimize the selection and sustain goal-directed behaviour represents a formidable and compelling challenge.

Your ongoing research is a direct response to this challenge, delving into how proactive control influences the ability to concentrate on task-relevant information while effectively sidelining distractions. This facet of cognitive functionality is not just a theoretical construct; it’s the very foundation of daily human behaviour and interaction.

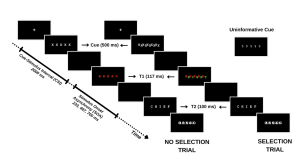

Your study methodically assesses this dynamic by engaging participants in a task where they must identify a sequence of words under varying conditions. The first word (T1) is presented in red, followed rapidly by a second word (T2) in white. The interval between the onsets of T1 and T2, known as the stimulus onset asynchrony (SOA), is a key manipulation in your experiment (233, 467, or 700 ms). The distinctiveness of your study emerges in the way you manipulate the selective attention demands in each trial, classified into no-selection (NS) and selection (S) trials.

By introducing informative and uninformative cues, your investigation probes the role of proactive control. Informative cues give participants a preview of the upcoming trial type, allowing them to prepare mentally for the impending challenge. Conversely, uninformative cues act as control trials and offer no insight into the trial type. The hypothesis posits that such informative cues enable participants to proactively fine-tune their attentional focus in preparation for attentional conflict, potentially enhancing performance on selection trials with informative cues compared to those with uninformative cues.

For an overview of the different trial types, please refer to the accompanying figure.

In this study, you are not only tackling the broader question of the role of conscious effort in attention but also contributing to a nuanced understanding of human cognitive processes, paving the way for applications that span from enhancing daily life productivity to optimizing technology interfaces for minimal cognitive disruption.

Let’s begin by running the following code in RStudio to load the required libraries. Make sure to read through the comments embedded throughout the code to understand what each line of code is doing.

# Here we create a list called "my_packages" with all of our required libraries

my_packages <- c("tidyverse", "rstatix", "readxl", "xlsx", "emmeans", "afex",

"kableExtra", "grid", "gridExtra", "superb", "ggpubr", "lsmeans")

# Checking and extracting packages that are not already installed

not_installed <- my_packages[!(my_packages %in% installed.packages()[ , "Package"])]

# Install packages that are not already installed

if(length(not_installed)) install.packages(not_installed)

# Loading the required libraries

library(tidyverse) # for data manipulation

library(rstatix) # for statistical analyses

library(readxl) # to read excel files

library(xlsx) # to create excel files

library(kableExtra) # formatting html ANOVA tables

library(superb) # production of summary stats with adjusted error bars (Cousineau, Goulet, & Harding, 2021)

library(ggpubr) # for making plots

library(grid) # for plots

library(gridExtra) # for arranging multiple ggplots for extraction

library(lsmeans) # for pairwise comparisons

Make sure to have the required dataset (“ProactiveControlCueing.xlsx”) for this exercise downloaded. Set the working directory of your current R session to the folder with the downloaded dataset. You may do this manually in RStudio by clicking on the “Session” tab at the top of the screen, and then clicking on “Set Working Directory”.

If the downloaded dataset file and your R script are in the same folder, you may choose the option of setting your working directory to the “source file location” (the location where your current R script is saved). If they are in different folders, click on the “choose directory” option and browse for the location of the downloaded dataset.

You may also do this by running the following code:

setwd(dirname(file.choose())) # select the dataset file; its folder becomes the working directory

Once you have set your working directory, either manually or by code, you can confirm it by running getwd() in the Console, which prints the full path of the folder.

Read in the downloaded dataset as “cueingData” and complete the accompanying exercises to the best of your abilities.

# Read xlsx file

cueingData = read_excel("ProactiveControlCueing.xlsx")


1. Display the first few rows to understand your dataset.

head(cueingData) # Displaying the first few rows

## # A tibble: 6 × 6

## ID CUE_TYPE TRIAL_TYPE SOA T1Score T2Score

## <dbl> <chr> <chr> <dbl> <dbl> <dbl>

## 1 1 INFORMATIVE NS 233 100 94.6

## 2 2 INFORMATIVE NS 233 100 97.2

## 3 3 INFORMATIVE NS 233 89.2 93.9

## 4 4 INFORMATIVE NS 233 100 91.9

## 5 5 INFORMATIVE NS 233 100 100

## 6 6 INFORMATIVE NS 233 100 97.3

2. Set up your factors and check for structure. Make sure your dependent measures are in numerical format, and that your factors and levels are set up correctly.

cueingData <- cueingData %>%

convert_as_factor(ID, CUE_TYPE, TRIAL_TYPE, SOA) #setting up factors

str(cueingData) #checking that factors and levels are set-up correctly. Checking to see that dependent measures are in numerical format.


## tibble [192 × 6] (S3: tbl_df/tbl/data.frame)

## $ ID : Factor w/ 16 levels "1","2","3","4",..: 1 2 3 4 5 6 7 8 9 10 ...

## $ CUE_TYPE : Factor w/ 2 levels "INFORMATIVE",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ TRIAL_TYPE: Factor w/ 2 levels "NS","S": 1 1 1 1 1 1 1 1 1 1 ...

## $ SOA : Factor w/ 3 levels "233","467","700": 1 1 1 1 1 1 1 1 1 1 ...

## $ T1Score : num [1:192] 100 100 89.2 100 100 ...

## $ T2Score : num [1:192] 94.6 97.2 93.9 91.9 100 ...

3. Perform basic data checks for missing values and data consistency.

sum(is.na(cueingData)) # Checking for missing values in the dataset

## [1] 0

summary(cueingData) # Viewing the summary of the dataset to check for inconsistencies

## ID CUE_TYPE TRIAL_TYPE SOA T1Score

## 1 : 12 INFORMATIVE :96 NS:96 233:64 Min. : 32.43

## 2 : 12 UNINFORMATIVE:96 S :96 467:64 1st Qu.: 77.78

## 3 : 12 700:64 Median : 95.87

## 4 : 12 Mean : 86.33

## 5 : 12 3rd Qu.:100.00

## 6 : 12 Max. :100.00

## (Other):120

## T2Score

## Min. : 29.63

## 1st Qu.: 83.97

## Median : 95.76

## Mean : 87.84

## 3rd Qu.:100.00

## Max. :100.00

table(cueingData$CUE_TYPE, cueingData$TRIAL_TYPE, cueingData$SOA) # Checking the number of observations per combination of factors. The design is balanced: every cell has the same number of observations.

## , , = 233

##

##

## NS S

## INFORMATIVE 16 16

## UNINFORMATIVE 16 16

##

## , , = 467

##

##

## NS S

## INFORMATIVE 16 16

## UNINFORMATIVE 16 16

##

## , , = 700

##

##

## NS S

## INFORMATIVE 16 16

## UNINFORMATIVE 16 16
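Reading the counts off the printed table works for a design this size, but the same check can be run programmatically. The sketch below is a minimal illustration, assuming the layout reported above (16 participants, 2 cue types, 2 trial types, 3 SOAs); `design_long` is a hypothetical stand-in built with `expand.grid()`, not the real dataset.

```r
# Hypothetical stand-in for the factor columns of cueingData:
# one row per participant x cue type x trial type x SOA
design_long <- expand.grid(
  ID         = factor(1:16),
  CUE_TYPE   = factor(c("INFORMATIVE", "UNINFORMATIVE")),
  TRIAL_TYPE = factor(c("NS", "S")),
  SOA        = factor(c("233", "467", "700"))
)

# Observations per design cell, collapsed over participants
cell_counts <- table(design_long$CUE_TYPE, design_long$TRIAL_TYPE, design_long$SOA)

# Balanced if every cell holds the same number of rows
is_balanced <- length(unique(as.vector(cell_counts))) == 1

# A repeated-measures ANOVA also needs exactly one row per participant per cell
one_per_cell <- all(table(design_long$ID, design_long$CUE_TYPE,
                          design_long$TRIAL_TYPE, design_long$SOA) == 1)

nrow(design_long)  # 16 x 2 x 2 x 3 = 192 rows, the same as str(cueingData)
```

On the real data, `all(table(cueingData$CUE_TYPE, cueingData$TRIAL_TYPE, cueingData$SOA) == 16)` should return `TRUE`, consistent with the counts printed above.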

5. The superb package requires your dataset to be in wide format, so convert your dataset from long to wide format. Save it as “cueingData.wide”.

cueingData.wide <- cueingData %>%

pivot_wider(names_from = c(TRIAL_TYPE, SOA, CUE_TYPE),

values_from = c(T1Score, T2Score) )

6. Using superbPlot() and cueingData.wide, calculate the mean and the standard error of the mean (SEM) for T1 and T2 scores at each combination of factor levels. Make sure to calculate Cousineau-Morey corrected SEM values.
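What does the Cousineau-Morey correction compute? The base-R sketch below is a minimal illustration, not superb's implementation, and `cousineau_morey_se()` is a hypothetical helper. Each participant's scores are centred on that participant's own mean and shifted to the grand mean (Cousineau normalization), which removes between-participant variability; the resulting SEMs are then multiplied by sqrt(J / (J - 1)), where J is the number of within-subject conditions (Morey's correction). superbPlot() can apply further adjustments on top of this, such as the `purpose = "difference"` and `popSize` options used in the calls below, so its error bars will not match this sketch exactly.

```r
# Cousineau-Morey standard errors for a fully within-subject design.
# `scores`: numeric matrix, one row per participant, one column per condition.
cousineau_morey_se <- function(scores) {
  n_subj <- nrow(scores)
  n_cond <- ncol(scores)
  # Cousineau normalization: subtract each participant's mean, add the grand mean
  # (the length-n_subj vector recycles down each column, so row i loses its own mean)
  normalized <- scores - rowMeans(scores) + mean(scores)
  # Ordinary SEM of the normalized scores in each condition
  se <- apply(normalized, 2, sd) / sqrt(n_subj)
  # Morey correction for the bias introduced by normalization
  se * sqrt(n_cond / (n_cond - 1))
}

# Hypothetical example: two participants, two conditions
toy_scores <- rbind(c(10, 20),
                    c(30, 20))
cousineau_morey_se(toy_scores)  # both conditions: sqrt(50), about 7.07
```

A useful property to keep in mind: if every participant differs from the others only by a constant offset, the normalized scores are identical across participants and the corrected SEM is zero, even though the ordinary between-participant SEM would not be.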

EXP1.T1.plot <- superbPlot(cueingData.wide,

WSFactors = c("SOA(3)", "CueType(2)", "TrialType(2)"),

variables = c("T1Score_NS_233_INFORMATIVE", "T1Score_NS_467_INFORMATIVE",

"T1Score_NS_700_INFORMATIVE", "T1Score_NS_233_UNINFORMATIVE",

"T1Score_NS_467_UNINFORMATIVE", "T1Score_NS_700_UNINFORMATIVE",

"T1Score_S_233_INFORMATIVE", "T1Score_S_467_INFORMATIVE",

"T1Score_S_700_INFORMATIVE", "T1Score_S_233_UNINFORMATIVE",

"T1Score_S_467_UNINFORMATIVE", "T1Score_S_700_UNINFORMATIVE"),

statistic = "mean",

errorbar = "SE",

adjustments = list(

purpose = "difference",

decorrelation = "CM",

popSize = 32

),

plotStyle = "line",

factorOrder = c("SOA", "CueType", "TrialType"),

lineParams = list(size=1, linetype="dashed"),

pointParams = list(size = 3))

## superb::FYI: Here is how the within-subject variables are understood:

## SOA CueType TrialType variable

## 1 1 1 T1Score_NS_233_INFORMATIVE

## 2 1 1 T1Score_NS_467_INFORMATIVE

## 3 1 1 T1Score_NS_700_INFORMATIVE

## 1 2 1 T1Score_NS_233_UNINFORMATIVE

## 2 2 1 T1Score_NS_467_UNINFORMATIVE

## 3 2 1 T1Score_NS_700_UNINFORMATIVE

## 1 1 2 T1Score_S_233_INFORMATIVE

## 2 1 2 T1Score_S_467_INFORMATIVE

## 3 1 2 T1Score_S_700_INFORMATIVE

## 1 2 2 T1Score_S_233_UNINFORMATIVE

## 2 2 2 T1Score_S_467_UNINFORMATIVE

## 3 2 2 T1Score_S_700_UNINFORMATIVE

## superb::FYI: The HyunhFeldtEpsilon measure of sphericity per group are 0.134

## superb::FYI: Some of the groups' data are not spherical. Use error bars with caution.

EXP1.T2.plot <- superbPlot(cueingData.wide,

WSFactors = c("SOA(3)", "CueType(2)", "TrialType(2)"),

variables = c("T2Score_NS_233_INFORMATIVE", "T2Score_NS_467_INFORMATIVE",

"T2Score_NS_700_INFORMATIVE", "T2Score_NS_233_UNINFORMATIVE",

"T2Score_NS_467_UNINFORMATIVE", "T2Score_NS_700_UNINFORMATIVE",

"T2Score_S_233_INFORMATIVE", "T2Score_S_467_INFORMATIVE",

"T2Score_S_700_INFORMATIVE", "T2Score_S_233_UNINFORMATIVE",

"T2Score_S_467_UNINFORMATIVE", "T2Score_S_700_UNINFORMATIVE"),

statistic = "mean",

errorbar = "SE",

adjustments = list(

purpose = "difference",

decorrelation = "CM",

popSize = 32

),

plotStyle = "line",

factorOrder = c("SOA", "CueType", "TrialType"),

lineParams = list(size=1, linetype="dashed"),

pointParams = list(size = 3)

)

## superb::FYI: Here is how the within-subject variables are understood:

## SOA CueType TrialType variable

## 1 1 1 T2Score_NS_233_INFORMATIVE

## 2 1 1 T2Score_NS_467_INFORMATIVE

## 3 1 1 T2Score_NS_700_INFORMATIVE

## 1 2 1 T2Score_NS_233_UNINFORMATIVE

## 2 2 1 T2Score_NS_467_UNINFORMATIVE

## 3 2 1 T2Score_NS_700_UNINFORMATIVE

## 1 1 2 T2Score_S_233_INFORMATIVE

## 2 1 2 T2Score_S_467_INFORMATIVE

## 3 1 2 T2Score_S_700_INFORMATIVE

## 1 2 2 T2Score_S_233_UNINFORMATIVE

## 2 2 2 T2Score_S_467_UNINFORMATIVE

## 3 2 2 T2Score_S_700_UNINFORMATIVE

## superb::FYI: The HyunhFeldtEpsilon measure of sphericity per group are 0.226

## superb::FYI: Some of the groups' data are not spherical. Use error bars with caution.
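The twelve names passed to `variables` must follow the order superbPlot() expects: as the FYI listings above show, the first factor in `WSFactors` (SOA) changes fastest, then CueType, then TrialType. Typing them by hand invites typos. A minimal sketch of generating them instead, assuming the `<score>_<TRIAL_TYPE>_<SOA>_<CUE_TYPE>` column names produced by the pivot_wider() call in question 5; `make_superb_vars()` is a hypothetical helper.

```r
# Build superbPlot() variable names in the order implied by
# WSFactors = c("SOA(3)", "CueType(2)", "TrialType(2)").
# expand.grid() varies its first argument fastest, matching the FYI listing.
make_superb_vars <- function(prefix) {
  cells <- expand.grid(
    SOA        = c("233", "467", "700"),
    CUE_TYPE   = c("INFORMATIVE", "UNINFORMATIVE"),
    TRIAL_TYPE = c("NS", "S"),
    stringsAsFactors = FALSE
  )
  # Column names created by pivot_wider(): <score>_<TRIAL_TYPE>_<SOA>_<CUE_TYPE>
  paste(prefix, cells$TRIAL_TYPE, cells$SOA, cells$CUE_TYPE, sep = "_")
}

t1_vars <- make_superb_vars("T1Score")
t2_vars <- make_superb_vars("T2Score")
head(t1_vars, 4)
```

These vectors can then be passed as `variables = t1_vars` and `variables = t2_vars` in the two superbPlot() calls.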

# Re-naming levels of the factors

levels(EXP1.T1.plot$data$SOA) <- c("1" = "233", "2" = "467", "3" = "700")

levels(EXP1.T2.plot$data$SOA) <- c("1" = "233", "2" = "467", "3" = "700")

levels(EXP1.T1.plot$data$TrialType) <- c("1" = "No Selection", "2" = "Selection")

levels(EXP1.T2.plot$data$TrialType) <- c("1" = "No Selection", "2" = "Selection")

levels(EXP1.T1.plot$data$CueType) <- c("1" = "Informative", "2" = "Uninformative")

levels(EXP1.T2.plot$data$CueType) <- c("1" = "Informative", "2" = "Uninformative")

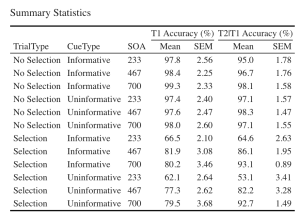

7. Let’s create a beautiful printable HTML table of the summary stats for T1 and T2 Scores. This summary table can then be used in your manuscript. I suggest that you visit the following link for guides on how to create printable tables.

# Extracting summary data with Cousineau-Morey SEM bars

EXP1.T1.summaryData <- data.frame(EXP1.T1.plot$data)

EXP1.T2.summaryData <- data.frame(EXP1.T2.plot$data)

# Rounding values in each column

# round(x, 1) rounds to the specified number of decimal places

EXP1.T1.summaryData$center <- round(EXP1.T1.summaryData$center,1)

EXP1.T1.summaryData$upperwidth <- round(EXP1.T1.summaryData$upperwidth,2)

EXP1.T2.summaryData$center <- round(EXP1.T2.summaryData$center,1)

EXP1.T2.summaryData$upperwidth <- round(EXP1.T2.summaryData$upperwidth,2)

# merging T1 and T2|T1 summary tables

EXP1_summarystat_results <- merge(EXP1.T1.summaryData, EXP1.T2.summaryData, by=c("TrialType","CueType","SOA"))

# Rename the column name

colnames(EXP1_summarystat_results)[colnames(EXP1_summarystat_results) == "center.x"] ="Mean"

colnames(EXP1_summarystat_results)[colnames(EXP1_summarystat_results) == "center.y"] ="Mean"

colnames(EXP1_summarystat_results)[colnames(EXP1_summarystat_results) == "upperwidth.x"] ="SEM"

colnames(EXP1_summarystat_results)[colnames(EXP1_summarystat_results) == "upperwidth.y"] ="SEM"

# deleting columns by name "lowerwidth.x" and "lowerwidth.y" in each summary table

EXP1_summarystat_results <- EXP1_summarystat_results[ , ! names(EXP1_summarystat_results) %in% c("lowerwidth.x", "lowerwidth.y")]

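# Removing any ".1" suffixes from column names (fixed = TRUE matches the literal text ".1", not "any character followed by 1")

colnames(EXP1_summarystat_results) <- gsub(".1", "", colnames(EXP1_summarystat_results), fixed = TRUE)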

# Printable HTML summary-statistics table

EXP1_summarystat_results %>%

kbl(caption = "Summary Statistics") %>%

kable_classic(full_width = F, html_font = "Cambria", font_size = 14) %>%

add_header_above(c(" " = 3, "T1 Accuracy (%)" = 2, "T2|T1 Accuracy (%)" = 2))

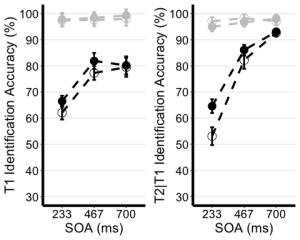

8. Use the separate (unmerged) summary data frames created in question 7 (EXP1.T1.summaryData and EXP1.T2.summaryData) and the ggplot() function to create separate summary line plots for T1 and T2 scores. The line plot will visualize the relationship between SOA and the dependent measure while considering the factors of cue type and trial type. Your plot must have the following characteristics:

EXP1.T1.ggplotplot <- ggplot(EXP1.T1.summaryData, aes(x=SOA, y=center, color=TrialType, shape = CueType,

group=interaction(CueType, TrialType))) +

geom_point(data=filter(EXP1.T1.summaryData, CueType == "Uninformative"), shape=1, size=4.5) + # assigning shape type to level of factor

geom_point(data=filter(EXP1.T1.summaryData, CueType == "Informative"), shape=16, size=4.5) + # assigning shape type to level of factor

geom_line(linetype="dashed", linewidth=1.2) + # change line thickness and line style

scale_color_manual(values = c("gray78", "black") ) +

xlab("SOA (ms)") +

ylab("T1 Identification Accuracy (%)") +

theme_classic() + # It has no background, no bounding box.

theme(axis.line=element_line(linewidth=1.5), # We make the axes thicker...

axis.text = element_text(size = 14, colour = "black"), # their text bigger...

axis.title = element_text(size = 16, colour = "black"), # their labels bigger...

panel.grid.major.y = element_line(), # adding horizontal grid lines

legend.position = "none") +

coord_cartesian(ylim=c(30, 100)) +

scale_y_continuous(breaks=seq(30, 100, 10)) + # Ticks from 30-100, every 10

geom_errorbar(aes(ymin=center+lowerwidth, ymax=center+upperwidth), width = 0.12, linewidth = 1) # adding error bars from summary table

EXP1.T2.ggplotplot <- ggplot(EXP1.T2.summaryData, aes(x=SOA, y=center, color=TrialType, shape=CueType,

group=interaction(CueType, TrialType))) +

geom_point(data=filter(EXP1.T2.summaryData, CueType == "Uninformative"), shape=1, size=4.5) + # assigning shape type to level of factor

geom_point(data=filter(EXP1.T2.summaryData, CueType == "Informative"), shape=16, size=4.5) + # assigning shape type to level of factor

geom_line(linetype="dashed", linewidth=1.2) + # change line thickness and line style

scale_color_manual(values = c("gray78", "black")) +

xlab("SOA (ms)") +

ylab("T2|T1 Identification Accuracy (%)") +

theme_classic() + # It has no background, no bounding box.

theme(axis.line=element_line(linewidth=1.5), # We make the axes thicker...

axis.text = element_text(size = 14, colour = "black"), # their text bigger...

axis.title = element_text(size = 16, colour = "black"), # their labels bigger...

panel.grid.major.y = element_line(), # adding horizontal grid lines

legend.position = "none") +

guides(fill = guide_legend(override.aes = list(shape = 16) ),

shape = guide_legend(override.aes = list(fill = "black"))) +

coord_cartesian(ylim=c(30, 100)) +

scale_y_continuous(breaks=seq(30, 100, 10)) + # Ticks from 30-100, every 10

geom_errorbar(aes(ymin=center+lowerwidth, ymax=center+upperwidth), width = 0.12, linewidth = 1) # adding error bars from summary table

9. Use ggarrange() to display your plots together.

ggarrange(EXP1.T1.ggplotplot, EXP1.T2.ggplotplot,

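nrow = 1, ncol = 2, common.legend = FALSE, # two panels side by side

widths = c(1, 1)) # relative panel widths; use ggsave()'s width and height arguments to control the figure size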

10. Use the data in long format (“cueingData”) and the anova_test() function to compute a two-way ANOVA for each dependent variable, on selection trials only. Set up cue type and SOA as within-participants factors. (Hint: use the filter() function.)

T1_2anova <- anova_test(

data = filter(cueingData, TRIAL_TYPE == "S"), dv = T1Score, wid = ID,

within = c(CUE_TYPE, SOA), detailed = TRUE, effect.size = "pes")

T2_2anova <- anova_test(

data = filter(cueingData, TRIAL_TYPE == "S"), dv = T2Score, wid = ID,

within = c(CUE_TYPE, SOA), detailed = TRUE, effect.size = "pes")

get_anova_table(T1_2anova)

## ANOVA Table (type III tests)

##

## Effect DFn DFd SSn SSd F p p<.05 pes

## 1 (Intercept) 1.00 15.00 534043.211 30705.523 260.886 6.80e-11 * 0.946

## 2 CUE_TYPE 1.00 15.00 253.665 506.454 7.513 1.50e-02 * 0.334

## 3 SOA 1.29 19.35 5091.853 2660.275 28.710 1.16e-05 * 0.657

## 4 CUE_TYPE:SOA 2.00 30.00 77.140 1061.051 1.091 3.49e-01 0.068

get_anova_table(T2_2anova)

## ANOVA Table (type III tests)

##

## Effect DFn DFd SSn SSd F p p<.05 pes

## 1 (Intercept) 1 15 593913.106 11288.706 789.169 2.19e-14 * 0.981

## 2 CUE_TYPE 1 15 661.761 426.947 23.250 2.24e-04 * 0.608

## 3 SOA 2 30 20001.563 3852.629 77.875 1.33e-12 * 0.838

## 4 CUE_TYPE:SOA 2 30 519.154 1298.144 5.999 6.00e-03 * 0.286
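The F and partial eta-squared (pes) columns can be reproduced from the sums of squares, which is a useful sanity check before reporting. With SSn and SSd the effect and error sums of squares, F = (SSn / DFn) / (SSd / DFd) and pes = SSn / (SSn + SSd). For SOA in the T1 table, the degrees of freedom (1.29, 19.35) are sphericity-corrected: rstatix's get_anova_table() applies the Greenhouse-Geisser correction by default when sphericity is violated, which multiplies both dfs by the same epsilon, so F is unchanged and only the p-value moves. A minimal sketch using values copied from the tables above (`anova_check()` is a hypothetical helper):

```r
# Recompute F and partial eta squared from an ANOVA table's sums of squares
anova_check <- function(SSn, SSd, DFn, DFd) {
  F_value <- (SSn / DFn) / (SSd / DFd)   # ratio of mean squares
  pes     <- SSn / (SSn + SSd)           # partial eta squared
  c(F = F_value, pes = pes)
}

# T1, CUE_TYPE effect (values from get_anova_table(T1_2anova))
t1_cue <- anova_check(SSn = 253.665, SSd = 506.454, DFn = 1, DFd = 15)

# T1, SOA effect: use the uncorrected dfs (2, 30); F does not depend on epsilon
t1_soa <- anova_check(SSn = 5091.853, SSd = 2660.275, DFn = 2, DFd = 30)

# The correction only changes the p-value: evaluate F on the corrected dfs
p_soa_gg <- pf(t1_soa[["F"]], df1 = 1.29, df2 = 19.35, lower.tail = FALSE)

round(t1_cue, 3)  # F about 7.513, pes about 0.334
round(t1_soa, 3)  # F about 28.71, pes about 0.657
```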

Exercise 5: Post-hoc tests

11. Filter for selection trials first, then group your data by SOA and use the pairwise_t_test() function to compare informative and uninformative trials at each level of SOA. Store and display your computation as “T2_sel_pwc”.

T2_sel_pwc <- filter(cueingData, TRIAL_TYPE == "S") %>%

group_by(SOA) %>%

pairwise_t_test(T2Score ~ CUE_TYPE, paired = TRUE, p.adjust.method = "holm", detailed = TRUE) %>%

add_significance("p.adj")


T2_sel_pwc

## # A tibble: 3 × 16

## SOA estimate .y. group1 group2 n1 n2 statistic p df

## <fct> <dbl> <chr> <chr> <chr> <int> <int> <dbl> <dbl> <dbl>

## 1 233 11.5 T2Score INFORMATIVE UNINFO… 16 16 4.36 5.55e-4 15

## 2 467 3.89 T2Score INFORMATIVE UNINFO… 16 16 1.97 6.8 e-2 15

## 3 700 0.356 T2Score INFORMATIVE UNINFO… 16 16 0.190 8.52e-1 15

## # ℹ 6 more variables: conf.low <dbl>, conf.high <dbl>, method <chr>,

## # alternative <chr>, p.adj <dbl>, p.adj.signif <chr>
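Because the data are grouped by SOA before pairwise_t_test() is called, each group contains a single comparison (informative vs uninformative), so `p.adjust.method = "holm"` has nothing to adjust and p.adj equals p. If you want to control the family-wise error rate across the three SOA comparisons, one option is to adjust the three p-values together with base R's p.adjust(). A minimal sketch using the rounded p-values printed above (with the real object you would pass `T2_sel_pwc$p`):

```r
# Unadjusted p-values for informative vs uninformative at each SOA
# (rounded values copied from the T2_sel_pwc output above)
p_by_soa <- c("233" = 5.55e-4, "467" = 0.068, "700" = 0.852)

# Holm step-down adjustment across the family of three comparisons
p_holm <- p.adjust(p_by_soa, method = "holm")
p_holm
```

Here the conclusions do not change: only the 233 ms comparison falls below .05, with or without the adjustment.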

Welcome! In this assignment, we will be entering a cognition and memory lab at McMaster University. Specifically, we will be examining data from an intriguing cognitive psychology study that explores the role of repetition in recognition memory.

Most of us are familiar with the phrase ‘practice makes perfect’. This motivational idiom aligns with intuition and is confirmed by many real-world observations. Much empirical research also supports this view: repeated opportunities to encode a stimulus improve subsequent memory retrieval and perceptual identification. These observations suggest that stimulus repetition strengthens underlying memory representations.

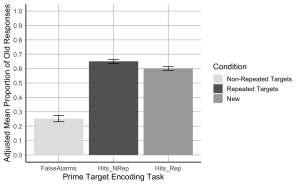

The present study focuses on a contradictory idea: that stimulus repetition can weaken memory encoding. The experiment consisted of three stages, namely a study phase, a distractor phase, and a surprise recognition memory test.

In the study phase, participants read aloud a red target word preceded by a briefly presented green prime word. On half of the trials, the prime and target were the same (repeated trials), and on the other half, the prime and target were different (not-repeated trials). The accompanying figure gives an overview of the two trial types. Following the study phase, participants engaged in a 10-minute distractor task consisting of math problems they had to solve by hand.

The final phase was a surprise recognition memory test. On each test trial, participants were shown a red word and asked to respond old if they had seen the word during the study phase, and new if they had never encountered it before. Half of the test trials showed words from the study phase and the other half showed new words.

Let’s begin by running the following code to load the required libraries. Make sure to read through the comments embedded throughout the code to understand what each line of code is doing.

# Load necessary libraries

library(rstatix) #for performing basic statistical tests

library(dplyr) #for sorting data

library(readxl) #for reading excel files

library(tidyr) #for data sorting and structure

library(ggplot2) #for visualizing your data

library(plotrix) #for computing basic summary stats

Make sure to have the required dataset (“RepDecrementdataset.xlsx”) for this exercise downloaded. Set the working directory of your current R session to the folder with the downloaded dataset. You may do this manually in RStudio by clicking on the “Session” tab at the top of the screen, and then clicking on “Set Working Directory”.

If the downloaded dataset file and your R script are in the same folder, you may choose the option of setting your working directory to the “source file location” (the location where your current R script is saved). If they are in different folders, click on the “choose directory” option and browse for the location of the downloaded dataset.

You may also do this by running the following code:

setwd(dirname(file.choose())) # select the dataset file; its folder becomes the working directory

Once you have set your working directory, either manually or by code, you can confirm it by running getwd() in the Console, which prints the full path of the folder.

Read in the downloaded dataset as “MemoryData” and complete the accompanying exercises to the best of your abilities.

MemoryData <- read_excel('RepDecrementdataset.xlsx')


1. Display the first few rows of your dataset to familiarize yourself with its structure and contents.

head(MemoryData) #Displaying the first few rows

## # A tibble: 6 × 7

## ID Hits_NRep Hits_Rep FalseAlarms Misses_Nrep Misses_Rep CorrectRej

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 46 34 13 14 26 107

## 2 2 43 44 27 17 16 93

## 3 3 43 35 23 17 24 97

## 4 4 37 36 56 23 24 64

## 5 5 39 35 49 21 25 71

## 6 6 38 43 28 22 17 92

str(MemoryData) #Checking structure of dataset

## tibble [24 × 7] (S3: tbl_df/tbl/data.frame)

## $ ID : num [1:24] 1 2 3 4 5 6 7 8 9 10 ...

## $ Hits_NRep : num [1:24] 46 43 43 37 39 38 20 24 36 38 ...

## $ Hits_Rep : num [1:24] 34 44 35 36 35 43 11 29 43 27 ...

## $ FalseAlarms: num [1:24] 13 27 23 56 49 28 4 11 46 9 ...

## $ Misses_Nrep: num [1:24] 14 17 17 23 21 22 40 36 23 22 ...

## $ Misses_Rep : num [1:24] 26 16 24 24 25 17 49 31 17 33 ...

## $ CorrectRej : num [1:24] 107 93 97 64 71 92 116 109 74 111 ...

colnames(MemoryData)

## [1] "ID" "Hits_NRep" "Hits_Rep" "FalseAlarms" "Misses_Nrep"

## [6] "Misses_Rep" "CorrectRej"

2. Calculate Total Trials for Each Condition:

Note that if the value in “TotalNRep” and “TotalRep” is less than 60 for a participant, it indicates that certain word trials were excluded during the study phase due to issues (e.g., the participant read aloud the prime word instead of the target, leading to trial spoilage).

MemoryData <- MemoryData %>%

mutate(TotalNRep = Hits_NRep + Misses_Nrep)

MemoryData <- MemoryData %>%

mutate(TotalRep = Hits_Rep + Misses_Rep)

MemoryData <- MemoryData %>%

mutate(TotalNew = FalseAlarms + CorrectRej)
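The note above suggests a quick check for spoiled trials. A minimal base-R sketch, using the first ten participants copied from the str(MemoryData) output and assuming (from the note) that a complete condition contains 60 studied words; `memory_first10` and `expected_per_condition` are illustrative names.

```r
# First ten participants, copied from the str(MemoryData) output above
memory_first10 <- data.frame(
  ID          = 1:10,
  Hits_NRep   = c(46, 43, 43, 37, 39, 38, 20, 24, 36, 38),
  Hits_Rep    = c(34, 44, 35, 36, 35, 43, 11, 29, 43, 27),
  FalseAlarms = c(13, 27, 23, 56, 49, 28, 4, 11, 46, 9),
  Misses_Nrep = c(14, 17, 17, 23, 21, 22, 40, 36, 23, 22),
  Misses_Rep  = c(26, 16, 24, 24, 25, 17, 49, 31, 17, 33),
  CorrectRej  = c(107, 93, 97, 64, 71, 92, 116, 109, 74, 111)
)

# Same totals as in the mutate() calls above
memory_first10$TotalNRep <- memory_first10$Hits_NRep + memory_first10$Misses_Nrep
memory_first10$TotalRep  <- memory_first10$Hits_Rep + memory_first10$Misses_Rep

# Assumption from the note: a complete condition has 60 studied words
expected_per_condition <- 60
spoiled <- memory_first10$TotalNRep < expected_per_condition |
  memory_first10$TotalRep < expected_per_condition

# Participants with at least one excluded study trial
memory_first10[spoiled, c("ID", "TotalNRep", "TotalRep")]
```

On the full dataset, `MemoryData$ID[MemoryData$TotalNRep < 60 | MemoryData$TotalRep < 60]` lists every affected participant. Converting counts to proportions in the next step divides by these per-participant totals, so a spoiled trial lowers the denominator rather than counting as a miss.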

3. Transform the counts in the hits, misses, false alarms, and correct rejections columns into proportions. Do this by dividing each count by the total number of trials for the respective condition (e.g., divide hits for non-repeated trials by “TotalNRep”).

MemoryData$Hits_NRep <- (MemoryData$Hits_NRep/MemoryData$TotalNRep)

MemoryData$Misses_Nrep <- (MemoryData$Misses_Nrep/MemoryData$TotalNRep)

MemoryData$Hits_Rep <- (MemoryData$Hits_Rep/MemoryData$TotalRep)

MemoryData$Misses_Rep <- (MemoryData$Misses_Rep/MemoryData$TotalRep)

MemoryData$CorrectRej <- (MemoryData$CorrectRej/MemoryData$TotalNew)

MemoryData$FalseAlarms <- (MemoryData$FalseAlarms/MemoryData$TotalNew)

4. Once the proportions are calculated, remove the “TotalNew”, “TotalRep”, and “TotalNRep” columns from the dataset as they are no longer needed for further analysis.

MemoryData <- MemoryData[, !names(MemoryData) %in% c("TotalNew", "TotalRep","TotalNRep")]

5. Use the pivot_longer() function from the tidyr package to convert your data from wide to long format. Pivot the columns “Hits_NRep”, “Hits_Rep”, and “FalseAlarms”, setting the new column names to “Condition” and “Proportion” for the reshaped data.

long_df <- MemoryData %>%

pivot_longer(

cols = c(Hits_NRep, Hits_Rep, FalseAlarms),

names_to = "Condition",

values_to = "Proportion"

)

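6. Using your long-format dataset, group your data by ID and calculate the per-subject mean and the grand mean of the Proportion column. Use them to compute adjusted scores (Proportion minus the subject mean plus the grand mean), then calculate the mean and SEM of the adjusted scores for each condition.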

data_adjusted <- long_df %>%

group_by(ID) %>%

mutate(SubjectMean = mean(Proportion, na.rm = TRUE)) %>%

ungroup() %>%

mutate(GrandMean = mean(Proportion, na.rm = TRUE)) %>%

mutate(AdjustedScore = Proportion - SubjectMean + GrandMean)

# Calculate the mean and SEM of the adjusted scores

summary_df <- data_adjusted %>%

group_by(Condition) %>%

summarize(

AdjustedMean = mean(AdjustedScore, na.rm = TRUE),

AdjustedSEM = sd(AdjustedScore, na.rm = TRUE) / sqrt(n())

)
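The adjustment in step 6 is a common within-subject normalization. Each score is shifted by subtracting that participant's mean and adding the grand mean. This removes overall differences between participants before the SEM is computed. Below is a minimal base R sketch on hypothetical data (three participants, three conditions; `toy_long` is illustrative only). It checks two properties of the adjustment. First, every participant ends up with the same mean. Second, the condition means are unchanged.

```r
# Hypothetical long-format data: 3 participants x 3 conditions
toy_long <- data.frame(
  ID = rep(1:3, each = 3),
  Condition = rep(c("FalseAlarms", "Hits_NRep", "Hits_Rep"), times = 3),
  Proportion = c(0.10, 0.60, 0.55,
                 0.30, 0.80, 0.75,
                 0.20, 0.70, 0.60)
)

grand_mean <- mean(toy_long$Proportion)
subject_mean <- ave(toy_long$Proportion, toy_long$ID)  # each row gets its participant's mean
toy_long$AdjustedScore <- toy_long$Proportion - subject_mean + grand_mean

# After adjustment, every participant's mean equals the grand mean
adjusted_subject_means <- tapply(toy_long$AdjustedScore, toy_long$ID, mean)

# Condition means are the same before and after adjustment
cond_raw <- tapply(toy_long$Proportion, toy_long$Condition, mean)
cond_adj <- tapply(toy_long$AdjustedScore, toy_long$Condition, mean)
```

The condition means stay the same only because every participant contributes one score to every condition, as in the design above. Only the spread used for the SEM changes.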

7. Create a bar plot in which the x-axis represents the Prime-target Encoding task conditions, the y-axis shows the adjusted mean proportion of old responses, and error bars represent the adjusted SEM. Begin by setting a custom colour for each condition. The bar representing false alarms (“New”) should be “gray89”, the bar for “Hits_NRep” (“Non-Repeated Targets”) should be “gray39”, and the bar for “Hits_Rep” (“Repeated Targets”) should be “darkgrey”.

# Create the bar plot with adjusted SEM error bars

ggplot(summary_df, aes(x = Condition, y = AdjustedMean, fill = Condition)) +

geom_bar(stat = "identity", position = position_dodge()) +

geom_errorbar(aes(ymin = AdjustedMean - AdjustedSEM, ymax = AdjustedMean + AdjustedSEM), width = 0.2, position = position_dodge(0.9)) +

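scale_fill_manual(values = c("Hits_NRep" = "gray39", "Hits_Rep" = "darkgrey", "FalseAlarms" = "gray89"),

breaks = c("Hits_NRep", "Hits_Rep", "FalseAlarms"), # fix the legend order so each label matches its condition

labels = c("Non-Repeated Targets", "Repeated Targets", "New")) +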

labs(

x = "Prime Target Encoding Task",

y = "Adjusted Mean Proportion of Old Responses",

fill = "Condition"

) +

scale_y_continuous(breaks = seq(0, 1, by = 0.1), limits = c(0, 1)) +

theme_minimal(base_size = 14) +

theme(

axis.line = element_line(color = "black"),

axis.title = element_text(color = "black"),

panel.grid.major = element_line(color = "grey", linewidth = 0.5),

panel.grid.minor = element_blank(),

legend.title = element_text(color = "black")

)

8. Using the wide-format data frame “MemoryData”, conduct a paired-samples t-test. Compare the hit rate, collapsed across the two repetition conditions (repeated/not-repeated), with the false alarm rate to assess participants’ ability to distinguish old from new items.

collapsed_hitdata <- MemoryData %>%

mutate(HitRate = (Hits_NRep + Hits_Rep) / 2)

# Conduct a paired-samples t-test

t_test_results <- t.test(collapsed_hitdata$HitRate, collapsed_hitdata$FalseAlarms, paired = TRUE)

print(t_test_results)

##

## Paired t-test

##

## data: collapsed_hitdata$HitRate and collapsed_hitdata$FalseAlarms

## t = 11.621, df = 23, p-value = 4.179e-11

## alternative hypothesis: true mean difference is not equal to 0

## 95 percent confidence interval:

## 0.3071651 0.4401983

## sample estimates:

## mean difference

## 0.3736817

#Hit rates were higher than false alarm rates, t(23) = 11.62, p < .001.
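A paired-samples t-test is equivalent to a one-sample t-test on the within-participant differences. The t statistic is the mean difference divided by its standard error. The sketch below demonstrates this on five hypothetical participants (the values are invented for illustration) and compares three results: `t.test(..., paired = TRUE)`, a one-sample test on the differences, and a hand calculation.

```r
# Hypothetical hit and false-alarm rates for five participants
hit_rate <- c(0.70, 0.65, 0.80, 0.55, 0.75)
fa_rate  <- c(0.20, 0.30, 0.25, 0.35, 0.15)

# Paired-samples t-test, as in step 8
paired <- t.test(hit_rate, fa_rate, paired = TRUE)

# Equivalent one-sample t-test on the within-participant differences
diffs <- hit_rate - fa_rate
one_sample <- t.test(diffs, mu = 0)

# The same t statistic by hand: mean difference / standard error of the differences
t_manual <- mean(diffs) / (sd(diffs) / sqrt(length(diffs)))

c(paired = unname(paired$statistic),
  one_sample = unname(one_sample$statistic),
  manual = t_manual)
```

This is also why the df reported above is 23: it is the number of participants minus one, not the total number of scores minus two.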

9. Using the wide-format data frame “MemoryData”, conduct a paired-samples t-test comparing the hit rates for the not-repeated and repeated targets.

# Conduct a paired-samples t-test for non-repeated vs. repeated hit rates

t_test_results_hits <- t.test(collapsed_hitdata$Hits_NRep, collapsed_hitdata$Hits_Rep, paired = TRUE)

# Print the results for the hit rate comparison

print(t_test_results_hits)

##

## Paired t-test

##

## data: collapsed_hitdata$Hits_NRep and collapsed_hitdata$Hits_Rep

## t = 2.5431, df = 23, p-value = 0.01817

## alternative hypothesis: true mean difference is not equal to 0

## 95 percent confidence interval:

## 0.009071364 0.088174399

## sample estimates:

## mean difference

## 0.04862288

#Hit rates were higher for not-repeated targets than for repeated targets, t(23) = 2.54, p = .018.

You are a researcher at the Early Childhood Development Research Center. Your latest project investigates how infants respond to different combinations of face race and music emotion. Specifically, you are interested in whether infants associate own- and other-race faces with music of different emotional valences (happy and sad music).

Your project was completed in collaboration with your colleagues in China. While you were responsible for designing your experiment, your collaborators were responsible for recruiting participants and collecting your data.

Chinese infants (3 to 9 months old) were recruited to participate in your experiment. Each infant was randomly assigned to one of the four face-race + music conditions where they saw a series of neutral own- or other-race faces paired with happy or sad musical excerpts.

In the own-happy condition, infants watched six Asian face videos sequentially paired with six happy musical excerpts. In the other-sad condition, infants watched six African face videos sequentially paired with sad musical excerpts. Otherwise, the conditions were procedurally identical, differing only in the face-music combination. Infant eye movements were recorded using an eye tracker.

Your goal is to determine how face race and music emotion, as well as their interaction, influence the looking behaviour of infants.

Your independent variables:

Your dependent variables:

Let’s begin by loading the required libraries and the dataset as “BabyData”. To do so, download the file “infant_eye_tracking_study.csv” and run the following code. Remember to replace ‘path_to_your_downloaded_file’ with the actual path to the dataset on your system.

BabyData <- read.csv('path_to_your_downloaded_file/infant_eye_tracking_study.csv')

library(rstatix) #for performing basic statistical tests

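library(dplyr) # for data manipulation

library(tidyr) # for reshaping and tidying data

library(ggplot2) # for visualizing your data

library(readr) # for reading rectangular data files

library(ggpubr) # for publication-ready plots

library(gridExtra) # for arranging multiple plots in one figure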

Please complete the accompanying exercises to the best of your abilities.

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=30#h5p-3

1. Display the first few rows to understand your dataset.

head(BabyData) # Display the first six rows of the dataset

## Age.in.Days Condition Face.Race Music.Emotion Age.Group

## 1 93 Other-Race Happy Music African happy 3

## 2 98 Other-Race Happy Music African happy 3

## 3 93 Other-Race Happy Music African happy 3

## 4 93 Other-Race Happy Music African happy 3

## 5 93 Other-Race Happy Music African happy 3

## 6 100 Other-Race Happy Music African happy 3

## Total.Looking.Time First.Face.Looking.Time Participant.ID

## 1 44.035 8.273 HJOGM7704U

## 2 18.324 6.938 JHSEG5414N

## 3 24.600 4.225 OCQFX4970K

## 4 12.919 7.537 KLDOF5559R

## 5 12.755 4.230 HHPGJ9661Y

## 6 38.777 9.351 NVCPX9518V

2. Use relocate() to re-order your columns such that your “Participant.ID” column appears as the first column in your dataset.

BabyData <- BabyData %>% relocate(Participant.ID, .before = Age.in.Days)

3. Check your data for any missing values. Remove any rows with missing or NA values from the dataset.

sum(is.na(BabyData)) # Checking for missing values in the dataset

## [1] 3

summary(BabyData) # Locate the missing values (see the NA's entry below)

## Participant.ID Age.in.Days Condition Face.Race

## Length:193 Min. : 79.0 Length:193 Length:193

## Class :character 1st Qu.:127.0 Class :character Class :character

## Mode :character Median :185.0 Mode :character Mode :character

## Mean :189.3

## 3rd Qu.:246.0

## Max. :316.0

##

## Music.Emotion Age.Group Total.Looking.Time First.Face.Looking.Time

## Length:193 Min. :3.000 Min. : 1.654 Min. : 0.160

## Class :character 1st Qu.:3.000 1st Qu.:20.671 1st Qu.: 5.309

## Mode :character Median :6.000 Median :30.381 Median : 7.495

## Mean :6.093 Mean :29.196 Mean : 7.041

## 3rd Qu.:9.000 3rd Qu.:38.196 3rd Qu.: 9.185

## Max. :9.000 Max. :50.000 Max. :11.823

## NA's :3

BabyData <- BabyData[!is.na(BabyData$First.Face.Looking.Time), ] # Remove the 3 rows with a missing First.Face.Looking.Time
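The code above removes rows with a missing First.Face.Looking.Time. That is sufficient here because the summary reports NAs only in that column. If missing values could appear in several columns, base R's `complete.cases()` is a more general option: it keeps only rows with no NA in any column. A minimal sketch on a hypothetical data frame (`toy`, with invented values):

```r
# Hypothetical data frame with missing values in two different columns
toy <- data.frame(
  id    = c("A", "B", "C", "D"),
  first = c(5.1, NA, 7.3, 6.0),
  total = c(30.2, 25.0, NA, 41.8)
)

colSums(is.na(toy))                       # missing values per column

only_first <- toy[!is.na(toy$first), ]    # drops row B only
no_missing <- toy[complete.cases(toy), ]  # drops rows B and C
```

Since tidyr is already loaded, `drop_na()` with no column arguments gives the same result as `complete.cases()`.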

4. Check your data again for any missing values and check data consistency.

sum(is.na(BabyData)) # Checking for missing values in the dataset

## [1] 0

summary(BabyData) # Viewing the summary of the dataset to check for inconsistencies

After the three incomplete rows are removed, the summary should report 190 rows (Length:190), matching the str() output below. It should also no longer show an NA's entry for First.Face.Looking.Time. The statistics for the other columns may change slightly.

5. Check the structure of your data and make sure that your factor columns (Music.Emotion, Face.Race, and Condition) are set up correctly.

str(BabyData)

## 'data.frame': 190 obs. of 8 variables:

## $ Participant.ID : chr "HJOGM7704U" "JHSEG5414N" "OCQFX4970K" "KLDOF5559R" ...

## $ Age.in.Days : int 93 98 93 93 93 100 93 91 98 100 ...

## $ Condition : chr "Other-Race Happy Music" "Other-Race Happy Music" "Other-Race Happy Music" "Other-Race Happy Music" ...

## $ Face.Race : chr "African" "African" "African" "African" ...

## $ Music.Emotion : chr "happy" "happy" "happy" "happy" ...

## $ Age.Group : int 3 3 3 3 3 3 3 3 3 3 ...

## $ Total.Looking.Time : num 44 18.3 24.6 12.9 12.8 ...

## $ First.Face.Looking.Time: num 8.27 6.94 4.22 7.54 4.23 ...

BabyData$Face.Race <- as.factor(BabyData$Face.Race)

BabyData$Music.Emotion <- as.factor(BabyData$Music.Emotion)

BabyData$Condition <- as.factor(BabyData$Condition)

6. Check to see if your design is balanced or unbalanced.

table(BabyData$Age.Group, BabyData$Condition) #unbalanced design

##

## Other-Race Happy Music Other-Race Sad Music Own-Race Happy Music

## 3 16 12 12

## 6 15 19 19

## 9 14 17 17

##

## Own-Race Sad Music

## 3 17

## 6 15

## 9 17

7. Conduct a multiple linear regression with first-face looking time as the predicted variable and age group, face race, and their interaction as the predictors. Display the result.

lm_model1 <- lm(First.Face.Looking.Time ~ Age.Group*Face.Race, data = BabyData)

summary(lm_model1)

##

## Call:

## lm(formula = First.Face.Looking.Time ~ Age.Group * Face.Race,

## data = BabyData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -7.4524 -1.4478 0.3645 2.0507 4.5670

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.50710 0.75573 7.287 8.75e-12 ***

## Age.Group 0.22815 0.11542 1.977 0.0496 *

## Face.RaceChinese -0.04233 1.05722 -0.040 0.9681

## Age.Group:Face.RaceChinese 0.05036 0.16071 0.313 0.7544

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.658 on 186 degrees of freedom

## Multiple R-squared: 0.05411, Adjusted R-squared: 0.03885

## F-statistic: 3.546 on 3 and 186 DF, p-value: 0.01564

8. Conduct a multiple linear regression similar to the one in the previous question. Your predicted variable should be total looking time, with Age.Group, Face.Race, Music.Emotion, and all of their interactions as the predictors.

lm_model2 <- lm(Total.Looking.Time ~ Age.Group * Face.Race * Music.Emotion, data = BabyData)

summary(lm_model2)

##

## Call:

## lm(formula = Total.Looking.Time ~ Age.Group * Face.Race * Music.Emotion,

## data = BabyData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -24.8431 -8.0316 -0.1786 8.2809 27.7472

##

## Coefficients:

## Estimate Std. Error t value

## (Intercept) 28.0472 4.5406 6.177

## Age.Group -0.5167 0.7144 -0.723

## Face.RaceChinese -11.5424 6.6960 -1.724

## Music.Emotionsad -15.2955 6.6960 -2.284

## Age.Group:Face.RaceChinese 3.0376 1.0229 2.970

## Age.Group:Music.Emotionsad 3.4057 1.0229 3.330

## Face.RaceChinese:Music.Emotionsad 26.8342 9.3820 2.860

## Age.Group:Face.RaceChinese:Music.Emotionsad -5.7421 1.4252 -4.029

## Pr(>|t|)

## (Intercept) 4.16e-09 ***

## Age.Group 0.47045

## Face.RaceChinese 0.08645 .

## Music.Emotionsad 0.02351 *

## Age.Group:Face.RaceChinese 0.00338 **

## Age.Group:Music.Emotionsad 0.00105 **

## Face.RaceChinese:Music.Emotionsad 0.00473 **

## Age.Group:Face.RaceChinese:Music.Emotionsad 8.22e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 11.72 on 182 degrees of freedom

## Multiple R-squared: 0.1742, Adjusted R-squared: 0.1425

## F-statistic: 5.486 on 7 and 182 DF, p-value: 9.76e-06
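The `*` operator in a model formula is shorthand for all main effects plus every interaction among the crossed variables. That is why the output above lists seven coefficients besides the intercept. The base R sketch below expands the formula from step 8 with `terms()` and checks that it matches the fully written-out version. When reading the coefficients, note that Age.Group enters as a numeric variable and that Face.Race and Music.Emotion use treatment coding, with African and happy as the reference levels (as the coefficient names show). The lower-order coefficients are therefore conditional effects. For example, the Age.Group coefficient is the age slope for African faces with happy music, not an average effect of age.

```r
# The crossed formula used in step 8
f_crossed <- Total.Looking.Time ~ Age.Group * Face.Race * Music.Emotion

# The same model with every term written out explicitly
f_explicit <- Total.Looking.Time ~ Age.Group + Face.Race + Music.Emotion +
  Age.Group:Face.Race + Age.Group:Music.Emotion + Face.Race:Music.Emotion +
  Age.Group:Face.Race:Music.Emotion

crossed_terms <- attr(terms(f_crossed), "term.labels")
explicit_terms <- attr(terms(f_explicit), "term.labels")

crossed_terms                            # main effects, then 2-way, then 3-way terms
identical(crossed_terms, explicit_terms)
```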

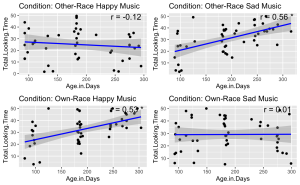

9. Given the significant three-way interaction, conduct Pearson correlation analyses to examine the linear relationship between total face looking time and participant age group (3, 6, or 9 months) within each condition.

unique_conditions <- unique(BabyData$Condition) #Get unique conditions

correlation_results <- list() ## Initialize a list to store results

# Loop through each condition and perform Pearson correlation

for (condition in unique_conditions) {

# Subset data for the current condition

subset_data <- subset(BabyData, Condition == condition)

subset_data$Age.Group <- as.numeric(as.character(subset_data$Age.Group))

# Perform Pearson correlation

correlation_test <- cor.test(subset_data$Age.Group, subset_data$Total.Looking.Time, method = "pearson")

# Store the result

correlation_results[[condition]] <- correlation_test

}

# Print the results

correlation_results

## $`Other-Race Happy Music`

##

## Pearson's product-moment correlation

##

## data: subset_data$Age.Group and subset_data$Total.Looking.Time

## t = -0.64059, df = 43, p-value = 0.5252

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.3799180 0.2020743

## sample estimates:

## cor

## -0.09722666

##

##

## $`Other-Race Sad Music`

##

## Pearson's product-moment correlation

##

## data: subset_data$Age.Group and subset_data$Total.Looking.Time

## t = 4.4535, df = 46, p-value = 5.356e-05

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.3136678 0.7206311

## sample estimates:

## cor

## 0.5488839

##

##

## $`Own-Race Happy Music`

##

## Pearson's product-moment correlation

##

## data: subset_data$Age.Group and subset_data$Total.Looking.Time

## t = 3.8943, df = 46, p-value = 0.0003166

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.2490419 0.6851408

## sample estimates:

## cor

## 0.4979416

##

##

## $`Own-Race Sad Music`

##

## Pearson's product-moment correlation

##

## data: subset_data$Age.Group and subset_data$Total.Looking.Time

## t = 0.25438, df = 47, p-value = 0.8003

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.2466891 0.3149919

## sample estimates:

## cor

## 0.03707966
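The loop above stores each complete `cor.test()` result in a list. That makes the four conditions somewhat hard to compare at a glance. A possible alternative, sketched below on hypothetical data (`toy`, two invented conditions), splits the data by condition and keeps only r, df, and p in one data frame. The column names `r`, `df`, and `p` in the output table are illustrative choices.

```r
# Hypothetical data: two conditions, three age groups, four infants per age group
toy <- data.frame(
  Condition = rep(c("Cond A", "Cond B"), each = 12),
  Age.Group = rep(c(3, 6, 9), times = 8),
  Total.Looking.Time = c(21, 29, 41, 19, 31, 39, 22, 30, 38, 20, 28, 42,
                         30, 25, 32, 28, 35, 27, 31, 29, 26, 33, 30, 28)
)

# Run one Pearson correlation per condition and keep r, df, and p in one table
cor_table <- do.call(rbind, lapply(split(toy, toy$Condition), function(d) {
  ct <- cor.test(d$Age.Group, d$Total.Looking.Time, method = "pearson")
  data.frame(Condition = d$Condition[1],
             r = unname(ct$estimate),
             df = unname(ct$parameter),
             p = ct$p.value)
}))

cor_table
```

Applied to BabyData, the same pattern would produce one row per condition. The df values would be the 43, 46, 46, and 47 reported above (n minus 2 in each condition).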

10. Visualize the relationship between total face looking time and participant age in days, categorized by different experimental conditions. Each condition should be represented in its own panel within a single figure. Additionally, for each panel:

# Get unique conditions

conditions <- unique(BabyData$Condition)

# Create a list to store plots

plot_list <- list()

# Loop through each condition and create a plot

for (condition in conditions) {

# Subset data for the condition

subset_data <- subset(BabyData, Condition == condition)

# Calculate Pearson correlation

cor_test <- cor.test(subset_data$Age.in.Days, subset_data$Total.Looking.Time)

# Create a scatter plot with regression line

p <- ggplot(subset_data, aes(x = Age.in.Days, y = Total.Looking.Time)) +

geom_point() +

geom_smooth(method = 'lm', color = 'blue') +

ggtitle(paste('Condition:', condition)) +

annotate("text", x = Inf, y = Inf, label = paste('r =', round(cor_test$estimate, 2), ifelse(cor_test$p.value < 0.05, "*", "")),

hjust = 1.1, vjust = 1.1, size = 5)

# Add plot to list

plot_list[[condition]] <- p

}

do.call(grid.arrange, c(plot_list, ncol = 2))

11. Analyze the impact of music emotional valence on looking time for own- and other-race faces in each infant age group (3, 6, and 9 months). Specifically, perform a series of independent-samples t-tests comparing total looking time for own- versus other-race faces within each combination of age group and music emotion.

# Ensure Age.Group is treated as a factor

BabyData$Age.Group <- as.factor(BabyData$Age.Group)

# Perform t-tests for each combination of Age.Group, Music.Emotion, and Face.Race

results <- list()

for(age_group in levels(BabyData$Age.Group)) {

for(music_emotion in unique(BabyData$Music.Emotion)) {

# Filter data for specific age group and music emotion

subset_data <- BabyData %>%

filter(Age.Group == age_group, Music.Emotion == music_emotion)

# Perform the t-test comparing Total.Looking.Time for own- vs. other-race faces

t_test_result <- t.test(Total.Looking.Time ~ Face.Race, data = subset_data)

# Store the results

result_name <- paste(age_group, music_emotion, sep="_")

results[[result_name]] <- t_test_result

}

}

# Print results

print(results)

## $`3_happy`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = -0.3153, df = 22.294, p-value = 0.7555

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## -12.465591 9.173257

## sample estimates:

## mean in group African mean in group Chinese

## 22.76875 24.41492

##

##

## $`3_sad`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = -1.0492, df = 22.86, p-value = 0.3051

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## -17.297369 5.658457

## sample estimates:

## mean in group African mean in group Chinese

## 21.32725 27.14671

##

##

## $`6_happy`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = 0.43226, df = 27.324, p-value = 0.6689

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## -6.401791 9.821475

## sample estimates:

## mean in group African mean in group Chinese

## 32.90100 31.19116

##

##

## $`6_sad`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = -0.62019, df = 27.075, p-value = 0.5403

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## -9.635393 5.162123

## sample estimates:

## mean in group African mean in group Chinese

## 30.20163 32.43827

##

##

## $`9_happy`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = -6.0414, df = 21.29, p-value = 5.08e-06

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## -27.28467 -13.31931

## sample estimates:

## mean in group African mean in group Chinese

## 19.13607 39.43806

##

##

## $`9_sad`

##

## Welch Two Sample t-test

##

## data: Total.Looking.Time by Face.Race

## t = 3.0179, df = 26.642, p-value = 0.005546

## alternative hypothesis: true difference in means between group African and group Chinese is not equal to 0

## 95 percent confidence interval:

## 3.335708 17.533234

## sample estimates:

## mean in group African mean in group Chinese

## 38.68853 28.25406
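The t-tests in step 11 are Welch tests. Welch is the default in `t.test()` when `var.equal` is not set. This is why the degrees of freedom above are not whole numbers: Welch's test does not assume equal group variances, and its df comes from the Welch–Satterthwaite approximation. The sketch below reproduces the t statistic and df by hand on hypothetical looking times (`x` and `y` are invented) and compares them with `t.test()`.

```r
# Hypothetical looking times (seconds) for two independent groups
x <- c(22.1, 18.4, 30.2, 25.7, 19.9, 27.3)
y <- c(35.6, 41.2, 38.9, 44.0, 36.5)

vx <- var(x) / length(x)   # squared standard error of mean(x)
vy <- var(y) / length(y)   # squared standard error of mean(y)

# Welch t statistic and Welch-Satterthwaite degrees of freedom
t_welch  <- (mean(x) - mean(y)) / sqrt(vx + vy)
df_welch <- (vx + vy)^2 / (vx^2 / (length(x) - 1) + vy^2 / (length(y) - 1))

res <- t.test(x, y)        # Welch test (var.equal = FALSE by default)

c(by_hand = t_welch, t.test = unname(res$statistic))
c(by_hand = df_welch, t.test = unname(res$parameter))
```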

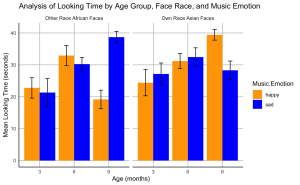

12. Create a bar plot to visualize the effects of music emotional valence on the looking time of infants at different ages for own- and other-race faces.

# Calculate means and standard errors

data_summary <- BabyData %>%

group_by(Age.Group, Face.Race, Music.Emotion) %>%

summarize(Mean = mean(Total.Looking.Time),

SE = sd(Total.Looking.Time)/sqrt(n())) %>%

ungroup()

## `summarise()` has grouped output by 'Age.Group', 'Face.Race'. You can override

## using the `.groups` argument.

# Create the bar plot

ggplot(data_summary, aes(x = factor(Age.Group), y = Mean, fill = Music.Emotion)) +

geom_bar(stat = "identity", position = position_dodge()) +

geom_errorbar(aes(ymin = Mean - SE, ymax = Mean + SE),

position = position_dodge(0.9), width = 0.25) +

scale_fill_manual(values = c("happy" = "orange", "sad" = "blue")) +

facet_wrap(~ Face.Race, scales = "free_x", labeller = labeller(Face.Race = c(Chinese = "Own Race Asian Faces", African = "Other Race African Faces"))) +

labs(x = "Age (months)", y = "Mean Looking Time (seconds)", title = "Analysis of Looking Time by Age Group, Face Race, and Music Emotion") +

theme_minimal() +

theme(

panel.grid.minor = element_blank(),

panel.grid.major = element_line(color = "gray", linewidth = 0.5, linetype = "solid"), # Major grid lines

axis.line = element_line(color = "black", linewidth = 0.5) # Axis lines

)

Welcome to the Perception and Sensorimotor Lab at McMaster University. As a budding cognitive psychologist here, you are about to embark on an explorative journey into the depth effect—a captivating psychological phenomenon that suggests visual events occurring in closer proximity (near space) are processed more efficiently than those farther away (far space). This effect provides a unique window into the cognitive architecture underpinning our sensory experiences, possibly implicating the involvement of the dorsal visual stream, which processes spatial relationships and movements in near space, and the ventral stream, known for its role in recognizing detailed visual information.

Your goal is to dissect whether the depth effect is task-dependent, aligning strictly with the dorsal/ventral stream dichotomy, or whether it represents a universal processing advantage for stimuli in near space across various cognitive tasks.

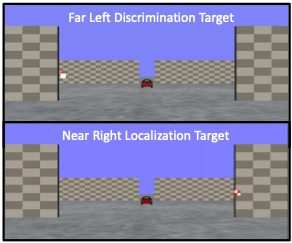

Your research journey begins in your lab. Imagine the lab as a gateway to a three-dimensional world, where the concept of depth is not only a subject of study but also a lived sensory experience for your participants! Seated inside a darkened tent, each participant grips a steering wheel, their primary tool for interaction and inputting responses. Before them, a screen comes to life with a 3D virtual environment meticulously engineered to test the frontiers of depth perception.

The virtual landscape that participants encounter balances simplicity and complexity. As illustrated in the figure, a ground plane extends into the depth of the screen in front of the participant. It is intersected by two sets of upright placeholder walls at two depths, near and far. The walls stand on either side of the central axis, mirrored across the midline. The textures of the ground (a random dot matrix) and of the placeholders (a checkerboard pattern) keep a consistent density. These texture gradients, together with the difference in retinal size between near and far objects, act as subtle cues for depth perception.

From their first-person point of view, participants are asked to:

Through this experiment, you are not just observing the depth effect; you are dissecting it, unearthing the cognitive processes that allow humans to navigate the intricate dance of depth in our daily lives!

Let’s begin by loading the required libraries and the dataset. To do so, download the file “NearFarRep_Outlier.csv” and run the following code.

# Loading the required libraries

library(tidyverse) # for data manipulation

library(rstatix) # for statistical analyses

library(emmeans) # for pairwise comparisons

library(afex) # for running anova using aov_ez and aov_car

library(kableExtra) # formatting html ANOVA tables

library(ggpubr) # for making plots

library(grid) # for plots

library(gridExtra) # for arranging multiple ggplots for extraction

library(lsmeans) # for pairwise comparisons

Read in the downloaded dataset “NearFarRep_Outlier.csv” as “NearFarData”. Remember to replace ‘path_to_your_downloaded_file’ with the actual path to the dataset on your system.

NearFarData <- read.csv('path_to_your_downloaded_file/NearFarRep_Outlier.csv')

The dataset contains the response times of participants and includes the following columns:

Please complete the accompanying exercises to the best of your abilities.

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=34#h5p-4

1. Display the first few rows to understand your dataset. Display all column names in the dataset.

head(NearFarData) # Displaying the first few rows

## X ID Response Con TarRT

## 1 1 10 Loc Near 0.6200754

## 2 2 10 Loc Near 0.2219719

## 3 3 1 Loc Near 0.2270377

## 4 4 9 Loc Near 0.5270686

## 5 5 25 Loc Near 0.2272455

## 6 6 18 Loc Near 0.2292785

colnames(NearFarData)

## [1] "X" "ID" "Response" "Con" "TarRT"

2. Set up “Response” and “Con” as factors, then check the structure of your data to make sure your factors and levels are set up correctly.

NearFarData <- NearFarData %>% convert_as_factor(Response, Con)

str(NearFarData)

## 'data.frame': 11154 obs. of 5 variables:

## $ X : int 1 2 3 4 5 6 7 8 9 10 ...

## $ ID : int 10 10 1 9 25 18 4 9 8 18 ...

## $ Response: Factor w/ 2 levels "Disc","Loc": 2 2 2 2 2 2 2 2 2 2 ...

## $ Con : Factor w/ 2 levels "Far","Near": 2 2 2 2 2 2 2 2 2 2 ...

## $ TarRT : num 0.62 0.222 0.227 0.527 0.227 ...

3. Perform basic data checks for missing values and data consistency.

sum(is.na(NearFarData)) # Checking for missing values in the dataset

## [1] 0

4. Convert the values in your dependent measure column “TarRT” from seconds to milliseconds.

NearFarData$TarRT <- NearFarData$TarRT * 1000

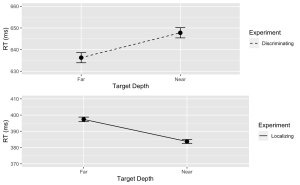

# Calculate means and standard errors for each combination of 'Response' and 'Con'

summary_df <- NearFarData %>%

group_by(Response, Con) %>%

summarise(

MeanRT = mean(TarRT),

SERT = sd(TarRT) / sqrt(n())

)

6. Using the “ggplot2” package, create a line plot with error bars for the Discrimination task.

# Now, using ggplot to create the plot

Disc.plot <- ggplot(data = filter(summary_df, Response == "Disc"), aes(x = Con, y = MeanRT, group = Response)) +

geom_line(aes(linetype = "Discriminating")) + # Add a linetype aesthetic

geom_errorbar(aes(ymin = MeanRT - SERT, ymax = MeanRT + SERT), width = 0.1) +

geom_point(size = 3) +

theme_gray() +

labs(

x = "Target Depth",

y = "RT (ms)",

color = "Experiment",

linetype = "Experiment") +

scale_linetype_manual(values = "dashed") + # Set the linetype for "Disc" to dashed

ylim(630, 660) # Set the y-axis limits

7. Similarly, create a line plot with error bars for the Localization task, using the same settings as the Discrimination plot with the following exceptions:

Loc.plot <- ggplot(data = filter(summary_df, Response=="Loc"), aes(x = Con, y = MeanRT, group = Response)) +

geom_line(aes(linetype = "Localizing")) + # Add a linetype aesthetic

geom_errorbar(aes(ymin = MeanRT - SERT, ymax = MeanRT + SERT), width = 0.1) +

geom_point(size = 3) +

theme_gray() +

labs(

x = "Target Depth",

y = "RT (ms)",

color = "Experiment",

linetype = "Experiment") +

scale_linetype_manual(values = "solid") + # Set the line type for "Loc" to solid

ylim(370, 410) # Set the y-axis limits

8. Finally, use the grid.arrange() function from the “gridExtra” package to stack the plots for the Discrimination and Localization tasks on top of each other.

grid.arrange(Disc.plot, Loc.plot, ncol = 1) # Stack the plots on top of each other

9. Using the “anova_test” function, conduct a two-way between-subjects ANOVA to investigate the effects of Con (Condition) and Response (Task type) on the target response times (TarRT). After running the ANOVA, use the “get_anova_table” function to present the results.

anova <- anova_test(

data = NearFarData, dv = TarRT, wid = ID,

between = c(Con, Response), detailed = TRUE, effect.size = "pes")

## Warning: The 'wid' column contains duplicate ids across between-subjects

## variables. Automatic unique id will be created

get_anova_table(anova)

## ANOVA Table (type III tests)

##

## Effect SSn SSd DFn DFd F p p<.05

## 1 (Intercept) 2.972143e+09 111582983 1 11150 296993.268 0.00e+00 *

## 2 Con 3.185839e+03 111582983 1 11150 0.318 5.73e-01

## 3 Response 1.762985e+08 111582983 1 11150 17616.741 0.00e+00 *

## 4 Con:Response 4.390483e+05 111582983 1 11150 43.872 3.67e-11 *

## pes

## 1 9.64e-01

## 2 2.86e-05

## 3 6.12e-01

## 4 4.00e-03
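The pes column reports partial eta squared, SS_effect / (SS_effect + SS_error). The sketch below recomputes it in two ways: from the sums of squares printed in the table, and from F and the degrees of freedom. The results agree with the table up to its displayed rounding (about 0.612 for Response and about 0.004 for the interaction). The calculation also shows that the Con:Response interaction accounts for well under 1% of the variance, even though it is significant with more than 11,000 residual degrees of freedom.

```r
# Sums of squares, F values, and dfs copied from the ANOVA table above
SSn <- c(Con = 3.185839e+03, Response = 1.762985e+08, `Con:Response` = 4.390483e+05)
SSd <- 111582983
F_val <- c(Con = 0.318, Response = 17616.741, `Con:Response` = 43.872)
DFn <- 1
DFd <- 11150

# Partial eta squared from sums of squares: SS_effect / (SS_effect + SS_error)
pes_ss <- SSn / (SSn + SSd)

# The same quantity from F and the degrees of freedom
pes_f <- (F_val * DFn) / (F_val * DFn + DFd)

round(cbind(pes_ss, pes_f), 5)
```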

# Fitting a linear model to the data

lm_model <- lm(TarRT ~ Con * Response, data = NearFarData)

# Get the estimated marginal means

emm <- emmeans(lm_model, specs = pairwise ~ Con * Response)

# View the Tukey-adjusted pairwise comparisons

print(emm$contrasts)

## contrast estimate SE df t.ratio p.value

## Far Disc - Near Disc -11.5 2.70 11150 -4.250 0.0001

## Far Disc - Far Loc 238.9 2.67 11150 89.348 <.0001

## Far Disc - Near Loc 252.6 2.67 11150 94.581 <.0001

## Near Disc - Far Loc 250.4 2.69 11150 93.131 <.0001

## Near Disc - Near Loc 264.0 2.68 11150 98.341 <.0001

## Far Loc - Near Loc 13.6 2.66 11150 5.124 <.0001

##

## P value adjustment: tukey method for comparing a family of 4 estimates

You are a researcher in the EdCog Lab at McMaster University. The Lab is conducting a study aimed at understanding the beliefs of instructors about student abilities in STEM (Science, Technology, Engineering, and Math) disciplines. This study is motivated by a growing body of literature suggesting that instructors’ beliefs about intelligence and success—categorized into brilliance belief (the idea that success requires innate talent), universality belief (the belief that success is achievable by everyone versus only a select few), and mindset beliefs (the view that intelligence and skills are either fixed or can change over time)—play a crucial role in educational practices and student outcomes. Understanding these beliefs is particularly important in STEM fields, where perceptions of innate talent versus learned skills can significantly influence teaching approaches and student engagement.

The survey was distributed through LimeSurvey to instructors across the Science, Health Sciences, and Engineering faculties. Participants were asked a series of Likert-scale questions (ranging from strongly disagree to strongly agree) aimed at assessing their beliefs in each of the three areas. Additional demographic and background questions were included to control for variables such as years of teaching experience, field of specialization, and level of education.

The sample data file (“EdCogData.xlsx”) for this exercise is structured as follows:

Let’s begin by running the following code in RStudio to load the required libraries. Make sure to read through the comments embedded throughout the code to understand what each line of code is doing.

# Here we create a list called "my_packages" with all of our required libraries

my_packages <- c("tidyverse", "readxl", "xlsx", "dplyr", "ggplot2")

# Checking and extracting packages that are not already installed

not_installed <- my_packages[!(my_packages %in% installed.packages()[ , "Package"])]

# Install packages that are not already installed

if(length(not_installed)) install.packages(not_installed)

# Loading the required libraries

library(tidyverse) # for data manipulation

library(dplyr) # for data manipulation

library(readxl) # to read excel files

library(xlsx) # to create excel files

library(ggplot2) # for making plots

Make sure you have downloaded the required dataset (“EdCogData.xlsx”) for this exercise. Set the working directory of your current R session to the folder containing the downloaded dataset. You can do this manually in RStudio by clicking the “Session” tab at the top of the screen and then clicking “Set Working Directory”.

If the downloaded dataset and your R script are in the same folder, you can choose the “To Source File Location” option (the folder where your current R script is saved). If they are in different folders, choose the “Choose Directory...” option and browse to the folder containing the downloaded dataset.

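You can also do this by running the following code. It opens a file browser; select the downloaded dataset file, and the working directory will be set to the folder that contains it:

setwd(dirname(file.choose()))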

If you set your working directory through the menu, RStudio prints the corresponding setwd() command, including the full folder path, in the Console. Either way, you can confirm the current working directory by running getwd().

Read in the downloaded dataset as “edcogData” and complete the accompanying exercises to the best of your abilities.

# Read xlsx file

edcogData <- read_excel("EdCogData.xlsx") # name must match the "edcogData" object used in the exercises below

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=39#h5p-5

Note: Shaded boxes hold the R code, while the white boxes display the code’s output, just as it appears in RStudio. The “#” sign indicates a comment that won’t execute in RStudio.

Load the dataset into RStudio and inspect its structure.

head(edcogData) # View the first few rows of the dataset

ncol(edcogData) #Q1

#[1] 26

colnames(edcogData) #Q2

#[1] "ID" "Brilliance1" "Brilliance2" "Brilliance3" "Brilliance4"

#[6] "Brilliance5" "MindsetGrowth1" "MindsetGrowth2" "MindsetGrowth3" "MindsetGrowth4"

#[11] "MindsetGrowth5" "MindsetFixed1" "MindsetFixed2" "MindsetFixed3" "MindsetFixed4"

#[16] "MindsetFixed5" "Nonuniversality1" "Nonuniversality2" "Nonuniversality3" "Nonuniversality4"

#[21] "Nonuniversality5" "Universality1" "Universality2" "Universality3" "Universality4"

#[26] "Universality5"

Prepare the data for analysis by ensuring it is in the correct format.

1. Are there any missing values in the dataset?

sum(is.na(edcogData))

[1] 0
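sum(is.na()) reports a single total for the whole dataset. If that total had been non-zero, a per-column and per-row breakdown would show where the gaps are. A minimal sketch on a small hypothetical data frame (toy_df is made up for illustration and is not the EdCogData file):

```r
# Hypothetical toy data with two missing responses (not the EdCogData file)
toy_df <- data.frame(
  ID = 1:4,
  Brilliance1 = c(3, NA, 4, 2),
  Brilliance2 = c(5, 4, NA, 1)
)

sum(is.na(toy_df))               # total number of missing cells: 2
colSums(is.na(toy_df))           # missing cells per column: ID 0, Brilliance1 1, Brilliance2 1
which(!complete.cases(toy_df))   # rows with at least one missing value: 2 3
```

On the real data, the same calls would be colSums(is.na(edcogData)) and which(!complete.cases(edcogData)).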

1. Create aggregate scores for each dimension (Brilliance, Fixed, Growth, Nonuniversal, Universal).

edcogData$Brilliance <- rowMeans(edcogData[,c("Brilliance1", "Brilliance2", "Brilliance3", "Brilliance4", "Brilliance5")])

edcogData$Growth <- rowMeans(edcogData[,c("MindsetGrowth1", "MindsetGrowth2", "MindsetGrowth3", "MindsetGrowth4", "MindsetGrowth5")])

edcogData$Fixed <- rowMeans(edcogData[,c("MindsetFixed1", "MindsetFixed2", "MindsetFixed3", "MindsetFixed4", "MindsetFixed5")])

edcogData$Universal <- rowMeans(edcogData[,c("Universality1", "Universality2", "Universality3", "Universality4", "Universality5")])

edcogData$Nonuniversal <- rowMeans(edcogData[,c("Nonuniversality1", "Nonuniversality2", "Nonuniversality3", "Nonuniversality4", "Nonuniversality5")])
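The five rowMeans() calls above repeat the same pattern. As an optional alternative, the item columns can be picked out by their name prefix inside a loop. A minimal sketch on a hypothetical toy data frame, assuming (as in EdCogData) that every item column is its dimension's prefix followed by an item number:

```r
# Hypothetical toy data: two participants, two items per dimension
toy <- data.frame(
  ID = 1:2,
  Universality1 = c(4, 2), Universality2 = c(5, 3),
  Nonuniversality1 = c(1, 3), Nonuniversality2 = c(2, 5)
)

# Aggregate-score name = item-name prefix (same naming pattern as EdCogData)
prefixes <- c(Universal = "Universality", Nonuniversal = "Nonuniversality")

for (agg in names(prefixes)) {
  # "^" anchors the match at the start of the name and "[0-9]+$" requires the
  # name to end in an item number, so "^Universality[0-9]+$" matches
  # Universality1 and Universality2 but not Nonuniversality1 or any
  # aggregate column created earlier in the loop
  pattern <- paste0("^", prefixes[[agg]], "[0-9]+$")
  item_cols <- grep(pattern, names(toy), value = TRUE)
  toy[[agg]] <- rowMeans(toy[, item_cols, drop = FALSE])
}

toy[, c("ID", "Universal", "Nonuniversal")]
```

For EdCogData, the prefixes would be c(Brilliance = "Brilliance", Growth = "MindsetGrowth", Fixed = "MindsetFixed", Universal = "Universality", Nonuniversal = "Nonuniversality"), with edcogData in place of toy.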

2. Create a new data frame named “edcog.agg.wide” that contains only the ID column and the aggregated score columns from “edcogData”.

edcog.agg.wide <- edcogData %>% select(ID, Brilliance, Fixed, Growth, Nonuniversal, Universal)

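3. Convert “edcog.agg.wide” from wide to long format and name the result “edcog.agg.long”. It should have the following columns: ID, Dimension (the name of the dimension), and AggregateScore (the participant’s aggregate score on that dimension).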

edcog.agg.long <- edcog.agg.wide %>%

select(ID, Brilliance, Fixed, Growth, Nonuniversal, Universal) %>%

pivot_longer(

cols = -ID, # Select all columns except for ID

names_to = "Dimension",

values_to = "AggregateScore" )

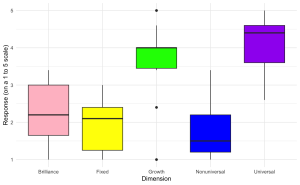
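To see what pivot_longer() does to the shape of the data, the same reshaping can be written by hand in base R. A minimal sketch with a hypothetical two-participant, two-dimension data frame (toy_wide is made up). Note that pivot_longer() orders rows by ID first, whereas this version stacks one dimension after another, so the row order differs while the contents are the same:

```r
# Hypothetical wide data: one row per participant, one column per dimension
toy_wide <- data.frame(ID = c(1, 2), Brilliance = c(2.4, 3.0), Fixed = c(1.8, 2.2))

dims <- setdiff(names(toy_wide), "ID")   # every column except ID

# Long data: one row per participant-by-dimension combination
toy_long <- data.frame(
  ID = rep(toy_wide$ID, times = length(dims)),
  Dimension = rep(dims, each = nrow(toy_wide)),
  AggregateScore = unlist(toy_wide[dims], use.names = FALSE),
  stringsAsFactors = FALSE
)

toy_long
nrow(toy_long) == nrow(toy_wide) * length(dims)  # TRUE
```

The same row-count check applies to the real data: nrow(edcog.agg.long) should equal nrow(edcog.agg.wide) * 5.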

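1. Create a boxplot to visualize the distribution of aggregate scores across the five dimensions (Brilliance, Fixed, Growth, Nonuniversal, Universal) with the following specifications: fill each box by dimension (Brilliance = pink, Fixed = yellow, Growth = green, Nonuniversal = blue, Universal = purple), label the y-axis “Response (on a 1 to 5 scale)” and the x-axis “Dimension”, use theme_minimal(), and hide the legend.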

ggplot(edcog.agg.long, aes(x = Dimension, y = AggregateScore, fill = Dimension)) +

geom_boxplot() +

scale_fill_manual(values = c("Brilliance" = "pink", "Fixed" = "yellow",

"Growth" = "green", "Nonuniversal" = "blue",

"Universal" = "purple")) +

labs(y = "Response (on a 1 to 5 scale)", x = "Dimension") +

theme_minimal() +

theme(legend.position = "none") # Hide the legend since color coding is evident

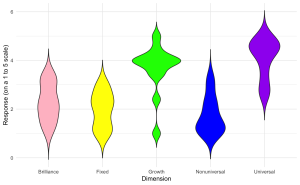
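A boxplot summarizes each dimension with its median (the line inside the box) and its interquartile range (the height of the box). If you want to report those numbers alongside the plot, they can be computed directly. A minimal sketch on a small hypothetical long-format data frame with the same column names as edcog.agg.long; ggplot2 bases its boxes on sample quantiles, so these values should line up with the plotted boxes:

```r
# Hypothetical long-format scores (same column names as edcog.agg.long)
toy_long <- data.frame(
  Dimension = rep(c("Brilliance", "Growth"), each = 4),
  AggregateScore = c(2.0, 2.4, 3.0, 3.6, 3.8, 4.0, 4.2, 4.8)
)

# Median (the line inside each box) and interquartile range (the box height)
medians <- tapply(toy_long$AggregateScore, toy_long$Dimension, median)
iqrs <- tapply(toy_long$AggregateScore, toy_long$Dimension, IQR)
cbind(median = medians, IQR = iqrs)
```

On the real data, replace toy_long with edcog.agg.long.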

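2. Generate a violin plot to visualize the distribution of aggregate scores for the five dimensions (Brilliance, Fixed, Growth, Nonuniversal, Universal) with the following specifications: untrimmed violins (trim = FALSE), fill colours by dimension (Brilliance = pink, Fixed = yellow, Growth = green, Nonuniversal = blue, Universal = purple), the y-axis labelled “Response (on a 1 to 5 scale)” and the x-axis labelled “Dimension”, theme_minimal(), and no legend.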

ggplot(edcog.agg.long, aes(x = Dimension, y = AggregateScore, fill = Dimension)) +

geom_violin(trim = FALSE) +

scale_fill_manual(values = c("Brilliance" = "pink", "Fixed" = "yellow",

"Growth" = "green", "Nonuniversal" = "blue",

"Universal" = "purple")) +

labs(y = "Response (on a 1 to 5 scale)", x = "Dimension") +

theme_minimal() + theme(legend.position = "none")

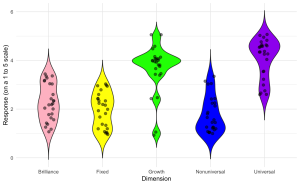
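Each violin is a kernel density estimate of the scores, mirrored on both sides of its axis. With trim = FALSE, the density is drawn beyond the lowest and highest observed scores instead of being cut off at them, so a violin can extend past the observed data (and even past the ends of the 1 to 5 response scale). A minimal base R sketch of the same idea, using density() on a hypothetical vector of scores; ggplot2 uses its own density settings, so the exact curve may differ:

```r
# Hypothetical aggregate scores for one dimension
scores <- c(2.2, 2.6, 3.0, 3.0, 3.4, 3.8, 4.2)

# density() evaluates a Gaussian kernel density estimate on a grid.
# By default (cut = 3) the grid runs 3 bandwidths past each end of the data,
# similar in spirit to geom_violin(trim = FALSE)
dens <- density(scores)
range(scores)   # observed range: 2.2 4.2
range(dens$x)   # grid extends beyond the observed range

# cut = 0 stops the grid at the observed minimum and maximum,
# similar in spirit to geom_violin(trim = TRUE)
dens_trimmed <- density(scores, cut = 0)
range(dens_trimmed$x)
```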

3. Enhance the violin plot by overlaying the individual data points, so that the raw scores are shown alongside the estimated densities.

ggplot(edcog.agg.long, aes(x = Dimension, y = AggregateScore, fill = Dimension)) +

geom_violin(trim = FALSE) +

geom_jitter(width = 0.1, height = 0, size = 2, alpha = 0.5) + # 'width' sets the horizontal jitter, 'height = 0' keeps each point at its actual score, 'size' sets point size, and 'alpha' sets transparency

scale_fill_manual(values = c("Brilliance" = "pink", "Fixed" = "yellow",

"Growth" = "green", "Nonuniversal" = "blue",

"Universal" = "purple")) +

labs(y = "Response (on a 1 to 5 scale)", x = "Dimension") +

theme_minimal() +

theme(legend.position = "none")

You are a clinical researcher at the Hamilton General Hospital, and your lab is studying how people grieve and cope with the loss of a loved one. Specifically, some people ruminate when they grieve, and so your lab is interested in understanding the different ways in which this grief-related rumination manifests in people. Andrews et al. (2021) recently developed the Bereavement Analytical Rumination Questionnaire (BARQ) to evaluate two dimensions of rumination: 1) the cause of the loss (i.e., root cause analysis – RCA) and 2) how an individual reinvests their time meaningfully following the loss (i.e., reinvestment analysis – RIA).

Your lab is curious about the following questions:

Similar to Andrews et al. (2021), your lab decides to collect the following information from a questionnaire distributed to 50 respondents:

To answer the lab’s questions, please run the following analyses.

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-6

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-54

2. Conduct a confirmatory factor analysis (CFA) on the seven items of the BARQ. Items 1-4 should form the latent factor RCA, and items 5-7 should form the latent factor RIA.
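In lavaan model syntax, `=~` reads “is measured by”, so the two-factor structure described above can be written as one line per latent factor. A minimal sketch, assuming the item columns are named BARQ1 to BARQ7 (hypothetical names; use the names in your dataset) and that the lavaan package is installed. Because the real data are not loaded here, the sketch fits the model to data simulated from a made-up population model, only to show the calls:

```r
# Two-factor CFA model: items 1-4 load on RCA, items 5-7 on RIA
# (BARQ1 ... BARQ7 are hypothetical column names)
barq_model <- "
  RCA =~ BARQ1 + BARQ2 + BARQ3 + BARQ4
  RIA =~ BARQ5 + BARQ6 + BARQ7
"

if (requireNamespace("lavaan", quietly = TRUE)) {
  # Made-up population model used only to simulate example data
  pop_model <- "
    RCA =~ 0.7*BARQ1 + 0.7*BARQ2 + 0.7*BARQ3 + 0.7*BARQ4
    RIA =~ 0.7*BARQ5 + 0.7*BARQ6 + 0.7*BARQ7
    RCA ~~ 0.3*RIA
  "
  sim_data <- lavaan::simulateData(pop_model, sample.nobs = 50, seed = 1)

  # Fit the CFA; std.lv = TRUE fixes each latent variance to 1
  fit <- lavaan::cfa(barq_model, data = sim_data, std.lv = TRUE)
  print(lavaan::fitMeasures(fit, c("cfi", "tli", "rmsea", "srmr")))
  print(lavaan::standardizedSolution(fit))
}
```

With the real data, replace sim_data with your data frame and the item names with the actual column names. lavaan::lavPredict(fit) then returns each participant's estimated RCA and RIA factor scores.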

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-55

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-56

3. Compare RCA and RIA latent factor means between the following groups. Which comparisons have statistically significant differences in the RCA and RIA means?
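The comparison groups themselves are listed in the online exercise. One simple approach, not necessarily the one the exercise intends, is to compare participants' estimated factor scores between two groups with a Welch t-test. A more rigorous alternative is a multi-group CFA (for example, lavaan's cfa() with its group and group.equal arguments) that tests the latent means directly. A minimal sketch of the t-test approach on simulated factor scores (the group labels and values are hypothetical):

```r
set.seed(42)
# Hypothetical RCA factor scores for two made-up groups of 25 participants
toy_scores <- data.frame(
  Group = rep(c("GroupA", "GroupB"), each = 25),
  RCA = c(rnorm(25, mean = 0), rnorm(25, mean = 0.5))
)

# Welch two-sample t-test (t.test() does not assume equal variances by default)
rca_test <- t.test(RCA ~ Group, data = toy_scores)
rca_test$p.value   # compare against your alpha level, e.g. 0.05
```

The same call with RIA as the outcome covers the second factor; comparisons involving more than two groups would need an ANOVA (aov()) instead.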

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-57

4. The three time variables in the questionnaire (age of the deceased, current age of the respondent, and time passed since the death) may exhibit multicollinearity. For instance, the age of the deceased and the age of the respondent may be strongly correlated, especially when the deceased was the respondent’s child. To test for multicollinearity, assess the variance inflation factor (VIF) of a regression model that includes the three time variables as predictors of RCA and RIA. A VIF of 1 means that a predictor is uncorrelated with the other predictors, while a VIF above 5 is commonly taken to indicate high correlation. Because VIFs depend only on the predictors, the values are the same whether RCA or RIA is the outcome.
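The VIF for predictor j is 1 / (1 - R^2_j), where R^2_j is the R-squared from regressing predictor j on the other predictors. A minimal base R sketch of that formula on simulated data (the variable names and values are hypothetical); the car package's vif() function computes the same quantity from a fitted lm() model:

```r
set.seed(1)
n <- 50
# Hypothetical time variables; respondent age is built to track the
# deceased's age so that the two are collinear
age_deceased <- runif(n, 5, 60)
age_respondent <- age_deceased + 28 + rnorm(n, sd = 4)
years_since <- runif(n, 0.5, 10)
preds <- data.frame(age_deceased, age_respondent, years_since)

# VIF_j = 1 / (1 - R^2_j), where R^2_j comes from regressing predictor j
# on all of the other predictors
vif_manual <- sapply(names(preds), function(j) {
  others <- setdiff(names(preds), j)
  r2 <- summary(lm(reformulate(others, response = j), data = preds))$r.squared
  1 / (1 - r2)
})
round(vif_manual, 2)
```

Here age_deceased and age_respondent get large VIFs while years_since stays near 1. With the real data, the equivalent would be car::vif() applied to an lm() of RCA (or RIA) on the three time variables.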

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-58

5. Create a scatterplot of each participant’s latent RCA factor score (y-axis) against the age of the deceased child (x-axis).

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=41#h5p-59

Andrews, P. W., Altman, M., Sevcikova, M., & Cacciatore, J. (2021). An evolutionary approach to grief-related rumination: Construction and validation of the Bereavement Analytical Rumination Questionnaire. Evolution and Human Behavior, 42(5), 441-452.

Hollywood studios and movie producers are keenly interested in determining what types of story scripts will resonate with audiences and critics. A few budding screenwriters approach you, a social psychology researcher specializing in qualitative content analysis, to analyze movie plots so that they can determine which types of storylines may appeal to mainstream viewers. A random sample of 150 movies released in the last five years was selected and analyzed for plot and character traits. For this exploratory study, you decide to analyze the following six narrative characteristics: 1) genre, 2) plot shape, 3) protagonist goal type, 4) protagonist agency, 5) protagonist cooperativeness, and 6) protagonist assertiveness. You are interested in how these characteristics relate to two outcomes: 1) the movie’s average critic rating (as a percentage score) and 2) the movie’s net profit (in US dollars).

To analyze these six narrative characteristics, you adopt Brown & Tu’s (2020) scheme for plot and Berry & Brown’s (2017) classification scheme for literary characters. The coding schemes for the narrative characteristics are as follows:

| Label | Code |
|---|---|
| Drama | 1 |
| Comedy | 2 |
| Romance | 3 |
| Action | 4 |
| Horror | 5 |

| Label | Code |
|---|---|
| Fall-Rise | 1 |
| Fall-Rise-Fall | 2 |
| Rise-Fall | 3 |
| Rise-Fall-Rise | 4 |

| Label | Code |
|---|---|
| Striving | 1 |
| Coping | 2 |

| Label | Code |
|---|---|
| High | 1 |
| Medium | 2 |
| Low | 3 |

| Label | Code |
|---|---|
| High | 1 |
| Medium | 2 |
| Low | 3 |
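Because the characteristics are stored as numeric codes, it can help to convert them to labelled factors before tabulating or plotting, so that R treats them as categories rather than numbers and shows the labels in its output. A minimal sketch for the genre codes; genre_code is a hypothetical vector of codes:

```r
# Hypothetical genre codes for four movies
genre_code <- c(1, 4, 4, 2)

# Map codes to labels using the genre coding scheme (1 = Drama, ..., 5 = Horror)
genre <- factor(genre_code,
                levels = 1:5,
                labels = c("Drama", "Comedy", "Romance", "Action", "Horror"))

table(genre)   # counts include genres with zero movies (Romance, Horror)
```

For the High/Medium/Low scales, adding ordered = TRUE (for example, factor(x, levels = 3:1, labels = c("Low", "Medium", "High"), ordered = TRUE)) keeps their natural order.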

You also recruit a second coder to assess the inter-rater reliability of your coding method.
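Cohen’s kappa is a common index of agreement between two coders on categorical codes. It compares the observed agreement p_o with the agreement p_e expected by chance from each coder’s category proportions: kappa = (p_o - p_e) / (1 - p_e). A minimal base R sketch with made-up codes from two coders (coder1, coder2, and cohens_kappa() are hypothetical names). Packages such as irr offer ready-made versions (for example, irr::kappa2()). For the ordered High/Medium/Low codes, a weighted kappa, which gives partial credit for near-misses, may be more appropriate:

```r
# Hypothetical goal-type codes from two coders for six movies
# (1 = Striving, 2 = Coping)
coder1 <- c(1, 1, 2, 2, 1, 2)
coder2 <- c(1, 2, 2, 2, 1, 2)

cohens_kappa <- function(r1, r2) {
  # Use the same category levels for both coders so the table is square
  lv <- sort(unique(c(r1, r2)))
  tab <- table(factor(r1, levels = lv), factor(r2, levels = lv))
  p <- tab / sum(tab)                     # joint proportions
  p_obs <- sum(diag(p))                   # observed agreement
  p_exp <- sum(rowSums(p) * colSums(p))   # agreement expected by chance
  (p_obs - p_exp) / (1 - p_exp)
}

cohens_kappa(coder1, coder2)
```

Here the coders agree on 5 of 6 movies (p_o = 5/6) and chance agreement is p_e = 0.5, giving kappa = 2/3.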

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=43#h5p-60

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=43#h5p-61

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=43#h5p-62

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=43#h5p-63

4. Visualize these plot shape distributions across each genre in a grouped bar plot. Be sure to label the y-axis, x-axis, and legend.
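One way to build the grouped bar plot is to cross-tabulate plot shape by genre and draw the counts side by side. A minimal base R sketch with hypothetical labels for eight movies (genre and shape are made-up vectors). With ggplot2, geom_bar(position = "dodge") with fill mapped to plot shape gives a similar grouped layout:

```r
# Hypothetical genre and plot-shape labels for eight movies
genre <- factor(c("Drama", "Drama", "Comedy", "Action",
                  "Comedy", "Drama", "Action", "Comedy"))
shape <- factor(c("Rise-Fall", "Fall-Rise", "Fall-Rise", "Rise-Fall-Rise",
                  "Fall-Rise", "Rise-Fall", "Rise-Fall-Rise", "Fall-Rise-Fall"))

# Contingency table: rows = plot shape, columns = genre
counts <- table(shape, genre)
counts

# beside = TRUE places the bars for each genre side by side (grouped) instead
# of stacking them; legend.text = TRUE builds the legend from the row names
barplot(counts, beside = TRUE, legend.text = TRUE,
        xlab = "Genre", ylab = "Number of movies",
        args.legend = list(title = "Plot shape"))
```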

An interactive H5P element has been excluded from this version of the text. You can view it online here:

https://ecampusontario.pressbooks.pub/radpnb/?p=43#h5p-64